########################################################

## ADD DERIVED AGGREGATED COLUMN FROM ALL DATA SET##

#############################################################

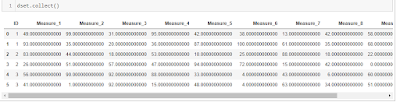

corr_cols = list(map(lambda y: y[0], filter(lambda x: (x[1]=='DOUBLE' or x[1]=='DECIMAL'), dset.dtypes())))

corr_cols

#define the group by clm

groupby_col = 'ID'

#define the aggr functions

agg = [ 'count','avg', 'stddev', 'min', 'max', 'median']

agg_sql = []

for operation in agg:

agg_string = map(lambda x: tuple((operation, x, operation+'_'+x)), corr_cols)

agg_sql.extend(agg_string)

dset1 = dset.agg(agg_sql, group_by=groupby_col)

#Run Statement

#dset1.collect()

#Get the sql generated

dset1.select_statement

SAP HANA ML code

This code implements the steps required to produce the result ( new aggregated columns ) that we want. Initially, we are keeping on a list only the columns which are type of double or decimal .Then we define,

◉ The column from the table that we want the grouping to applied. (groupby_col = ‘ID’)

◉ The aggregated functions that we want to create on these columns (agg = [ ‘count’,’avg’, ‘stddev’, ‘min’, ‘max’, ‘median’])

agg_list : A list of tuples. Each tuple is a triplet. The triplet consists of (aggregate_operator, expression, name) where:

◉ aggregate_operator is one of [‘max’, ‘min’, ‘count’, ‘avg’]

◉ expression is a str that is a column or column expression name that is the name of this aggregate in the project list.

group_by : str or list of str. The group by column. Only a column is allowed although expressions are allowed in SQL.

For every derived column, we build the corresponding name with the following syntax ,

“{Aggregation}_{Initial column name}”

Then by using the select_statement function, we gather the constructed SQL.

'SELECT "ID",

count("Measure_1") AS "count_Measure_1",

count("Measure_2") AS "count_Measure_2",

count("Measure_3") AS "count_Measure_3",

count("Measure_4") AS "count_Measure_4",

count("Measure_5") AS "count_Measure_5",

count("Measure_6") AS "count_Measure_6",

count("Measure_7") AS "count_Measure_7",

count("Measure_8") AS "count_Measure_8",

count("Measure_9") AS "count_Measure_9",

count("Measure_10") AS "count_Measure_10",

avg("Measure_1") AS "avg_Measure_1",

avg("Measure_2") AS "avg_Measure_2",

avg("Measure_3") AS "avg_Measure_3",

avg("Measure_4") AS "avg_Measure_4",

avg("Measure_5") AS "avg_Measure_5",

avg("Measure_6") AS "avg_Measure_6",

avg("Measure_7") AS "avg_Measure_7",

avg("Measure_8") AS "avg_Measure_8",

avg("Measure_9") AS "avg_Measure_9",

avg("Measure_10") AS "avg_Measure_10",

stddev("Measure_1") AS "stddev_Measure_1",

stddev("Measure_2") AS "stddev_Measure_2",

stddev("Measure_3") AS "stddev_Measure_3",

stddev("Measure_4") AS "stddev_Measure_4",

stddev("Measure_5") AS "stddev_Measure_5",

stddev("Measure_6") AS "stddev_Measure_6",

stddev("Measure_7") AS "stddev_Measure_7",

stddev("Measure_8") AS "stddev_Measure_8",

stddev("Measure_9") AS "stddev_Measure_9",

stddev("Measure_10") AS "stddev_Measure_10",

min("Measure_1") AS "min_Measure_1",

min("Measure_2") AS "min_Measure_2",

min("Measure_3") AS "min_Measure_3",

min("Measure_4") AS "min_Measure_4",

min("Measure_5") AS "min_Measure_5",

min("Measure_6") AS "min_Measure_6",

min("Measure_7") AS "min_Measure_7",

min("Measure_8") AS "min_Measure_8",

min("Measure_9") AS "min_Measure_9",

min("Measure_10") AS "min_Measure_10",

max("Measure_1") AS "max_Measure_1",

max("Measure_2") AS "max_Measure_2",

max("Measure_3") AS "max_Measure_3",

max("Measure_4") AS "max_Measure_4",

max("Measure_5") AS "max_Measure_5",

max("Measure_6") AS "max_Measure_6",

max("Measure_7") AS "max_Measure_7",

max("Measure_8") AS "max_Measure_8",

max("Measure_9") AS "max_Measure_9",

max("Measure_10") AS "max_Measure_10",

median("Measure_1") AS "median_Measure_1",

median("Measure_2") AS "median_Measure_2",

median("Measure_3") AS "median_Measure_3",

median("Measure_4") AS "median_Measure_4",

median("Measure_5") AS "median_Measure_5",

median("Measure_6") AS "median_Measure_6",

median("Measure_7") AS "median_Measure_7",

median("Measure_8") AS "median_Measure_8",

median("Measure_9") AS "median_Measure_9",

median("Measure_10") AS "median_Measure_10"

FROM (SELECT * FROM DEMO_USER.DEMO_AGG ) AS "DT_0" GROUP BY "ID"'

Extending the current scenario with even more aggregations based on a specific column, for example column ID on our case, it is really easy to implement. A final usage of SAP HANA ML join function is needed.

Final code

#############################################################

## ADD DERIVED AGGREGATED COLUMN FROM ALL DATA SET##

#############################################################

corr_cols = list(map(lambda y: y[0], filter(lambda x: (x[1]=='DOUBLE' or x[1]=='DECIMAL'), dset.dtypes())))

corr_cols

#define the group by clm

groupby_col = 'ID'

#define the aggr functions

agg = [ 'count','avg', 'stddev', 'min', 'max', 'median']

agg_sql = []

for operation in agg:

agg_string = map(lambda x: tuple((operation, x, operation+'_'+x)), corr_cols)

agg_sql.extend(agg_string)

dset1 = dset.agg(agg_sql, group_by=groupby_col)

#Run Statement

#dset1.collect()

#Get the sql generated

#dset1.select_statement

#############################################################

#############################################################

## ADD DERIVED AGGREGATED COLUMN PARTITION BY THE ID COLUMN##

#############################################################

agg = [ 'count','avg', 'stddev', 'min', 'max', 'median']

agg_sql = []

for operation in agg:

agg_string = map(lambda x :'%s("%s") over (partition by ID) AS "pct_%s_%s"'%(operation,x,operation,x),corr_cols)

agg_sql.extend(agg_string)

agg_string =", ".join(agg_sql)

df_window_fct_ren = conn.sql('select distinct * from (select "ID", ' + agg_string + ' from DEMO_USER.DEMO_AGG)')

result = dset1.set_index('ID').join(df_window_fct_ren.set_index('ID'))

#Run Statement

#result.collect()

#Get the sql generated - FINAL#

result.select_statement

#############################################################

Again, by using the select_statement function, we gather the new constructed SQL.

SELECT T0."ID",

T0."count_Measure_1",

T0."count_Measure_2",

T0."count_Measure_3",

T0."count_Measure_4",

T0."count_Measure_5",

T0."count_Measure_6",

T0."count_Measure_7",

T0."count_Measure_8",

T0."count_Measure_9",

T0."count_Measure_10",

T0."avg_Measure_1",

T0."avg_Measure_2",

T0."avg_Measure_3",

T0."avg_Measure_4",

T0."avg_Measure_5",

T0."avg_Measure_6",

T0."avg_Measure_7",

T0."avg_Measure_8",

T0."avg_Measure_9",

T0."avg_Measure_10",

T0."stddev_Measure_1",

T0."stddev_Measure_2",

T0."stddev_Measure_3",

T0."stddev_Measure_4",

T0."stddev_Measure_5",

T0."stddev_Measure_6",

T0."stddev_Measure_7",

T0."stddev_Measure_8",

T0."stddev_Measure_9",

T0."stddev_Measure_10",

T0."min_Measure_1",

T0."min_Measure_2",

T0."min_Measure_3",

T0."min_Measure_4",

T0."min_Measure_5",

T0."min_Measure_6",

T0."min_Measure_7",

T0."min_Measure_8",

T0."min_Measure_9",

T0."min_Measure_10",

T0."max_Measure_1",

T0."max_Measure_2",

T0."max_Measure_3",

T0."max_Measure_4",

T0."max_Measure_5",

T0."max_Measure_6",

T0."max_Measure_7",

T0."max_Measure_8",

T0."max_Measure_9",

T0."max_Measure_10",

T0."median_Measure_1",

T0."median_Measure_2",

T0."median_Measure_3",

T0."median_Measure_4",

T0."median_Measure_5",

T0."median_Measure_6",

T0."median_Measure_7",

T0."median_Measure_8",

T0."median_Measure_9",

T0."median_Measure_10",

T1."pct_count_Measure_1",

T1."pct_count_Measure_2",

T1."pct_count_Measure_3",

T1."pct_count_Measure_4",

T1."pct_count_Measure_5",

T1."pct_count_Measure_6",

T1."pct_count_Measure_7",

T1."pct_count_Measure_8",

T1."pct_count_Measure_9",

T1."pct_count_Measure_10",

T1."pct_avg_Measure_1",

T1."pct_avg_Measure_2",

T1."pct_avg_Measure_3",

T1."pct_avg_Measure_4",

T1."pct_avg_Measure_5",

T1."pct_avg_Measure_6",

T1."pct_avg_Measure_7",

T1."pct_avg_Measure_8",

T1."pct_avg_Measure_9",

T1."pct_avg_Measure_10",

T1."pct_stddev_Measure_1",

T1."pct_stddev_Measure_2",

T1."pct_stddev_Measure_3",

T1."pct_stddev_Measure_4",

T1."pct_stddev_Measure_5",

T1."pct_stddev_Measure_6",

T1."pct_stddev_Measure_7",

T1."pct_stddev_Measure_8",

T1."pct_stddev_Measure_9",

T1."pct_stddev_Measure_10",

T1."pct_min_Measure_1",

T1."pct_min_Measure_2",

T1."pct_min_Measure_3",

T1."pct_min_Measure_4",

T1."pct_min_Measure_5",

T1."pct_min_Measure_6",

T1."pct_min_Measure_7",

T1."pct_min_Measure_8",

T1."pct_min_Measure_9",

T1."pct_min_Measure_10",

T1."pct_max_Measure_1",

T1."pct_max_Measure_2",

T1."pct_max_Measure_3",

T1."pct_max_Measure_4",

T1."pct_max_Measure_5",

T1."pct_max_Measure_6",

T1."pct_max_Measure_7",

T1."pct_max_Measure_8",

T1."pct_max_Measure_9",

T1."pct_max_Measure_10",

T1."pct_median_Measure_1",

T1."pct_median_Measure_2",

T1."pct_median_Measure_3",

T1."pct_median_Measure_4",

T1."pct_median_Measure_5",

T1."pct_median_Measure_6",

T1."pct_median_Measure_7",

T1."pct_median_Measure_8",

T1."pct_median_Measure_9",

T1."pct_median_Measure_10"\nFROM (SELECT "ID",

count("Measure_1") AS "count_Measure_1",

count("Measure_2") AS "count_Measure_2",

count("Measure_3") AS "count_Measure_3",

count("Measure_4") AS "count_Measure_4",

count("Measure_5") AS "count_Measure_5",

count("Measure_6") AS "count_Measure_6",

count("Measure_7") AS "count_Measure_7",

count("Measure_8") AS "count_Measure_8",

count("Measure_9") AS "count_Measure_9",

count("Measure_10") AS "count_Measure_10",

avg("Measure_1") AS "avg_Measure_1",

avg("Measure_2") AS "avg_Measure_2",

avg("Measure_3") AS "avg_Measure_3",

avg("Measure_4") AS "avg_Measure_4",

avg("Measure_5") AS "avg_Measure_5",

avg("Measure_6") AS "avg_Measure_6",

avg("Measure_7") AS "avg_Measure_7",

avg("Measure_8") AS "avg_Measure_8",

avg("Measure_9") AS "avg_Measure_9",

avg("Measure_10") AS "avg_Measure_10",

stddev("Measure_1") AS "stddev_Measure_1",

stddev("Measure_2") AS "stddev_Measure_2",

stddev("Measure_3") AS "stddev_Measure_3",

stddev("Measure_4") AS "stddev_Measure_4",

stddev("Measure_5") AS "stddev_Measure_5",

stddev("Measure_6") AS "stddev_Measure_6",

stddev("Measure_7") AS "stddev_Measure_7",

stddev("Measure_8") AS "stddev_Measure_8",

stddev("Measure_9") AS "stddev_Measure_9",

stddev("Measure_10") AS "stddev_Measure_10",

min("Measure_1") AS "min_Measure_1",

min("Measure_2") AS "min_Measure_2",

min("Measure_3") AS "min_Measure_3",

min("Measure_4") AS "min_Measure_4",

min("Measure_5") AS "min_Measure_5",

min("Measure_6") AS "min_Measure_6",

min("Measure_7") AS "min_Measure_7",

min("Measure_8") AS "min_Measure_8",

min("Measure_9") AS "min_Measure_9",

min("Measure_10") AS "min_Measure_10",

max("Measure_1") AS "max_Measure_1",

max("Measure_2") AS "max_Measure_2",

max("Measure_3") AS "max_Measure_3",

max("Measure_4") AS "max_Measure_4",

max("Measure_5") AS "max_Measure_5",

max("Measure_6") AS "max_Measure_6",

max("Measure_7") AS "max_Measure_7",

max("Measure_8") AS "max_Measure_8",

max("Measure_9") AS "max_Measure_9",

max("Measure_10") AS "max_Measure_10",

median("Measure_1") AS "median_Measure_1",

median("Measure_2") AS "median_Measure_2",

median("Measure_3") AS "median_Measure_3",

median("Measure_4") AS "median_Measure_4",

median("Measure_5") AS "median_Measure_5",

median("Measure_6") AS "median_Measure_6",

median("Measure_7") AS "median_Measure_7",

median("Measure_8") AS "median_Measure_8",

median("Measure_9") AS "median_Measure_9",

median("Measure_10") AS "median_Measure_10"

FROM (SELECT * FROM DEMO_USER.DEMO_AGG\n ) AS "DT_0" GROUP BY "ID") T0

INNER JOIN (select distinct * from (select "ID",

count("Measure_1") over (partition by ID) AS "pct_count_Measure_1",

count("Measure_2") over (partition by ID) AS "pct_count_Measure_2",

count("Measure_3") over (partition by ID) AS "pct_count_Measure_3",

count("Measure_4") over (partition by ID) AS "pct_count_Measure_4",

count("Measure_5") over (partition by ID) AS "pct_count_Measure_5",

count("Measure_6") over (partition by ID) AS "pct_count_Measure_6",

count("Measure_7") over (partition by ID) AS "pct_count_Measure_7",

count("Measure_8") over (partition by ID) AS "pct_count_Measure_8",

count("Measure_9") over (partition by ID) AS "pct_count_Measure_9",

count("Measure_10") over (partition by ID) AS "pct_count_Measure_10",

avg("Measure_1") over (partition by ID) AS "pct_avg_Measure_1",

avg("Measure_2") over (partition by ID) AS "pct_avg_Measure_2",

avg("Measure_3") over (partition by ID) AS "pct_avg_Measure_3",

avg("Measure_4") over (partition by ID) AS "pct_avg_Measure_4",

avg("Measure_5") over (partition by ID) AS "pct_avg_Measure_5",

avg("Measure_6") over (partition by ID) AS "pct_avg_Measure_6",

avg("Measure_7") over (partition by ID) AS "pct_avg_Measure_7",

avg("Measure_8") over (partition by ID) AS "pct_avg_Measure_8",

avg("Measure_9") over (partition by ID) AS "pct_avg_Measure_9",

avg("Measure_10") over (partition by ID) AS "pct_avg_Measure_10",

stddev("Measure_1") over (partition by ID) AS "pct_stddev_Measure_1",

stddev("Measure_2") over (partition by ID) AS "pct_stddev_Measure_2",

stddev("Measure_3") over (partition by ID) AS "pct_stddev_Measure_3",

stddev("Measure_4") over (partition by ID) AS "pct_stddev_Measure_4",

stddev("Measure_5") over (partition by ID) AS "pct_stddev_Measure_5",

stddev("Measure_6") over (partition by ID) AS "pct_stddev_Measure_6",

stddev("Measure_7") over (partition by ID) AS "pct_stddev_Measure_7",

stddev("Measure_8") over (partition by ID) AS "pct_stddev_Measure_8",

stddev("Measure_9") over (partition by ID) AS "pct_stddev_Measure_9",

stddev("Measure_10") over (partition by ID) AS "pct_stddev_Measure_10",

min("Measure_1") over (partition by ID) AS "pct_min_Measure_1",

min("Measure_2") over (partition by ID) AS "pct_min_Measure_2",

min("Measure_3") over (partition by ID) AS "pct_min_Measure_3",

min("Measure_4") over (partition by ID) AS "pct_min_Measure_4",

min("Measure_5") over (partition by ID) AS "pct_min_Measure_5",

min("Measure_6") over (partition by ID) AS "pct_min_Measure_6",

min("Measure_7") over (partition by ID) AS "pct_min_Measure_7",

min("Measure_8") over (partition by ID) AS "pct_min_Measure_8",

min("Measure_9") over (partition by ID) AS "pct_min_Measure_9",

min("Measure_10") over (partition by ID) AS "pct_min_Measure_10",

max("Measure_1") over (partition by ID) AS "pct_max_Measure_1",

max("Measure_2") over (partition by ID) AS "pct_max_Measure_2",

max("Measure_3") over (partition by ID) AS "pct_max_Measure_3",

max("Measure_4") over (partition by ID) AS "pct_max_Measure_4",

max("Measure_5") over (partition by ID) AS "pct_max_Measure_5",

max("Measure_6") over (partition by ID) AS "pct_max_Measure_6",

max("Measure_7") over (partition by ID) AS "pct_max_Measure_7",

max("Measure_8") over (partition by ID) AS "pct_max_Measure_8",

max("Measure_9") over (partition by ID) AS "pct_max_Measure_9",

max("Measure_10") over (partition by ID) AS "pct_max_Measure_10",

median("Measure_1") over (partition by ID) AS "pct_median_Measure_1",

median("Measure_2") over (partition by ID) AS "pct_median_Measure_2",

median("Measure_3") over (partition by ID) AS "pct_median_Measure_3",

median("Measure_4") over (partition by ID) AS "pct_median_Measure_4",

median("Measure_5") over (partition by ID) AS "pct_median_Measure_5",

median("Measure_6") over (partition by ID) AS "pct_median_Measure_6",

median("Measure_7") over (partition by ID) AS "pct_median_Measure_7",

median("Measure_8") over (partition by ID) AS "pct_median_Measure_8",

median("Measure_9") over (partition by ID) AS "pct_median_Measure_9",

median("Measure_10") over (partition by ID) AS "pct_median_Measure_10" from DEMO_USER.DEMO_AGG)) T1

ON T0."ID" = T1."ID"

This example is based only on 10 columns, just to expose the power of SAP HANA ML. On a recent project, we had to handle more or less 800 measures coming from sensor data. Writing the sql only for those transformations would not be a nightmare only on matter of time to produce this SQL but also really difficult to make any changes. On few words, the win with this trick is not limited only to a fast and easily created SQL script but also a gain of having a scalable and automated process at our disposal.

No comments:

Post a Comment