Memory storage and Computing Power are fundamental blocks of Cloud Platforms and Infrastructure. The abundance of Memory storage and cost-effective computing power has ensured a lower baseline for cloud adoption. However, anything in excess without considering its consequences can corrupt our practices. I see a similar pattern with the cloud data models.

In SAP HANA, we replicate data predominantly from SAP systems through tables and CDS. Data replicated for tables may range from 1000s or rows to millions of records per month. The queries that join multiple tables without optimal selection of columns and filter conditions can consume the working memory resources. Eventually, despite the promise of infinite data and computing capacity(CC), the reality is there is a finite amount of memory and CC.

In this blog, I attempt to share my experience with efficient memory storage and loading of SAP HANA Column Tables.

My task was to manage the data in the BSEG Table.

Data Partition in Replication Task: Helps to improve performance during the data loading process, as it allows for parallel processing and reduces the load on the system.

The partitioning feature of the SAP HANA database splits column-store tables horizontally into disjunctive sub-tables or partitions. In this way, large tables can be broken down into smaller, more manageable parts. Partitioning is typically used in multiple-host systems, but it may also be beneficial in single-host systems.

◉ Since size limit on column-store tables is 2 billion, the limit is overcome by performing partitioning in the rep task. So, each partition can hold max 2 billion records

◉ Currently for BSEG we have performed Single-Level Partitioning based on “Range” for GJAHR values

The arrows in the above screenshot highlight the various options available to configure the partitions during the data load. (https://help.sap.com/docs/hana-cloud-database/sap-hana-cloud-sap-hana-database-administration-guide/...)

Data Movement in NSE(Native Storage Extension): Helps to optimize the In-memory storage without compromising on the performance of data retrieval. It used the NSE layer in HANA to storage records and they are termed as “Warm Storage”

Warm Data: Primarily read-only data that doesn’t need frequent access. Managed as part of the SAP HANA database, it participates in backup and system replication operations. Stored in lower-cost storage within SAP HANA.

When records in this store are requested, HANA uses the On-loading feature into the “Buffer Memory Cache”.

Once data use is over, HANA reloads the data from Buffer memory to NSE store.

In our scenario, we have only implemented it in HCD.

There may be some performance lag when used on Excel. However, the benefits of data out of in-memory is beneficial in this process.

We can have both types of partitions on a table. However, we have to be careful in planning on the tables to implement.

Our approach is a single table with partitions and Alter partition load characteristics.

ALTER TABLE ERP.BSEG

PARTITION BY RANGE (GJAHR) (

PARTITION 2023 <= VALUES < NULL COLUMN LOADABLE,

PARTITION VALUE < '2023' PAGE LOADABLE );

Later confirm the partition of the dataset using the below SQL statements

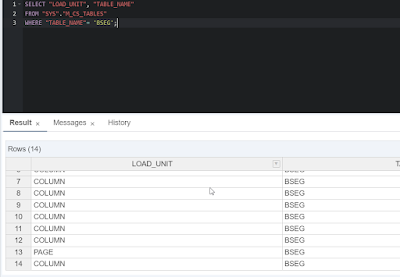

SELECT "LOAD_UNIT", "TABLE_NAME"

FROM "SYS"."M_CS_TABLES"

WHERE "TABLE_NAME"= 'BSEG';

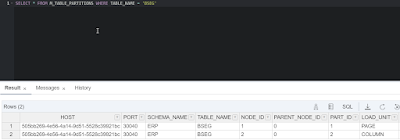

SELECT * FROM M_TABLE_PARTITIONS WHERE TABLE_NAME = ‘BSEG’;

Key Points on the Data Partition:

- The ALTER command for partitioning should typically be performed once, during the initial setup of the partitioning strategy. However, depending on your specific requirements, you may need to schedule it to run at certain events or intervals.

- For example, if you have a dynamic data set where new partitions need to be created periodically, you might schedule the ALTER command to run on a regular basis.

- Both types of partitions can be applied on a table. However, one needs to be cautious and judicious to monitor the load.

- If the ALTER command results in an error stating that a column is not a key, it means that the column we are trying to use for partitioning is not part of the primary key or a unique constraint of the table.

- In order to fix this situation, you need to ensure that the column you want to use for partitioning is part of the primary key or a unique constraint.

- If it is not, you may need to modify the table structure to include the necessary key or constraint.

- If the table structures are modified in the future, it can potentially affect the partitioning configuration.

- If you add or remove columns that are used for partitioning, you may need to update the partitioning strategy accordingly.

- Similarly, if you change the primary key or unique constraints of the table, it may also require adjustments to the partitioning configuration.

- It is important to carefully consider the impact of any structural changes on the partitioning strategy and make the necessary updates to ensure consistency.

No comments:

Post a Comment