Introduction

We will be addressing the data loading process in detail. How is data loaded from a source system? How is data loaded from one table to another within an HDI Container? What artifacts exists for loading? All relevant questions will be answered and considered with the help of our example project.

Data loading basics

In our first blog, we explained the fundamentals of XSA, while in our second blog we understood persistent structures and created our XSA example project. The XSA environment takes a new course when it comes to data loading as well, and uses its own services and structures.

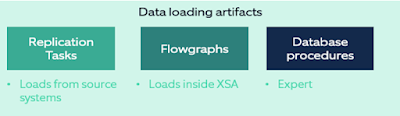

Every database needs data. Data loading plays a key role in data warehouse systems in particular, since the data is connected and harmonized from many different source systems. SAP offers a variety of artifacts to handle this task, which are summarized in the following figure.

Figure 1 – Artifacts for data loading

Replication tasks (.hdbreptask) are able to load data from source systems via virtual tables, whereby realtime, delta and simple transformations are possible. It is also possible to load datasources from an S/4HANA system, for instance.

Flowgraphs (.hdbflowgraph) are used for loading between persistent tables or views with harmonized processes. Standard nodes like projections, joins, or table comparisons can be used to easily implement the individual functions via the graphical interface.

Procedures (.hdbprocedure) are the expert solution, since SQLScript makes all options for data loading and manipulation available. We will not deal with the procedures further in this blog, since they are always structured specifically according to the use-case and often contain a complex logic. Therefore, we will be concentrating on the standard objects of the replication task and the flowgraph.

Connecting the source system

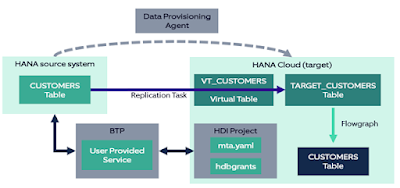

In this section, we would like to connect a HANA source system in our example project. Unfortunately, this cannot be completed in just a few clicks. Instead, we need a series of settings and tasks, where significant steps have to be completed outside of the BAS project. For our example project, we are pursuing the following goal: connecting a HANA system, loading the CUSTOMERS tables and loading to our CUSTOMERS table from the XSA project. In doing so, we want to expand our CUSTOMER table so that historization is possible. The target scenario looks like this:

Figure 2 – Overview of scenario

Unfortunately, many of the components must be manually structured and maintained. First, a Data Provisioning Agent (DPA) is required. This is a separate software component of SAP that is generally responsible for the exchange of data between systems. The task of setting up the DPA goes beyond the framework of this blog, so we will simply provide the following steps as a summary:

- Local installation of the DPA

- Start the service (Windows)

- Connect the DPA to the HANA Cloud instance

- Register an agent

- Install the adapter “HanaAdapter”

Now, the HANA system can be created in the Database Explorer as a new source system. This can be done, for instance, with the DBADMIN user.

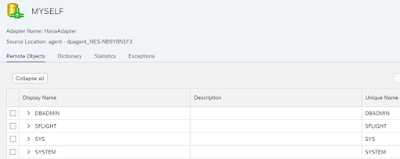

Figure 3 – Adding remote source

In our example, we are connecting our HANA Cloud instance with itself, so we will name the source system MYSELF and enter the actual host using the adapter “HanaAdapter.” If everything is set up correctly, the corresponding objects will be displayed under the new remote source.

Figure 4 – MYSELF

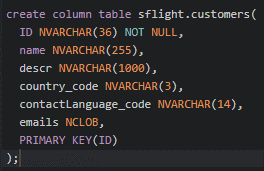

The next goal is to connect a table from the system. To do so, we will create a local database table in the previously created SFLIGHT schema. We will add two test records to this table. This simulates our source table in the source system.

Figure 5 – Source table

XSA projects live in their own HDI containers, and users within an HDI container only have restricted privileges. For our HDI user to receive authorizations for the new remote source, we need a technical role and a user who is centrally responsible for adjusting the remote source. The following SQL statements handle this task. Afterward, the user GRANTOR_SERVICE is authorized to create a virtual table on the remote source.

Figure 6 – Setting up Grantor Service

Next, we need a User Provided Service (UPS). This service can be used to centrally save the user’s authorizations and exchange them as needed. In addition, the entire database connection could be saved in a UPS, so switching to another system can be controlled using just one service. The UPS is created directly in the BTP under the corresponding space under “Instances.”

Figure 7 – Creating the UPS

Now, we need to add this service to our XSA project. To do so, we first expand the central control file of our project (mta.yaml) with the corresponding resource. The db module is also enhanced with the corresponding dependency.

Figure 8 – Expanding mta.yaml

Next, we create the “cfg” folder directly under the db folder. The name “cfg” must be used, since the files from this folder are deployed first, so authorizations on the source system are saved there. We create the file “myself.hdbgrants” in the new folder with the following content.

Figure 9 – Grants

This grants file gets the necessary authorizations for the remote source via the indicated service. Now, there is just one last adjustment before we can start data loading. The grants file refers to “ServiceName_1″. For development purposes (similar to a UPS), you cannot directly access services within an XSA project, but rather create a mapping. This mapping is saved in the .env file.

Figure 10 – Adjusting .env (1/2)

The .env file is used for local developments in your own HDI container to read the corresponding connection information. The UPS is then read from the BTP during deployment of the overall application.

A corresponding entry for the local UPS still needs to be added under “VCAP-SERVICES” in the same .env file. Since this new environment file is difficult to read, an old default-env.json can be created using the terminal command “hana-cli copy2DefaultEnv.”

Figure 11– Adjusting .env (2/2)

After adding the UPS, this file can then be converted again to the .env using “hana-cli copy2Env.”

Creating a replication task

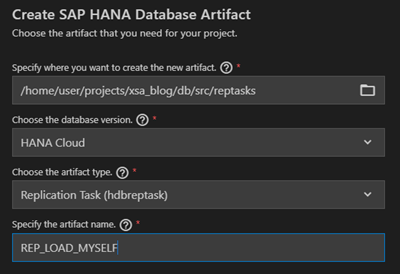

Whew! After all this work, we are now able to access the new source system. That means we can finally create a replication task. We create a “reptask” folder in the db folder, and create a new replication task there.

Basically, in a replication task a source system is selected, and individual objects that are to be loaded are updated. Multiple objects from one source system are possible in a replication task. An object always needs a virtual table and a persistent structure in which the data is to be saved.

The replication task can create these objects directly, or the corresponding tables are created via separate artifacts. In addition, further detailed properties like partitions, filters, or mappings can be defined for each object.

Figure 12 – Creating a replication task

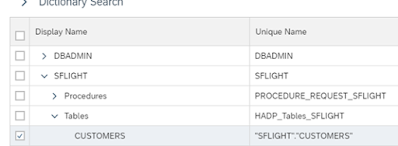

In the replication task itself, we first connect to the source system MYSELF. Then, we can create new objects using the “+” sign. We choose the CUSTOMERS table in the corresponding menu.

Figure 13 – Selecting the table

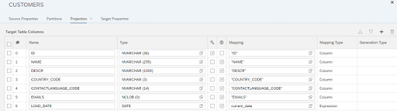

We want the replication task to create the required virtual table and target table. We can define the corresponding prefixes for the tables.

Figure 14 – Entering the prefix

We create a new column “LOAD_DATE” in the mapping rules, where the current date will be stored.

Figure 15 – Mapping

In addition, we can define the settings with which the target table should be loaded under the target properties. We can determine whether deleted columns should be considered, and whether the new data sets should be added via an Insert, Update or Upsert. Now the replication task is ready for deployment, and can then be executed directly. There is a task monitor in the context menu for checking the task.

Figure 16 – Executing the replication task

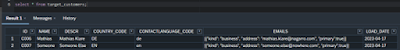

After the successful execution, we can see that the two data sets from the created CUSTOMERS table were successfully loaded.

Figure 17 – Testing new data sets

Adjusting the customer

In our scenario, we would like to change our existing CUSTOMERS table so that the information is historized. CAP and CDS help us to do so, once again, with pre-made aspects. We can easily add the aspect “temporal” to our table.

Figure 18 – Adding temporal aspect

These changes automatically insert “VALIDFROM” and “VALIDTO” columns and expand the key with “VALIDFROM.” For our scenario with the test files to continue working, corresponding fields also need to be added to the test data.

Figure 19 – Adjusting the test files

Creating flowgraphs

A flowgraph can be used for loading within the HDI container. We create a “flowgraphs” folder under “db” as usual. There, we use the wizard to create the flowgraph “FG_BT_CUSTOMERS.”

Flowgraphs always consist of at least one source and at least one target. Tasks like projections, filters, joins, table comparisons, or historization can be carried out easily via pre-defined standard nodes.

Figure 20 – Basic nodes

However, we should note that the functions of some nodes are restricted, and they don’t offer much flexibility. You cannot use the nodes types arbitrarily. Procedure nodes cannot, for instance, be used behind “Table Comparison” nodes.

Because of this, in complicated cases it may be necessary to write procedures and write comparisons between tables and inserting data using SQL commands like “MERGE,” “INSERT” or “UPDATE.” Two tables (our Billing Header and Items) can be joined and a new table written, for instance, as an example of a flowgraph.

Figure 21 – Example flowgraph

In our specific example, however, we want to write from our replicated table TARGET_CUSTOMER to our historized customer table. Overall, our flowgraph will have the following format.

Figure 22 – Customer flowgraph

As you can see, we use the standard nodes for comparing the data and creating the history. These can compare the corresponding data sets using the key and attributes and construct the history, e.g., the old data sets are completed and new data sets are inserted with corresponding validity.

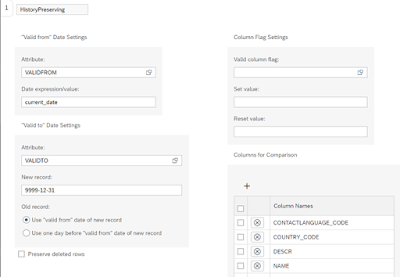

The tables to be compared and the attributes to be used for this purpose are entered intuitively in TableComparison nodes. Unfortunately, it is not currently possible to compare NCLOB columns, so we cannot use our email column. A range of settings can be completed in the HistoryPreserving nodes.

Figure 23 – Entering historization

After entering the time columns “VALIDFROM” and “VALIDTO,” we can indicate how these are to be filled out for each data set. Another flag can be added if needed to directly identify the current data set.

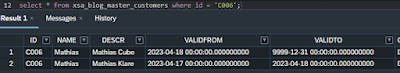

If we execute the flowgraph according to the build, the two data sets with corresponding validity are simply inserted first. We can either directly use the function in the BAS to execute them, or use the SQL statement “START TASK FG_BT_CUSTOMERS.”

Now, however, we are changing a data set in the source table using an UPDATE statement. We change the description of a customer from “Mathias Klare” to “Mathias Cube.”

Then we execute the replication task and the flowgraph. Now, we see the desired result in the target. The validity of the existing customer was ended, and a new data set was inserted.

Figure 24 – Historicized data set

It is also possible to load data between two HDI containers. However, here we need additional objects so the isolated HDI containers recognize one another.

No comments:

Post a Comment