1. What is HANA?

SAP HANA (High-performance Analytic Appliance) is an application that uses in-memory database technology to process massive amounts of real-time data in a short time. The in-memory computing engine allows HANA to process data stored in RAM instead of reading it from disk.

HANA is the first system to let you perform real-time online analytical processing (OLAP) on an online transactional processing (OLTP) data structure.

2. Difference between SAP Suite on HANA and SAP S/4 HANA?

SAP Suite on HANA (SoH)

SAP Business Suite is a whole product delivered by SAP for different industrial sectors (ECC is one of the components of SAP Business Suite). The Business Suite can run on HANA or on any other database like DB2, DB6, MSSQL etc.; when it runs on HANA, it is called SAP Suite on HANA. Don't mix up ECC with SAP Suite on HANA: ECC is only one part of the suite.

SAP Business Suite 4 SAP HANA (SAP S/4 HANA)

This is the SAP system in which the Business Suite has been developed and optimized for HANA. The data model has been ‘simplified’ and it works on the ‘Principle of One’. At a high level, we can say that the core tables of certain business processes (MM, FI etc.) have been replaced by a single table each (MATDOC, ACDOCA etc.). SAP Business Suite 4 SAP HANA is therefore called S/4 HANA.

3. Concept of OLAP and OLTP:

(a). OLAP:

Online Analytical Processing (OLAP) is a category of software tools that provide analysis of data for business decisions. Here the primary objective is data analysis, not data processing.

For example: any analytical report used for stock analysis.

(b). OLTP:

Online Transaction Processing (OLTP) supports transaction-oriented applications (WRITE operations) in a 3-tier architecture. The primary objective is data processing, not data analysis.

Example: saving or modifying data, such as creating or changing a sales order.
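The difference between the two workloads can be sketched in a few lines. This is only an illustration using Python's built-in `sqlite3` module as a stand-in database; the table `sales_order` and its columns are hypothetical and not an SAP table.

```python
import sqlite3

# Hypothetical sales-order table, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_order (id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
)

# OLTP-style work: small write transactions that touch single rows.
with conn:  # sales order creation
    conn.execute("INSERT INTO sales_order (country, amount) VALUES (?, ?)", ("India", 100.0))
    conn.execute("INSERT INTO sales_order (country, amount) VALUES (?, ?)", ("Germany", 250.0))
    conn.execute("INSERT INTO sales_order (country, amount) VALUES (?, ?)", ("India", 50.0))
with conn:  # sales order change
    conn.execute("UPDATE sales_order SET amount = ? WHERE id = ?", (120.0, 1))

# OLAP-style work: a read-only aggregate across many rows.
totals = dict(
    conn.execute("SELECT country, SUM(amount) FROM sales_order GROUP BY country").fetchall()
)
print(totals)
```

The write statements each touch one row, while the final query reads the whole table to produce an analysis result.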

4. Evolution of SAP HANA / Need for SAP HANA:

Part 1:

1. Traditional database systems, for example Oracle, DB2, DB6, Sybase and MSSQL, were write-optimized (OLTP) but not read-optimized (OLAP).

2. The reason for the low read performance is that all the data resides on disk storage. ABAP reports had to read the data from disk storage, load it into RAM, and only then could the CPU compute the required results.

3. Here 90% of the time is consumed in reading and fetching the data from the database.

4. Also, here OLAP and OLTP are not simultaneously real-time.

5. This is because, once an ABAP report has fetched data from the database and passed it to RAM, there is a high possibility that another write operation has happened in the database in the meantime. So the data the report works on is not real time.

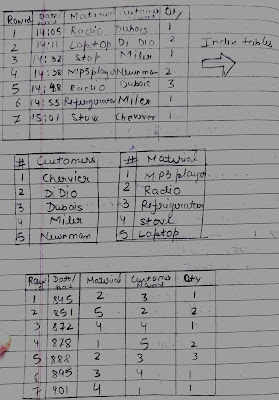

This can be explained with the help of the below diagram:

Part 2:

1. After that, SAP came up with the concept of Business Warehouse (BW) systems, which are basically OLAP systems. Such a system has better read performance, but it is again near real time, not actually real time.

The below diagram explains the above concept:

This system again has some flaws, such as data redundancy, higher hardware cost and low performance.

Part 3:

1. So SAP then came up with the HANA database. Here the whole database, which used to sit on disk, resides in main memory (the RAM).

2. So the time for fetching and reading is greatly reduced, since processing data in RAM is much faster than processing data on disk.

3. Also, this is the first system where both OLAP and OLTP transactions are real time.

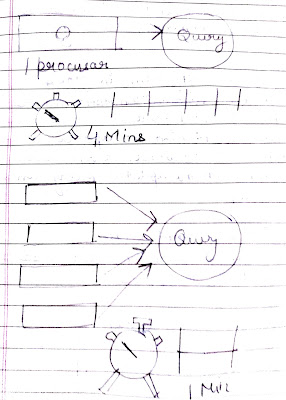

This below diagram explains the above concept:

5. Unique Functionalities of SAP HANA:

- In-memory computation

- Columnar database

- Insert only approach

- Code push down

- Data compression

- Massive parallel processing of data

1. In-Memory Computation:

In-memory computation means the whole computation takes place inside the primary memory (the RAM). This is possible because the data that used to sit on disk now resides in RAM, which makes the computation much faster.

2. Columnar database:

2(a). Row based storage:

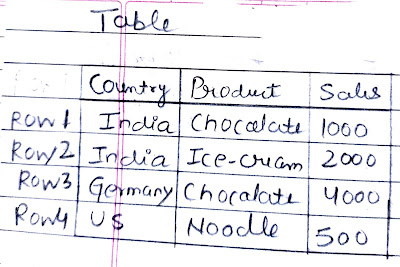

All our traditional database systems like Oracle, DB2, DB6, Sybase etc. used to store the data of tables in the form of row storage.

This means starting from first record of the table, we will traverse from left to right.

Consider the below example for row-based storage:

Pros of row-based storage:

1. Record data is stored together, i.e. all the field values of a row are stored together.

2. Easy to insert/update, i.e. row-based storage favours OLTP transactions because the system goes directly to the end of the memory area and inserts the new record there.

Cons of row-based storage:

1. All the data must be traversed during selection, even if only a few columns are involved in the selection process. This means tables in row-based storage are not favourable for read operations (OLAP transactions), because row-based storage is searched sequentially. For example, if we want to know the total sales of the country India, the query would be SELECT sales FROM "table name" WHERE country = 'India'. The query traverses the entire memory block, even if all the matching records sit in the first two blocks.
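The sequential scan described above can be sketched as follows. This is a simplified model, not how any real database lays out memory; the four-column table and the `values_touched` counter are assumptions made for illustration.

```python
# Row store sketch: the fields of each record sit next to each other.
# Hypothetical table with columns (order_id, country, product, sales).
row_store = [
    (1, "India", "Laptop", 500),
    (2, "Germany", "Phone", 300),
    (3, "India", "Tablet", 200),
    (4, "USA", "Laptop", 700),
]

def total_sales_row_store(rows, country):
    """Sum sales for one country and count how many field values were passed over."""
    total = 0
    values_touched = 0
    for record in rows:                 # every record is visited
        values_touched += len(record)   # all fields of the record are loaded
        if record[1] == country:
            total += record[3]
    return total, values_touched

row_total, row_touched = total_sales_row_store(row_store, "India")
print(row_total, row_touched)
```

Although the query needs only two of the four columns, the scan passes over every field of every record.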

2(b). Column based storage:

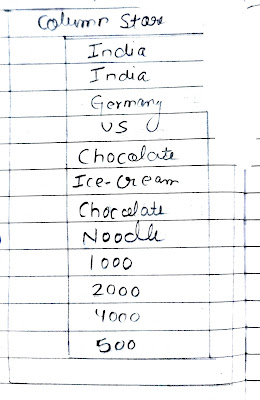

In column-based storage starting from first field, we will traverse from top to bottom.

Consider the below example for columnar-based storage:

Pros of column-based storage:

1. Only the affected columns must be read (traversed) during the selection process of a query, because every column can serve as an index. Internally, this works as an index search.

The system keeps a record of the starting address and ending address of each column. So, for the same query, it will fetch the matching positions (index1 and index2) from the country column and map them to the projection column, i.e. sales, using those positions.
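A minimal sketch of this two-step lookup, assuming a hypothetical four-row table held as one Python list per column. Real column stores are far more elaborate; the point here is only that two columns are read instead of all of them.

```python
# Column store sketch: each column is stored as its own contiguous list.
# Hypothetical table with columns (order_id, country, product, sales).
column_store = {
    "order_id": [1, 2, 3, 4],
    "country": ["India", "Germany", "India", "USA"],
    "product": ["Laptop", "Phone", "Tablet", "Laptop"],
    "sales": [500, 300, 200, 700],
}

def total_sales_column_store(columns, country):
    """Sum sales for one country by reading only the country and sales columns."""
    # Step 1: scan only the country column and note the matching row positions.
    positions = [i for i, value in enumerate(columns["country"]) if value == country]
    # Step 2: use those positions to pick values from the projection column (sales).
    total = sum(columns["sales"][i] for i in positions)
    values_touched = len(columns["country"]) + len(positions)
    return total, positions, values_touched

col_total, col_positions, col_touched = total_sales_column_store(column_store, "India")
print(col_total, col_positions, col_touched)
```

The `order_id` and `product` columns are never read, which is why the number of values touched stays small.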

Cons of column-based storage:

1. Inserts and updates are not easy.

2. After selection, selected rows (or values) must be reconstructed from columns.

What are the recommendations for using the column/row store in SAP HANA?

The default storage type for tables in SAP HANA is the column store. Tables should be in column store unless there are important reasons for putting them into the row store.

Reasons why a table could be in the row store:

◉ Very high number of single updates/inserts/deletes, for example table NRIV (number range intervals).

Reasons why a table must be in the column store:

◉ Table is used in an analytical context (e.g. it contains business data).

◉ Table has many rows (rule of thumb: more than 100,000), because the column store compresses better.

3. Insert only strategy in HANA:

Insert only strategy in HANA means that when an existing row is updated, the row is invalidated rather than changed in place. Instead, a new row with the updated entry and a timestamp is inserted (appended).

Advantages:

1. Helps in fast read access (OLAP).

2. Does not require reordering and resorting.

3. Less load on the system.
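The insert-only idea can be sketched with a toy table. The class `InsertOnlyTable`, its `valid` flag and its counter-based timestamp are hypothetical and only mimic the behaviour described above; they do not reflect HANA internals.

```python
import itertools

class InsertOnlyTable:
    """Toy insert-only table: an update appends a new version instead of overwriting."""

    def __init__(self):
        self.rows = []                    # append-only list of row versions
        self._clock = itertools.count(1)  # stand-in for a timestamp

    def insert(self, key, value):
        self.rows.append(
            {"key": key, "value": value, "ts": next(self._clock), "valid": True}
        )

    def update(self, key, value):
        # Invalidate the current version; its stored value is left untouched.
        for row in self.rows:
            if row["key"] == key and row["valid"]:
                row["valid"] = False
        # Append the new version with a later timestamp.
        self.insert(key, value)

    def read(self, key):
        # Return the value of the newest valid version, if any.
        for row in reversed(self.rows):
            if row["key"] == key and row["valid"]:
                return row["value"]
        return None

table = InsertOnlyTable()
table.insert("order-1", 100)
table.update("order-1", 120)
print(table.read("order-1"), len(table.rows))
```

After the update the table holds two versions of the row: the old one is kept but marked invalid, and reads return the newest valid version.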

4. Code push down:

Traditionally, we select (fetch) data from the database layer into the application layer and then do the data-intensive calculations on the application server.

But with the HANA database, SAP suggests performing data-intensive calculations in the database layer itself. This is because the whole database now resides in RAM and processing the data in RAM is much faster.
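The two styles can be contrasted in a short sketch. It uses Python's built-in `sqlite3` module as a stand-in for the database layer, and the `sales` table is hypothetical; in an ABAP system the same idea would be expressed with Open SQL, CDS views or AMDP.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (country TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("India", 500), ("Germany", 300), ("India", 200), ("USA", 700)],
)

# Data-to-code: fetch every row into the application, then calculate there.
rows = conn.execute("SELECT country, amount FROM sales").fetchall()
app_total = sum(amount for country, amount in rows if country == "India")

# Code-to-data (push down): the database filters and aggregates,
# and only a single value travels back to the application.
(db_total,) = conn.execute(
    "SELECT SUM(amount) FROM sales WHERE country = ?", ("India",)
).fetchone()

print(app_total, db_total, len(rows))
```

Both variants give the same total, but the first one transfers all four rows to the application while the second transfers one number.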

5. Data compression:

Data compression aims at reducing memory consumption. In dictionary compression, the distinct values of each column (field) are mapped to consecutive numbers, so that instead of the actual values, the typically much smaller numbers are stored. This helps to store the same data in relatively less memory.
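A minimal sketch of dictionary compression for a single column. The function names and the choice to sort the dictionary are assumptions for illustration, not a description of HANA's internal format.

```python
def dictionary_encode(column):
    """Map each distinct value to a consecutive number and encode the column."""
    dictionary = sorted(set(column))  # distinct values, one entry each
    value_to_id = {value: i for i, value in enumerate(dictionary)}
    encoded = [value_to_id[value] for value in column]
    return dictionary, encoded

def dictionary_decode(dictionary, encoded):
    """Rebuild the original column from the dictionary and the numbers."""
    return [dictionary[i] for i in encoded]

country_column = ["India", "Germany", "India", "USA", "India", "Germany"]
dictionary, encoded = dictionary_encode(country_column)
print(dictionary)
print(encoded)
```

Each country name is stored once in the dictionary, and the column itself holds only small integers, which is where the memory saving comes from when values repeat often.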

6. Massive parallel processing of data:

This aims at reducing run time. In this concept, a piece of work (for example a search query) is divided among multiple cores of the CPU so that the run time of that piece of work is minimized.
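The split-and-merge pattern behind this can be sketched as below. The helper names are hypothetical, and the sketch uses a thread pool only to show how work is partitioned and recombined; in CPython, threads do not actually speed up CPU-bound work, so this illustrates the structure rather than the performance gain.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum(chunk):
    """Work done by one worker on its own partition of the data."""
    return sum(chunk)

def parallel_sum(values, workers=4):
    """Split the values into chunks, aggregate each chunk separately, then merge."""
    size = max(1, -(-len(values) // workers))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    return sum(partials), len(chunks)

sales = list(range(1, 101))
total, chunk_count = parallel_sum(sales, workers=4)
print(total, chunk_count)
```

Each worker sees only its own partition, and the partial results are combined in a final, cheap merge step.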

6. Code push down and its techniques:

The code-to-data paradigm can be applied at three levels in SAP HANA, each with an increasing level of code complexity and performance:

1. Transparent optimizations: Fast data access, Table buffer enhancements.

2. Advanced SQL in ABAP: Open SQL enhancement, CDS Views

3. SAP HANA Native Features: AMDP, Native SQL.

Bottom-up approach for development:

In the bottom-up approach, we develop HANA views and database procedures in the HANA database itself and then consume these DB-specific artefacts in the ABAP layer (application server) using remote proxies.

This approach would obviously be faster since we are creating these artefacts in the database layer itself, but there are a few drawbacks:

1. As ABAP developers, we must work in two environments: HANA DB, where we create the DB artefacts, and ABAP, where we consume those artefacts as remote proxies. This also leads to data replication.

2. We will have to bear the responsibility of keeping our HANA and ABAP artefacts in sync and take care of the life cycle management.

Top-down approach for development:

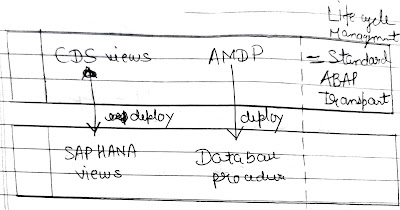

In the top-down approach, we develop HANA-based ABAP artefacts such as CDS views and AMDP on the application layer itself, and activating them automatically creates a HANA (database) view on the HANA server.

The main advantage of the top-down approach is that only the corresponding HANA-based ABAP artefacts need to be transported by the standard transport system. No HANA delivery unit is required.
