The process of gathering data from numerous sources is an essential prerequisite for organizations to carry out advanced analytics, data science, and machine learning. However, ingesting and consolidating this data to establish a trustworthy, unified data repository is an arduous and expensive task.

In this blog post, I will demonstrate the process of ingesting data from the SAP HANA Cloud database by establishing a connection with HANA DB using the Python Database API Specification. We will then explore how to seamlessly integrate this data into Databricks Delta Lake. Finally, I will guide you through visualizing the ingested data in Tableau by establishing ODBC connectivity between Databricks and the Tableau tool.

By following this step-by-step guide, you will gain a clear understanding of how to efficiently import and visualize data from SAP HANA Cloud within the Databricks and Tableau environment.

This can be attained by progressing through three distinct phases:

1. Set up tables and import data into the SAP HANA Cloud Platform.

2. Ingest data from the SAP HANA Cloud into the Databricks Delta Lake database.

3. Utilize the Tableau tool to visualize the ingested data.

Phase 1: Set up tables and import data into the SAP HANA Cloud Platform.

In this step you will be able to effectively create tables in SAP HANA Cloud database based on Excel data and successfully import that data into the HANA Tables.

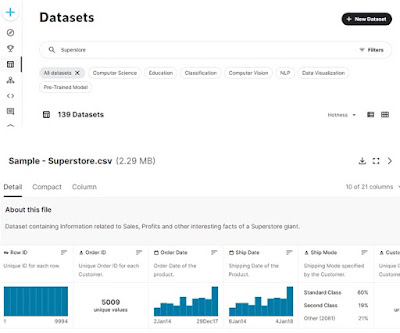

To accomplish this, I have utilized the sample Superstore Dataset available on the portal (https://www.kaggle.com) which is commonly used for data analysis purposes.

◉ Sample dataset:

Download and save the dataset to your local PC and proceed with next steps.

Import Data from excel to HANA Database:

We aim to import data into the HANA system from an Excel (.csv), which is the most efficient manual approach for loading data into the HANA system.

Requirement: The objective is to manually upload data into SAP HANA from either a flat file or an Excel document.

Prerequisite: You must have a database user with the IMPORT system privilege, and INSERT privilege needs to be granted for the target schema.

By fulfilling these requirements and prerequisites, you can proceed with the manual data upload process, allowing you to transfer data from external sources to the SAP HANA system efficiently.

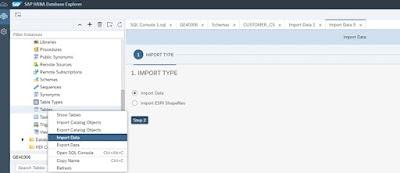

◉ Open SAP HANA DB Studio > Navigate to Tables > Right click and choose “Import Data”

◉ Select “Data from Local File” and click “Next Step”

◉ Fill all the required information and click Next Step

◉ Map the fields

◉ Click “Review”

◉ Click “Import Into Database”

- To view the imported data follow the below steps:

- In the Database Explorer tab, expand to Catalog and click on Tables.

- Right click any of the tables that appear below, and select Open Data.

- You should be able to see that the table is not empty and that the table has been successfully imported.

Phase 2: Ingest data from the SAP HANA Cloud into the Databricks Delta Lake database

Introduction to the Lakehouse Platform:

A Lakehouse platform is an innovative Data Management architecture that provides a unified environment for various workloads such as Business Intelligence (BI), SQL Analytics, Data Science, and Machine Learning. This platform combines the best of data lakes and data warehouses, allowing users to leverage its unique capabilities. By adopting a Lakehouse architecture, organizations can benefit from the following advantages:

1. Enables Direct Data Access across SAP and Non-SAP sources

2. Simplifies data governance

3. Removes Data Redundancy

4. Direct Connectivity to BI Tools

5. Simplified security with a single source of truth

6. Support for open source & commercial tooling

7. Operating on multi-cloud environments and many more.

Databricks is a leading example of a Lakehouse platform that embraces a unified approach. It integrates diverse workloads such as data engineering, analytics, data science, and machine learning. Databricks prides itself on its simplicity, openness, and multi-cloud capabilities. While future blogs will explore the specific features and capabilities of the Databricks Lakehouse platform, our focus will now shift to a data ingestion scenario involving the access of data from SAP HANA Cloud database into Databricks Lakehouse storage using the Python Database API Specification.

Access to the SAP HANA database is made available through connection objects. The module must provide the following constructor for these:

Connecting SAP HANA DB using ‘hdbcli’ SAP HANA Python Client:

The SAP HANA client extends support to Python versions 3.4 and above, as well as Python 2.7. It aligns with the Python Database API Specification v2.0 (PEP 249), which establishes a standardized set of methods to ensure a uniform interface for interacting with databases, irrespective of the specific database in use. To adhere to these specifications, the Python extension module for SAP HANA effectively implements PEP 249, enabling seamless integration and interaction between Python applications and SAP HANA databases.

Access to the SAP HANA database is made available through connection objects. The module must provide the following constructor for these:

Host: Specify the hostname or IP address of the SAP HANA Database server.

Port: Indicate the port number through which the database can be accessed.

User: Provide the username or user ID for authentication purposes.

Password: Enter the corresponding password associated with the provided user.

By supplying these parameters correctly, you can establish a successful connection to the SAP HANA Database, enabling you to interact with and manipulate the data stored within it.

- For HANA tenant databases, use the port number 3**NN**13 (where NN is the SAP instance number – e.g. 30013).

- For HANA system databases in a multitenant system, the port number is 3**NN**13.

- For HANA single-tenant databases, the port number is 3**NN**15.

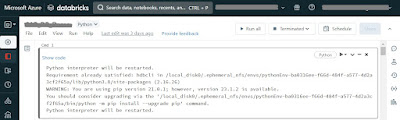

Next step is to load and transform data using the DataFrame API in Databricks, the Apache Spark Python (PySpark) framework comes into play.

By leveraging the PySpark DataFrame API in Databricks, you can perform various data operations, including loading and transforming data. This powerful API allows you to seamlessly read data from diverse sources, such as databases, CSV files, JSON files, and more. You can apply transformations like filtering, aggregating, joining, and sorting to manipulate the data according to your requirements.

Preview and compare the data both in Databricks & SAP HANA

Data preview in Databricks

Data preview in SAP HANA

Phase 3: Utilize the Tableau tool to visualize the ingested data.

In this phase, we will delve into the procedure of establishing a seamless connection between Tableau and a Databricks database. This connection will empower you to effortlessly configure the data source and construct a dynamic dashboard using the available data.

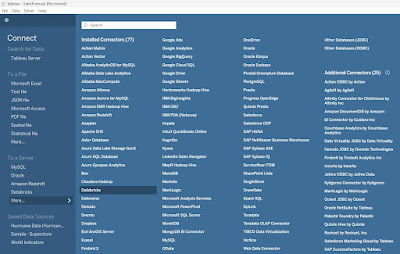

Connect Databricks database/table to Tableau Desktop.

Follow these instructions to connect to a cluster or SQL warehouse with Tableau Desktop.

1. Start Tableau Desktop.

2. Click File > New.

3. On the Data tab, click Connect to Data.

4. In the list of connectors, click Databricks.

To establish a connection with a Databricks using Tableau Desktop, Databricks driver is required.

1. Ensure that you have the necessary driver to communicate with the database. It’s possible that the required driver is already installed on your computer.

2. If the driver is not installed, Tableau will provide a message in the connection dialog box. This message will contain a link to the Driver Download page (opens in a new window), where you can find the relevant driver links and installation instructions.

After successfully installing the driver, you can utilize the following methods to connect to Databricks and select the specific database/tables that you intend to utilize for constructing the dashboard.

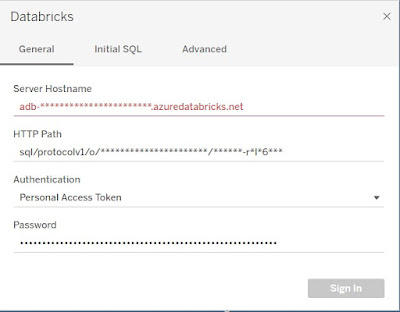

1. Enter the Server Hostname and HTTP Path.

2. For Authentication, choose your authentication method, enter your authentication credentials, and then click Sign in.

◉ To use a Databricks personal access token, select Personal Access Token and enter your personal access token for Password.

◉ To use a Databricks username and password, select Username / Password and enter your username for Username and your password for Password.

◉ OAuth/Azure AD. For OAuth endpoint, enter your OAuth endpoint. The format is https://<Serverhostname>/oidc. For example, https://example.cloud.databricks.com/oidc.

Preview of Tableau Dashboard (Demand forecast example using the sample sales data)

Up to this point, our focus has been on acquiring valuable skills in efficiently importing and visualizing data from SAP HANA Cloud within the Databricks and Tableau ecosystem.

No comments:

Post a Comment