Introduction

Jupyter Notebook or R are often the tools of choice for data scientists. It makes using data operations, data exploration and sharing convenient. SAP HANA is the database of choice for the most important businesses around the globe. Connecting both worlds, Jupyter Notebook and SAP HANA provides an incredible potential which needs to be seized.

SAP HANA

Jürgen Müller already provided a great blogpost about SAP HANA. I want to shortly formulate what SAP HANA is also with my own words. SAP HANA is an in-memory database which provides a lot of benefits, especially for analytical use cases. It connects different aspects of databases within one database. Besides typical properties of relational databases, it also delivers properties of NoSQL databases, like column-based databases. Depending on use-case, it is possible to activate or deactivate specific properties so that you can get the best performance out of your system.

SAP HANA is the database for SAP applications. SAP S/4HANA also uses SAP HANA in background. In SAP Business Technology Platform (BTP) SAP HANA is the database of choice for persisting data. With help of tools like CAP CDS it is convenient to do data modelling and build services. Also products like SAP Data Warehouse Cloud and SAP Analytics Cloud use SAP HANA as database.

Jupyter Notebook

Example of a Notebook

Jupyter Notebook, along with the R programming language, is the tool of choice when it comes to tackling data science challenges. In Jupyter Notebook, Pandas DataFrames are often used. Jupyter Notebook actually just provides the graphical web interface, which makes interactive programming in python possible. The actual libraries are Pandas, Numpy and Matplotlib. In Pandas there is a popular class called “DataFrame” which fits the needs data scientists often have in their work. These three python libraries have good integration with each other and provide a powerful tool.

DBeaver

I use DBeaver as my graphical user interface for databases. It helps investigating the raw data and run SQL statements to generate specific outputs. DBeaver is comparable with phpmyadmin, MySQL Workbench or pgAdmin.

DBeaver provides also connectivity with SAP HANA. Connecting to a SAP HANA database is simply selecting the SAP HANA tile and entering your credentials.

Example

Let’s start with the demo! I want to introduce the solution by providing a sample. If you have an existing SAP HANA instance with credentials, Jupyter Notebook on your computer and DBeaver, you can clone my repository, execute the notebook and do the same steps as I do here.

Sample Dataset

The dataset I use for this demo is the population data of Mannheim. Mannheim is the city where I am from, and the city provides some of its data in its own Open Data Portal. This dataset is licensed under dl-de/by-2-0. This means it can be used commercially as well as noncommercial as long as you link to the license text and data-source.

I downloaded and used the CSV source of this dataset. It is also contained in the git repository.

Load Data as DataFrame

The power of Jupyter Notebook becomes evident, when you want to load such datasets. The library “Pandas” provides very good interfaces to accomplish this. Reading data from machine-readable file formats like XML, JSON or CSV is a one-liner.

Here you can see in the first command block the declaration of the dependencies. In the second block the CSV file is read into a DataFrame. In the third block a part of the data set is output, so that you can check if everything is correct.

Data Processing

Now the data exists within the DataFrame. It’s so easy to do some data exploration. What value can we derive out of the data? Pandas DataFrames have interfaces for communicating with other well known data-science libraries like numpy and matplotlib. Merge, split, cleanup, connect with other datasets and do machine learning! Creativity is the limit for things to do at this step.

Persist Data in SAP HANA

Now comes the exciting part. I have to admit, I experimented around for a while until I figured out how it works. SAP HANA has security requirements that necessitate certain parameters. That’s also the real reason I’m sharing this post: In case others are working on a similar problem, you can use this example to get your quickstart.

My requirement was to use the “to_sql” interface provided by DataFrame to persist the dataset. This way you don’t have to worry about the data types and you get to the target faster.

For seamless integration the libraries “hdbcli” and “sqlalchemy” are needed. You can install them with the Python package manager pip. You need to add the credentials of your HANA instance into the according variables. Important: Do not push your credentials in git repositories. You need the port, address, user and password. The connection does not work with HANA without SSL. This means that one must also pass the SSL arguments along with the connection information. This happens in this example via the sqlalchemy library.

You create a connection and pass this to the “to_sql” command. There is a warning message which comes from sqlalchemy. This can be ignored. That’s it! It is this simple to persist a DataFrame in SAP HANA.

Check persisted data

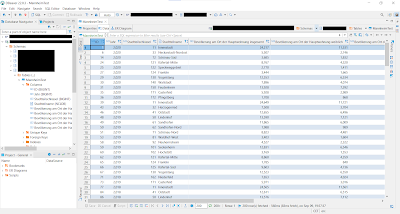

Now let us look with DBeaver, whether everything was persisted correctly. I open the according connection’s table.

Voila! Hereby we could confirm that everything worked fine.

Next Steps

What I have presented here is of course only the prerequisite for many other potential use cases. For fun, I persisted the table created in the above example to the HANA instance of an SAP Data Warehouse Cloud instance and built a small data pipeline.

The use case I needed this solution for is a data pipeline where I couldn’t automate the data source to SAP Data Warehouse Cloud (DWC) because it was sitting around in multiple Excel sheets and the data was changing regularly. Each time I run the Notebook, my local table in SAP DWC is updated, making this a semi-automated solution for former local datasets (e.g. in Excel, CSV). Highly relevant if you are working in a transformation project.

Graphical View in SAP Data Warehouse Cloud

Using the notebook, I perform the merge of several Excel files from my file system and persist them in the DWC HANA table. In DWC I can perform further operations using the Graphical Views or Data Flows.

Story in SAP Analytics Cloud

Finally here I visualized Mannheims population data in SAP Analytics Cloud using existing live connectors. This demonstrates a data-science use case based on sample data. I don’t know if I will write a separate post for this, to go more into detail. But for now, that’s it!

Conclusion

The ability to perform notebook operations on SAP HANA with Jupyter Notebook is a powerful tool, which has potential. In the context of data-science, for me it seems this potential is not explored sufficiently

No comments:

Post a Comment