Brief

When we start operating an SAP Business One on HANA 10 environment, we sometimes find that SAP HANA Cockpit cannot be installed to administer the HANA 2.0 database, or simply that it "is not budgeted" in the scope of the project.

Because of the nature of this type of project and the profile of some clients, they often lack the infrastructure and the qualified technical staff needed to administer HANA as it "should be" done.

This happens mainly in On-Premise environments, where the customer has to ensure the security and integrity of their data, regardless of whether the solution runs on a hyperscaler or not.

Most hyperscalers offer replication, data protection and similar services to safeguard the data of the products we run on them, but customers with a "very small" profile often do not choose these services.

These clients have far fewer staff available to carry out the minimum backup and administration policies that a HANA-based system requires, so, once the installation is finished, we need some way to keep the system operational in the face of unexpected events during its life cycle.

A quick way to do this, with few resources involved, is to implement scripts that run the backups on a schedule, delete the old ones and copy them to another location outside the Linux server.

Note: There are a thousand ways to carry out this "administrative task" and guarantee the minimum backup policies as cheaply as possible (and, to be clear, the best one is to use HANA Cockpit). This is just one more option that technical consultants who implement SAP Business One, or who have to manage solutions of this type, can use.

Let’s start with this solution:

1. Make sure the system is correctly installed and running without problems (it may be obvious, but it is worth checking before starting this task).

2. Once all the basics for running B1 are in place, we can continue with the following steps. Running a first full data backup of the system database and of the tenant is key, because HANA only starts taking log backups after the first full data backup.

3. Check that the HANA installation file systems have been set up and mounted correctly.

4. Create a drive (a disk unit or a mapped drive) on a Windows machine that can reach the Linux server where HANA is installed. We will replicate the backups taken on Linux to this drive so that, if we lose the Linux instance, we do not lose all the system information. The drive should be at least 5 to 10 times the size of our full HANA backup, so there is room for several days of backups and thus minimal data protection. We will call this drive SAPBackups:

5. Create a folder under /mnt to serve as the mount point (we could call it SAPBK, that is, /mnt/SAPBK).

6. Once the drive has been created in Windows, it must be "mounted" on Linux. To do this, we first create a local user on the Windows instance, which we will call "montaje", with a specific password and the following permissions. Something like this:

7. Edit the /etc/fstab file with vim and add the line needed to mount this shared disk so that it is accessible from Linux. An example that works would be this:

//EC2AMAZ-XXXXX/BackupsB1 /mnt/SAPBK cifs user=montaje,pass=123XXX,rw,users 0 0
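The line above stores the Windows password in /etc/fstab, which is normally readable by every user on the server. A possible alternative is the mount.cifs `credentials=` option, which points to a root-only file with `username=` and `password=` lines. The sketch below writes such a file. The helper name `write_cifs_credentials` and the path `/root/.sapbk-cred` are hypothetical choices for illustration, not part of the original setup.

```shell
#!/bin/sh
# Sketch: keep the CIFS password out of /etc/fstab by writing a
# credentials file for mount.cifs ("credentials=" option).
# Helper name and file path are hypothetical examples.

write_cifs_credentials() {
    cred_file=$1
    cred_user=$2
    cred_pass=$3
    # Create the file under a restrictive umask (in a subshell, so the
    # caller's umask is untouched), then force mode 600 in case it existed
    (
        umask 077
        printf 'username=%s\npassword=%s\n' "$cred_user" "$cred_pass" > "$cred_file"
    )
    chmod 600 "$cred_file"
}

# Example, as root:
#   write_cifs_credentials /root/.sapbk-cred montaje '123XXX'
# and then, in /etc/fstab, instead of user=/pass=:
#   //EC2AMAZ-XXXXX/BackupsB1 /mnt/SAPBK cifs credentials=/root/.sapbk-cred,rw,users 0 0
```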

Running cat /etc/fstab should then return something like this:

8. When we run mount, the share should appear as a file system:

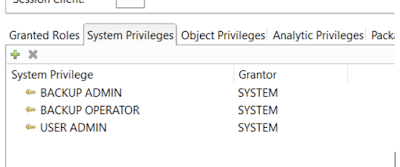

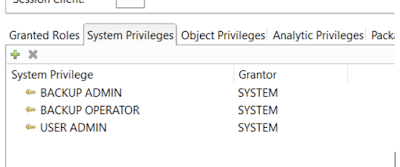
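It is worth having the sync scripts check this same condition before copying. If the CIFS share is not mounted (for example, after a reboot while the Windows machine was down), /mnt/SAPBK is just an empty local folder, and rsync would copy the whole backup tree onto the Linux disk. The following is a minimal sketch of such a guard, assuming a Linux server (it reads /proc/mounts). `is_mounted` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed only if the given path is an active mount point.
# Field 2 of each /proc/mounts line is the mount point (Linux only).

is_mounted() {
    awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' /proc/mounts
}

# Example, at the top of ZSYNCLOGS.sh and ZSYNC-CLEAN.sh:
#   is_mounted /mnt/SAPBK || { echo "/mnt/SAPBK is not mounted" >&2; exit 1; }
```

Exiting with an error instead of copying means cron reports the problem, while the local backups stay untouched.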

9. Next, go to HANA Studio and create the user that will perform the scheduled backups. We will call this user ZBACKUP. Since the backup script connects to both SYSTEMDB and the NDB tenant, the user must exist in both databases, and it will have these privileges:

10. As root, we will create 2 scripts that take care of the backup "files" to avoid the infamous "disk full" situation and the other problems that arise when the customer does not have, or does not follow, a "good" backup strategy.

The scripts do the following:

a. We will call the first script ZSYNCLOGS.sh. It is scheduled in cron every 15 minutes between 5:00 a.m. and 11:45 p.m. (the data backups run at night) and copies the backup files, normally just the recent log backups, to the Windows share. Old files are not erased here. A second script takes care of the cleanup. That script is also scheduled with root, and it synchronizes the data backup created by the HANA backup script. The --delete option is included in ZSYNCLOGS.sh so that files removed on the Linux side are also removed from the destination.

b. We will call the second script ZSYNC-CLEAN.sh. It cleans the backups of SYSTEMDB and of the NDB tenant (in this case the HANA SID is NDB), together with their log backups. We also schedule it in the root crontab, since it synchronizes the data backup made by the HANA backup script. Depending on the space in /hana/shared and the size of each set of backup files, we keep n days of backups on the Linux server (3 days in our case).

ZSYNCLOGS.sh

#!/bin/sh

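# Copy the local backup tree to the Windows share; --delete also removes files that no longer exist locally
rsync -avu --delete "/hana/shared/NDB/HDB00/backup" "/mnt/SAPBK/"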

# the end

ZSYNC-CLEAN.sh

#!/bin/sh

# Local backups: delete data and log backup files that are 3 days old or more (-mtime +2)
find /hana/shared/NDB/HDB00/backup/data/DB_NDB -type f -mtime +2 -exec rm -fv {} +

find /hana/shared/NDB/HDB00/backup/data/SYSTEMDB -type f -mtime +2 -exec rm -fv {} +

find /hana/shared/NDB/HDB00/backup/log/DB_NDB -type f -mtime +2 -exec rm -fv {} +

find /hana/shared/NDB/HDB00/backup/log/SYSTEMDB -type f -mtime +2 -exec rm -fv {} +

# Copies on the Windows share: same retention
find /mnt/SAPBK/backup/data/DB_NDB -type f -mtime +2 -exec rm -fv {} +

find /mnt/SAPBK/backup/data/SYSTEMDB -type f -mtime +2 -exec rm -fv {} +

find /mnt/SAPBK/backup/log/DB_NDB -type f -mtime +2 -exec rm -fv {} +

find /mnt/SAPBK/backup/log/SYSTEMDB -type f -mtime +2 -exec rm -fv {} +

# Synchronize the remaining files, including the new data backup, to the share
rsync -avu --delete "/hana/shared/NDB/HDB00/backup" "/mnt/SAPBK"

# the end
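The eight find commands differ only in the folder, and the retention is hard-coded as `-mtime +2`. find ignores fractional days when it compares ages, so `-mtime +2` matches files that are at least 3 full days (72 hours) old. Keeping N days therefore means using `-mtime +(N-1)`. Below is a possible compact version of the same cleanup, with the retention kept in a single variable. `purge_old_backups` and `RETENTION_DAYS` are hypothetical names, and the four sub-folders are the ones used in the script above.

```shell
#!/bin/sh
# Sketch: same cleanup as ZSYNC-CLEAN.sh, retention kept in one place.
# Files at least RETENTION_DAYS full days old are deleted.

RETENTION_DAYS=3

purge_old_backups() {
    base=$1   # e.g. /hana/shared/NDB/HDB00/backup or /mnt/SAPBK/backup
    for sub in data/DB_NDB data/SYSTEMDB log/DB_NDB log/SYSTEMDB; do
        dir="$base/$sub"
        [ -d "$dir" ] || continue   # skip sets that do not exist yet
        find "$dir" -type f -mtime +"$((RETENTION_DAYS - 1))" -exec rm -fv {} +
    done
}

# Example:
#   purge_old_backups /hana/shared/NDB/HDB00/backup
#   purge_old_backups /mnt/SAPBK/backup
```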

11. As root, use crontab -e to schedule the 2 scripts created above so that they run at the selected times. These times are only a reference, because they depend on the particularities of each business. These are the lines to add:

0,15,30,45 5-23 * * * /hana/shared/NDB/HDB00/ZSYNCLOGS.sh

0 20 * * * /hana/shared/NDB/HDB00/ZSYNC-CLEAN.sh
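With this schedule both entries fire at 20:00, and both call rsync on the same folders. A 15-minute run could also still be copying a large data backup when the next one starts. One simple way to avoid overlapping runs is a lock. The sketch below uses a directory as the lock, because mkdir either creates it or fails if it already exists, in a single atomic step. `run_locked` and the lock path are hypothetical names.

```shell
#!/bin/sh
# Sketch: run a command only if no other run holds the lock directory.

run_locked() {
    lockdir=$1
    shift
    # mkdir fails if the directory already exists: another run is active
    if ! mkdir "$lockdir" 2>/dev/null; then
        echo "another sync holds $lockdir, skipping this run" >&2
        return 1
    fi
    status=0
    "$@" || status=$?
    rmdir "$lockdir"   # release the lock
    return "$status"
}

# Example, with the same lock in ZSYNCLOGS.sh and ZSYNC-CLEAN.sh:
#   run_locked /tmp/zsync.lock \
#       rsync -avu --delete "/hana/shared/NDB/HDB00/backup" "/mnt/SAPBK/"
```

If a run is killed before it finishes, the lock directory stays behind and has to be removed by hand. Where util-linux is available, `flock -n <lockfile> <command>` is an alternative that releases the lock automatically when the process ends.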

12. Now we will create the script that runs the HANA backups with the ZBACKUP user we created earlier. For this we create a script called ZBACKUP.sh, which is responsible for backing up SYSTEMDB and the NDB tenant (the tenant where SAP Business One is installed).

This script will be run by the ndbadm user (<SIDadm>), so it will be scheduled in that user's crontab. By default, the backups go to the standard path of each database, and (to avoid complications) we will leave it that way. We will therefore have 2 backup sets in these paths. This is how the script that triggers the backups looks:

ZBACKUP.sh

#!/bin/sh

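# Timestamp used as the prefix of the backup files
time="$(date +"%Y-%m-%d-%H-%M-%S")"

# Data backup of the NDB tenant (where SAP Business One is installed)
/usr/sap/NDB/HDB00/exe/hdbsql -i 00 -n localhost:30015 -d NDB -u ZBACKUP -p 12345 "backup data using file ('$time')"

# Data backup of the system database
/usr/sap/NDB/HDB00/exe/hdbsql -i 00 -n localhost:30013 -d SystemDB -u ZBACKUP -p 12345 "backup data using file ('$time')"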

# the end

The default paths are:

/hana/shared/NDB/HDB00/backup/data/DB_NDB

/hana/shared/NDB/HDB00/backup/data/SYSTEMDB
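The script above passes the ZBACKUP password with `-p`, so it shows up in the process list and in the script itself. It also gives no record of whether the nightly backup actually worked. A possible variation uses keys from the HANA secure user store (`hdbuserstore`, which stores them per OS user, here ndbadm) with `hdbsql -U`, and writes OK/FAIL lines to a log. This is a sketch under several assumptions. The key names `ZBK_NDB`/`ZBK_SYSTEMDB`, the log path and the helper name `backup_db` are hypothetical. The NDB tenant is assumed to answer on port 30015, as in the original script. The sketch also relies on hdbsql returning a non-zero exit status when the statement fails. The `HDBSQL` variable can be overridden, which makes it easy to try the logic without HANA.

```shell
#!/bin/sh
# Sketch of a ZBACKUP.sh variant without the password on the command line.
# Assumed one-time setup as ndbadm (hypothetical key names):
#   hdbuserstore SET ZBK_NDB localhost:30015 ZBACKUP <password>
#   hdbuserstore SET ZBK_SYSTEMDB localhost:30013 ZBACKUP <password>

HDBSQL=${HDBSQL:-/usr/sap/NDB/HDB00/exe/hdbsql}
LOG=${LOG:-$HOME/zbackup.log}   # hypothetical log location

# Run one data backup through a user store key and record the outcome
backup_db() {
    key=$1      # user store key, e.g. ZBK_NDB
    prefix=$2   # backup file prefix, e.g. a timestamp
    if "$HDBSQL" -U "$key" "backup data using file ('$prefix')"; then
        echo "$(date '+%Y-%m-%d %H:%M:%S') OK   $key $prefix" >> "$LOG"
    else
        echo "$(date '+%Y-%m-%d %H:%M:%S') FAIL $key $prefix" >> "$LOG"
        return 1
    fi
}

# ZBACKUP.sh would then end with:
#   prefix="$(date +%Y-%m-%d-%H-%M-%S)"
#   backup_db ZBK_NDB "$prefix"
#   backup_db ZBK_SYSTEMDB "$prefix"
```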

13. One more thing to do is to schedule the script in the ndbadm crontab (crontab -e as ndbadm), so the "automated" HANA backups are planned with this line:

0 19 * * * /hana/shared/NDB/HDB00/ZBACKUP.sh

14. The scripts must be executable by the corresponding users (root and ndbadm, respectively).

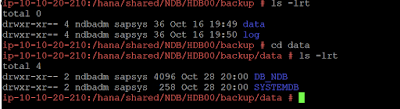

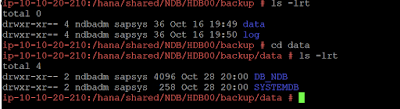

15. Backups are generated this way on Linux, for both data and log. There is always one set of folders for each tenant that exists or is being backed up:

16. Now you can see a set of backups from 3 consecutive days for the NDB tenant, executed according to the policies set in the scripts (SYSTEMDB would have the same set of files):

And the same for the logs, which are backed up every 15 minutes (the default interval HANA uses once the first complete data backup has been taken):
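Without HANA Cockpit, the backup catalog can also be checked from the command line to confirm that the scheduled data backups finished successfully. The sketch below queries the M_BACKUP_CATALOG monitoring view through hdbsql (`-x` suppresses extra output and `-a` omits column names). It assumes a secure user store key (hypothetical name, for example `ZBK_NDB`) for a user that is allowed to read the view. Depending on the privileges granted to ZBACKUP, an extra privilege may be needed. `last_data_backups` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: list the last complete data backups recorded in the catalog.

HDBSQL=${HDBSQL:-/usr/sap/NDB/HDB00/exe/hdbsql}

last_data_backups() {
    "$HDBSQL" -U "$1" -x -a \
        "select top 3 sys_start_time, state_name from m_backup_catalog where entry_type_name = 'complete data backup' order by sys_start_time desc"
}

# Example, as ndbadm; each row should show the state "successful":
#   last_data_backups ZBK_NDB
```

The catalog is kept per database, so checking SYSTEMDB needs a key that points to its port (30013 in this setup).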

17. Once the backups start, we can also see them being synchronized to the Windows drive allocated for this purpose:

With this, we will have replicated the backup sets of our HANA / SAP Business One system in at least 2 different locations. The idea is that the client can also copy these backup sets from Windows and store them in yet another location, to protect the data against unexpected events.
