In this blog, we will explore one of the latest innovations in SAP Datasphere: starting with version 2021.03, SAP Datasphere introduced the concept of ‘Replication Flows’.

Think of replication flows as your trusty sidekick in the world of data management. This feature is all about efficiency and ease: it lets you copy multiple tables from a source to a target without breaking a sweat.

The beauty of this story is that it is cloud-based all the way! Say goodbye to the hassle of installing and maintaining on-premises components such as data provisioning agents. Thanks to SAP Datasphere's cloud-based replication tool, those headaches are a thing of the past. Replication flows support the following source and target systems:

Supported Source Systems:

- SAP Datasphere (local tables)

- SAP S/4HANA: Cloud and on-premise

- SAP BW and SAP BW/4HANA

- SAP HANA: Cloud and on-premise

- SAP Business Suite

- MS Azure SQL

Supported Target Systems:

- SAP Datasphere

- SAP HANA: Cloud and on-premise

- SAP HANA Data Lake

- Google BigQuery

- Amazon S3

- Google Cloud Storage

- MS Azure Data Lake Storage

- Apache Kafka
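To check a planned flow against the two lists above, here is a minimal Python sketch that encodes them as sets. It assumes, as the lists suggest but do not guarantee, that any listed source can be paired with any listed target. The system labels and the helpers `is_supported` and `unsupported_ends` are hypothetical, and nothing here calls an SAP API.

```python
# Hypothetical lookup built from the supported-system lists in this post.
# Assumption: every listed source can be paired with every listed target.

SUPPORTED_SOURCES = frozenset({
    "SAP Datasphere (local tables)",
    "SAP S/4HANA Cloud",
    "SAP S/4HANA on-premise",
    "SAP BW",
    "SAP BW/4HANA",
    "SAP HANA Cloud",
    "SAP HANA on-premise",
    "SAP Business Suite",
    "MS Azure SQL",
})

SUPPORTED_TARGETS = frozenset({
    "SAP Datasphere",
    "SAP HANA Cloud",
    "SAP HANA on-premise",
    "SAP HANA Data Lake",
    "Google BigQuery",
    "Amazon S3",
    "Google Cloud Storage",
    "MS Azure Data Lake Storage",
    "Apache Kafka",
})


def unsupported_ends(source: str, target: str) -> list[str]:
    """Return the end(s) of a planned flow that are missing from the lists."""
    problems = []
    if source not in SUPPORTED_SOURCES:
        problems.append(f"source: {source}")
    if target not in SUPPORTED_TARGETS:
        problems.append(f"target: {target}")
    return problems


def is_supported(source: str, target: str) -> bool:
    """True when both the source and the target appear in the lists."""
    return not unsupported_ends(source, target)
```

For example, `is_supported("SAP S/4HANA Cloud", "Google BigQuery")` returns `True`, while `unsupported_ends("MS Azure SQL", "Snowflake")` returns `['target: Snowflake']`.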

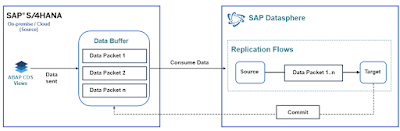

Technical architecture diagram:

So now, let us dive into how we can replicate SAP S/4HANA data to Google BigQuery using these newly released replication flows in SAP Datasphere!

Prerequisites:

Establish a source connection (e.g., to SAP S/4HANA Cloud) in SAP Datasphere.

Establish a connection between SAP Datasphere and Google BigQuery.

Replication process:

Follow these steps to configure the replication flows:

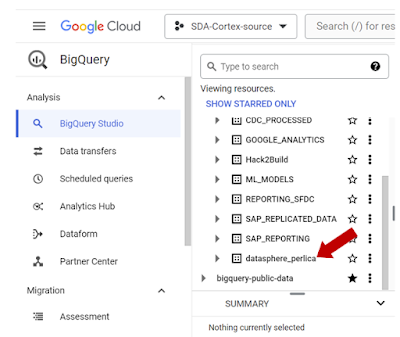

STEP 1: Ensure that a BigQuery dataset is ready to receive the replicated tables.
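If the target dataset does not exist yet, it can be created with BigQuery's `CREATE SCHEMA` DDL statement (in BigQuery, `CREATE SCHEMA` creates a dataset). The sketch below only builds that statement; the helper name, the example project and dataset names, and the optional location are assumptions for illustration. It checks the dataset name against BigQuery's naming rule (letters, digits and underscores, up to 1,024 characters) but does not validate the project ID.

```python
import re
from typing import Optional

# BigQuery dataset names: letters, digits and underscores, up to 1,024 characters.
_DATASET_NAME = re.compile(r"[A-Za-z0-9_]{1,1024}")


def create_dataset_ddl(project_id: str, dataset_id: str,
                       location: Optional[str] = None) -> str:
    """Build a CREATE SCHEMA statement for the target BigQuery dataset (hypothetical helper)."""
    if not _DATASET_NAME.fullmatch(dataset_id):
        raise ValueError(f"invalid BigQuery dataset name: {dataset_id!r}")
    # Quote each path component so project IDs containing hyphens stay valid.
    ddl = f"CREATE SCHEMA IF NOT EXISTS `{project_id}`.`{dataset_id}`"
    if location is not None:
        # Optionally pin the dataset to a location such as 'EU' or 'US'.
        ddl += f" OPTIONS (location = '{location}')"
    return ddl + ";"
```

The generated statement can be run in the BigQuery console or with `bq query --use_legacy_sql=false`.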

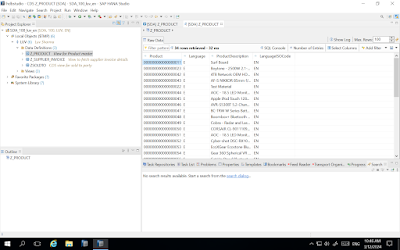

STEP 2: Create the CDS view to be replicated from the SAP S/4HANA on-premise system.

STEP 3: Head to SAP Datasphere's Data Builder and select "New Replication Flow".

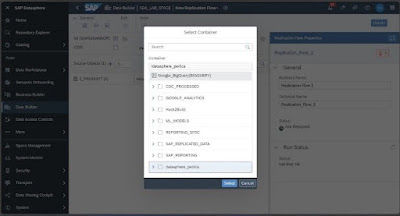

STEP 4: Choose the desired source views from the SAP system and the relevant target BigQuery dataset.

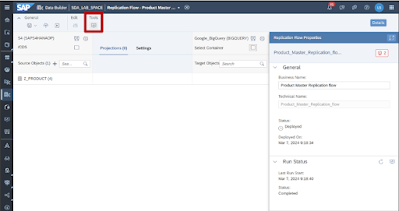

STEP 5: Set the load type, configure the settings, then save and deploy.

You can configure the settings for both the source and the target system. The Replication Thread Limit is the number of parallel jobs that the replication flow can run. As a best practice, set the same thread limit in the source and target settings.
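The Step 5 settings can be pictured as a small configuration object. This Python sketch is a hypothetical model, not an SAP API: the class names are invented, and the load-type labels `Initial Only` and `Initial and Delta` are assumed to match the options shown in the replication flow editor. It turns the equal-thread-limit best practice into a check.

```python
from dataclasses import dataclass
from enum import Enum


class LoadType(Enum):
    # Assumed to mirror the load-type options in the replication flow editor.
    INITIAL_ONLY = "Initial Only"
    INITIAL_AND_DELTA = "Initial and Delta"


@dataclass(frozen=True)
class ReplicationFlowSettings:
    """Hypothetical model of the Step 5 settings (not an SAP API)."""
    load_type: LoadType
    source_thread_limit: int  # parallel jobs on the source side
    target_thread_limit: int  # parallel jobs on the target side

    def __post_init__(self) -> None:
        # A thread limit below 1 would mean no job can run at all.
        for name in ("source_thread_limit", "target_thread_limit"):
            if getattr(self, name) < 1:
                raise ValueError(f"{name} must be at least 1")

    def warnings(self) -> list[str]:
        """Flag deviations from the equal-thread-limit best practice."""
        if self.source_thread_limit != self.target_thread_limit:
            return [
                f"source thread limit ({self.source_thread_limit}) differs from "
                f"target thread limit ({self.target_thread_limit}); "
                "best practice is to keep them equal"
            ]
        return []
```

For instance, `ReplicationFlowSettings(LoadType.INITIAL_AND_DELTA, 4, 4).warnings()` returns `[]`. The mismatch is reported as a warning rather than an error because the best practice above is presented as a recommendation, not a hard requirement.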

STEP 6: You can monitor the execution from the Tools section.

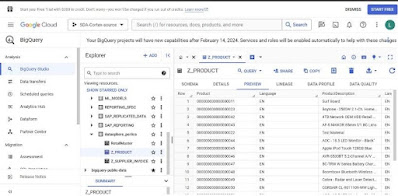

STEP 7: To verify the result, head over to the Google BigQuery dataset that was selected as the target in STEP 4.
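The check in Step 7 can also be done with SQL instead of browsing the console. This sketch, with hypothetical helper names and example identifiers, builds a query against BigQuery's `INFORMATION_SCHEMA.TABLES` view for the target dataset and compares the returned table names with the tables you expect the replication flow to create.

```python
def table_listing_query(project_id: str, dataset_id: str) -> str:
    """SQL that lists the tables in a BigQuery dataset via INFORMATION_SCHEMA."""
    # Quote each path component so project IDs containing hyphens stay valid.
    return (
        f"SELECT table_name FROM `{project_id}`.`{dataset_id}`.INFORMATION_SCHEMA.TABLES "
        "ORDER BY table_name"
    )


def missing_tables(expected: list[str], found: list[str]) -> list[str]:
    """Return the expected target tables that are absent from the dataset, sorted."""
    return sorted(set(expected) - set(found))
```

Run the query in the BigQuery console or with `bq query --use_legacy_sql=false`, then pass the returned `table_name` values to `missing_tables`; an empty list means every expected table arrived.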

RESULT: Congratulations on setting up replication from SAP S/4HANA Cloud to Google BigQuery!

Behind the scenes, data from the source system is written to a component called the Data Buffer in the form of packages. Datasphere reads these data packages and transfers them to the target system. Once all the packages have been transferred to the target system, a commit message is sent to the source system indicating that the transfer is complete and the data buffer can now be dropped.
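The package-and-commit sequence described above can be modelled as a small simulation. This is a conceptual Python sketch, not SAP's implementation: the classes `SourceSystem`, `DataBuffer` and `TargetSystem`, the `replicate` function and the package size are hypothetical. It follows the order of events in the paragraph: the source writes packages to the buffer, the packages are transferred to the target, and only after the last package arrives is the commit sent and the buffer dropped.

```python
from dataclasses import dataclass, field


@dataclass
class DataBuffer:
    """Holds packages until the source receives the commit."""
    packages: list[list[dict]] = field(default_factory=list)
    dropped: bool = False

    def drop(self) -> None:
        self.packages.clear()
        self.dropped = True


@dataclass
class SourceSystem:
    rows: list[dict]
    package_size: int
    committed: bool = False

    def write_packages(self, buffer: DataBuffer) -> None:
        # Split the rows into fixed-size packages and write them to the buffer.
        for start in range(0, len(self.rows), self.package_size):
            buffer.packages.append(self.rows[start:start + self.package_size])

    def receive_commit(self, buffer: DataBuffer) -> None:
        # Every package reached the target, so the buffer may be dropped.
        self.committed = True
        buffer.drop()


@dataclass
class TargetSystem:
    received: list[dict] = field(default_factory=list)


def replicate(source: SourceSystem, buffer: DataBuffer, target: TargetSystem) -> int:
    """Run one transfer cycle and return the number of packages moved."""
    source.write_packages(buffer)
    transferred = 0
    # Packages are read, not removed: they stay buffered until the commit.
    for package in buffer.packages:
        target.received.extend(package)
        transferred += 1
    source.receive_commit(buffer)
    return transferred
```

With five rows and `package_size=2`, `replicate` moves three packages (2 + 2 + 1 rows), after which the source is marked as committed and the buffer is empty.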
