In this blog post, I will demonstrate how to send data to SAP HANA Cloud using the Integration Suite. Additionally, I will explain how to handle nested JSON data and distribute it across multiple tables utilizing parallel multicast and mapping functions.

Problem Statement:

We have exposed an API endpoint through which we push data in JSON format and in response we get the insert count in particular tables. The input data contains user details and role details in nested form. We are supposed to insert the user details in User Table whereas in the User-Role mapping table for each role associated with a user, we ensure the creation of a corresponding entry, linking the user's details with their roles. Our requirement is to process the JSON data via CPI and populate these two tables.

Architecture:

Adding JDBC Data Source in Integration Suite

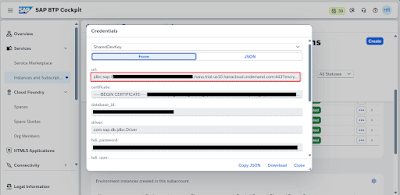

We need JDBC URL, User ID and Password to connect to the SAP HANA Cloud Database. We can get it from instance credentials.

Here we get the JDBC URL for our database which will be like “jdbc:sap:// <instanceID>.hana.trial-us10.hanacloud.ondemand.com:443/?encrypt=true”

We also get the Runtime User ID and Password for the Schema in the same key.

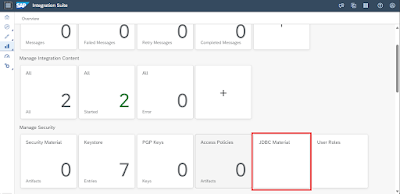

Go to your integration suite tenant and click on JDBC material under Manage security section.

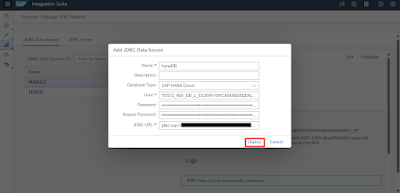

Add the instance details we got from credentials and deploy.

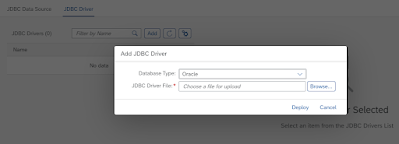

In case of 3rd party database such as Microsoft SQL Server, Oracle and DB2 you need to upload the JDBC driver jar file under JDBC driver tab. In case of HANA, it is not required.

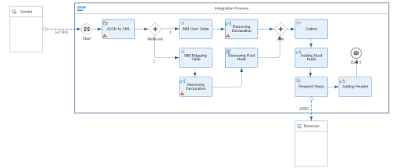

Integration Flow Design:

I have created this integration flow where I have exposed one endpoint to get the JSON data. Firstly, I have converted the JSON data to XML format because we will be sending data through JDBC Adapter in standard XML format. Below is the standard format for the XML which is used to insert data into database.

<root>

<StatementName1>

<dbTableName action="INSERT">

<table>SCHEMA.”TABLENAME”</table>

<access>

<col1>val1</col1>

<col2>val2</col2>

<col3>val3</col3>

</access>

</dbTableName>

</StatementName1>

</root>

I have used parallel multicast to route the message simultaneously in two different branches since we have to insert data in two different tables.

Let’s look at message mappings for both the routes.

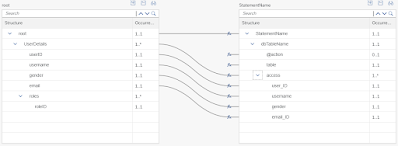

For User Table we are using simple one to one mapping and passing "INSERT" in the action attribute.

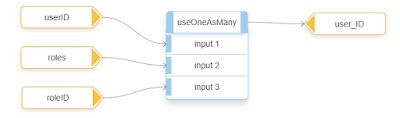

This is the mapping for the mapping table where we map users and their roles. As you can see the userID node has single occurrence per record, but we need it to occur multiple times as per the number of roles that particular user has. So, we will be using the useOneAsMany Node function.

We then gather the messages from both the routes and pass them together through the JDBC Adapter using Batch processing.

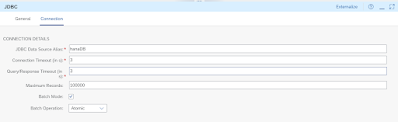

Let’s look at the configuration of JDBC Adapter.

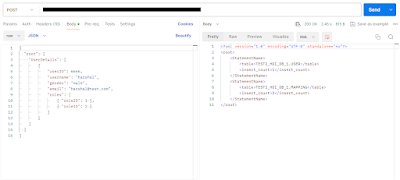

Here is the input payload content I am passing from the Postman.

{

"root": {

"UserDetails": [

{

"userID": 6666,

"username": "harshal",

"gender": "male",

"email": "harshal@test.com",

"roles": [

{ "roleID": 1 },

{ "roleID": 2 }

]

}

]

}

}

Here is the XML payload content that goes through JDBC Adapter.

<root>

<StatementName>

<dbTableName action="INSERT">

<table>TEST2_HDI_DB_1.USER</table>

<access>

<user_ID>6666</user_ID>

<username>harshal</username>

<gender>male</gender>

<email_ID>harshal@test.com</email_ID>

</access>

</dbTableName>

</StatementName>>

<StatementName>

<dbTableName action="INSERT">

<table>TEST2_HDI_DB_1.MAPPING</table>

<access>

<user_ID>6666</user_ID>

<role_ID>1</role_ID>

</access>

<access>

<user_ID>6666</user_ID>

<role_ID>2</role_ID>

</access>

</dbTableName>

</StatementName>

</root>

Response in Postman:

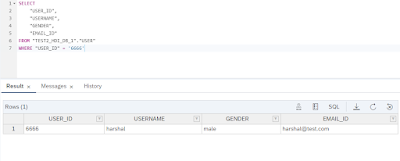

Let's check our entries in HANA Database.

No comments:

Post a Comment