Introduction

SAP Datasphere has introduced a new feature, 'Replication Flows'. This capability, now available with Azure Data Lake as a target, lets you copy multiple tables from a source to a target in a single flow.

In this blog, we’ll provide a step-by-step tutorial on replicating data from SAP S/4HANA to Azure Data Lake, showcasing the practical application and efficiency of this new feature in real-world scenarios.

Now, let's dive in.

Steps:

1. To start, you will need to create a connection in your SAP Datasphere instance to Azure Data Lake.

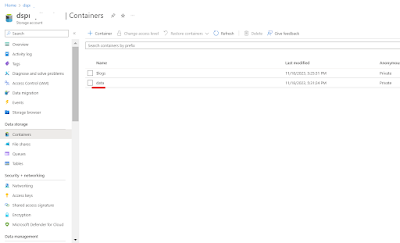

2. Make sure your Azure Data Lake has a dataset into which you want to replicate the tables. You will select it as the target container in step 11.

3. Make sure you have a source connection. In this case, we will be using an SAP S/4HANA On-Premise connection. You will need to create this connection in the ‘Connections’ tab in SAP Datasphere.

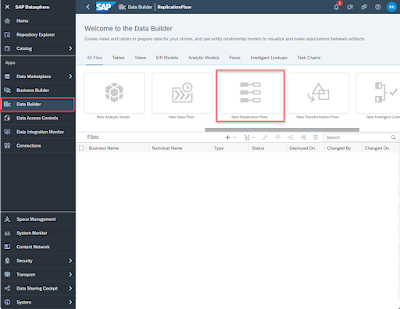

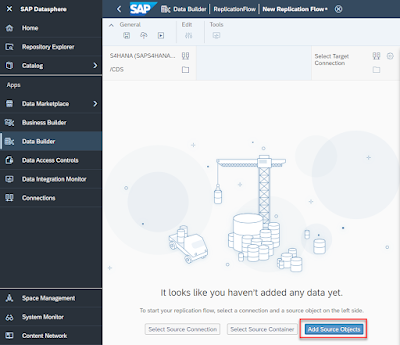

4. Navigate to SAP Datasphere and click the ‘Data Builder’ option in the left panel. Find and click the ‘New Replication Flow’ option.

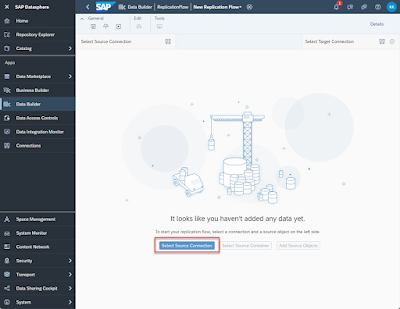

5. Click on ‘Select Source Connection’.

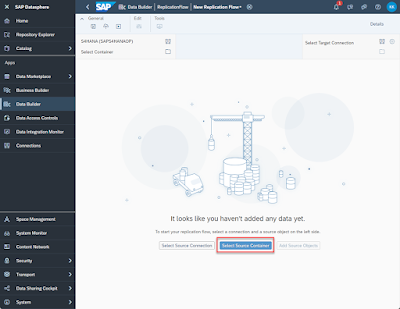

6. Choose the source connection you want. We will be choosing SAP S/4HANA On-Premise.

7. Click ‘Select Source Container’.

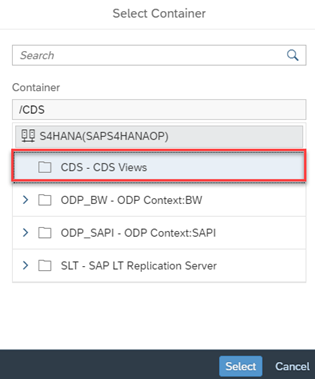

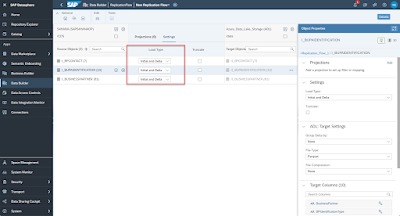

8. Choose ‘CDS Views’, then click ‘Select’.

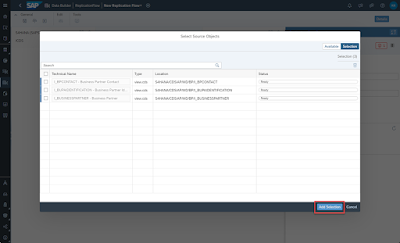

9. Click ‘Add Source Objects’ and choose the views you want to replicate. You can choose multiple views if needed. Once you have finalized the objects, click ‘Add Selection’.

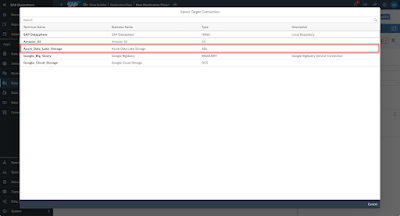

10. Now, we select our target connection. We will be choosing Azure Data Lake as our target. If you experience any errors during this step, please refer to the note at the end of this blog.

11. Next, we choose the target container. Recall the dataset you created in Azure Data Lake earlier in step 2. This is the container you will choose here.

12. In the middle selector, click ‘Settings’ and set your load type. ‘Initial Only’ loads all selected data once. ‘Initial and Delta’ means that after the initial load, the system checks every 60 minutes for changes (delta) and copies them to the target.

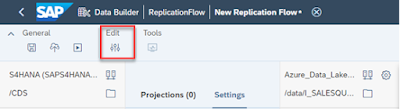

13. Once done, click the ‘Edit Projections’ icon in the top toolbar to set any filters and mappings.

14. You can also change the write settings for your target through the settings icon next to the target connection name and container.

15. Finally, rename the replication flow in the details panel on the right. Then use the top toolbar icons to ‘Save’, ‘Deploy’ and ‘Run’ the replication flow. You can monitor the run in the ‘Data Integration Monitor’ tab in the left panel of SAP Datasphere.

16. When the replication flow is done, you should see the target tables in Azure Data Lake, as shown below. Note that the replication flow adds three columns to every table to support delta capture: ‘operation_flag’, ‘recordstamp’, and ‘is_deleted’.

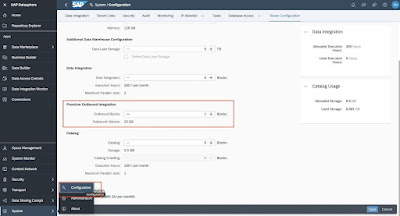
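To illustrate how the three delta columns from step 16 might be used downstream, here is a minimal Python sketch that collapses replicated change records into the current state of a table. Only the column names ‘operation_flag’, ‘recordstamp’, and ‘is_deleted’ come from this post. The key column `id`, the flag values `I`/`U`/`D`, the ISO timestamp format, and the helper `latest_state` are assumptions made for illustration, so check them against your own replicated files.

```python
def latest_state(rows, key):
    """Collapse replicated change records into the current row per key.

    Assumes 'recordstamp' sorts chronologically (e.g. ISO 8601 strings)
    and 'is_deleted' is a boolean. Both are assumptions for this sketch.
    """
    latest = {}
    # Process records oldest first so that later changes overwrite earlier ones.
    for row in sorted(rows, key=lambda r: r["recordstamp"]):
        latest[row[key]] = row
    # Drop keys whose most recent record marks them as deleted.
    return [row for row in latest.values() if not row["is_deleted"]]


# Hypothetical sample data: an initial load followed by two delta records.
rows = [
    {"id": 1, "name": "A", "operation_flag": "I",
     "recordstamp": "2024-01-01T00:00:00", "is_deleted": False},
    {"id": 2, "name": "B", "operation_flag": "I",
     "recordstamp": "2024-01-01T00:00:00", "is_deleted": False},
    {"id": 1, "name": "A2", "operation_flag": "U",
     "recordstamp": "2024-01-01T01:00:00", "is_deleted": False},
    {"id": 2, "name": "B", "operation_flag": "D",
     "recordstamp": "2024-01-01T02:00:00", "is_deleted": True},
]

current = latest_state(rows, "id")
for row in current:
    print(row["id"], row["name"])
```

In this sample, the record with `id` 1 is updated and the record with `id` 2 is deleted, so only the updated version of `id` 1 remains in `current`.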

Note: You may need to add the Premium Outbound Integration block to your tenant in order to deploy the replication flow.
