This blog is a guide for anyone who needs to process large files from an SFTP source and load the data into a HANA Database. The solution outlined here uses SAP Integration Suite. Processing large files has always come with challenges around performance, memory usage, and overall efficiency, and the approach shared in this guide addresses each of them.

Background Information

During a recent migration project, we were challenged with replacing an integration previously built on the Informatica platform with SAP BTP Integration Suite (Cloud Integration). During the migration a crucial task emerged: retrieving large files from an external SFTP server and loading the data into a HANA Database On-Premise table.

Systems and Platforms

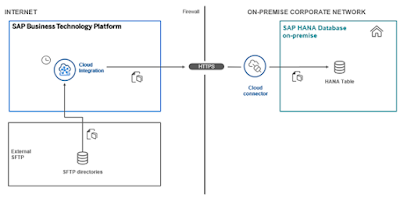

◉ SAP BTP Integration Suite (Cloud Integration): Integration Platform.

◉ SAP HANA Database On-Premise: Target destination for the data.

◉ External SFTP (SSH File Transfer Protocol): Source location for the large files.

Business Requirement

Regularly monitor the external SFTP folder for incoming files and, when a file is detected, transfer its data to the HANA Database On-Premise table through SAP Cloud Integration. The graphic below is a diagram that represents the solution we deployed.

The Challenge with this Integration

File processing within SAP Cloud Integration is generally straightforward and handled seamlessly by the platform. Challenges arise when dealing with large files, approximately 400 MB or larger. Depending on the environment's capacity, unforeseen issues may occur, such as messages becoming stuck in processing status and being automatically aborted after 24 hours without completing.

It is difficult to recommend a specific maximum initial file size. Memory consumption varies significantly with the data content and with the characteristics of each processing step. Operations such as CSV-to-XML conversion and message mapping process the payload as a whole, which results in considerable memory usage.

To address this, it is advisable to divide the input into manageable chunks for more efficient processing.
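To make the idea concrete, here is a minimal Python sketch of chunked reading. It only illustrates the principle and is not how Cloud Integration works internally; the function name `iter_chunks`, the header-row assumption, and the sample data are all hypothetical.

```python
import csv
import io
from itertools import islice
from typing import Iterable, Iterator, List


def iter_chunks(lines: Iterable[str], rows_per_chunk: int) -> Iterator[List[List[str]]]:
    """Yield lists of parsed CSV rows, at most rows_per_chunk rows each."""
    reader = csv.reader(lines)
    header = next(reader, None)  # assumption: the first line is a header row
    if header is None:
        return  # empty input, nothing to yield
    while True:
        # islice pulls only the next rows_per_chunk rows from the reader,
        # so a single chunk is held in memory at a time.
        chunk = list(islice(reader, rows_per_chunk))
        if not chunk:
            break
        yield chunk


# Tiny in-memory stand-in for a large file (hypothetical data).
sample = io.StringIO("id,name\n1,a\n2,b\n3,c\n4,d\n5,e\n")
chunk_sizes = [len(chunk) for chunk in iter_chunks(sample, rows_per_chunk=2)]
print(chunk_sizes)
```

Because each chunk is converted and mapped on its own, peak memory depends on the chunk size rather than on the size of the whole file.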

Solution Implemented

The solution we implemented uses two instances of the General Splitter.

The first one breaks the original input file into smaller pieces, while the second one sends the data to HANA in batches. This approach enables parallel processing with a defined number of concurrent processes, so the complete payload can be sent to HANA efficiently.

1 - SFTP Sender to trigger the iFlow

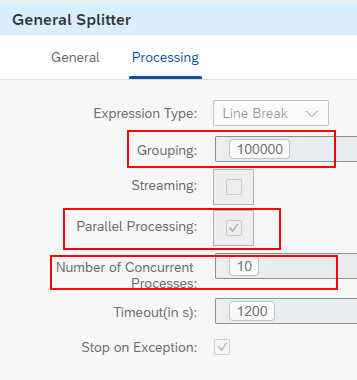

2 - File General Splitter to process a set number of records at a time (for example, a file with 1,000,000 rows is split into 10 branches of 100,000 rows each)

◉ Grouping: number of rows in each block

◉ Parallel Processing: enables the blocks to be processed in parallel

◉ Number of Concurrent Processes: number of threads executing in parallel
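The three settings above can be pictured with a small Python analogy. This is only a sketch of the concept, not the General Splitter's implementation: `split_into_groups`, `process_group`, and `run` are hypothetical names, and the row count is scaled down so the example runs quickly.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_groups(rows: List[str], grouping: int) -> List[List[str]]:
    """Cut the rows into consecutive blocks of `grouping` rows (Grouping)."""
    return [rows[i:i + grouping] for i in range(0, len(rows), grouping)]


def process_group(group: List[str]) -> int:
    """Placeholder for the per-block work (conversion, mapping, sending)."""
    return len(group)


def run(rows: List[str], grouping: int, concurrent_processes: int) -> List[int]:
    groups = split_into_groups(rows, grouping)
    # max_workers caps how many blocks are processed at the same time
    # (Number of Concurrent Processes).
    with ThreadPoolExecutor(max_workers=concurrent_processes) as pool:
        return list(pool.map(process_group, groups))


# The example from the text: 1,000,000 rows with a grouping of 100,000.
expected_groups = math.ceil(1_000_000 / 100_000)

# Scaled-down run: 1,000 rows, grouping of 100, at most 4 blocks in parallel.
rows = [f"row-{i}" for i in range(1_000)]
results = run(rows, grouping=100, concurrent_processes=4)
print(expected_groups, len(results), sum(results))
```

A higher number of concurrent processes shortens the total run time only as long as the tenant and the target database can absorb the extra load, so the value is worth tuning per environment.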

3 - Convert the original payload CSV to XML (using the standard function)

4 - Graphical mapping to create the SQL XML payload for HANA (field transformations to match the HANA format)
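For readers who have not seen such a payload, the sketch below builds one in Python. In the iFlow this is done by the graphical mapping, not by a script. The element layout (`root`, a statement element, `dbTableName` with an `action` attribute, `table`, and repeated `access` blocks) follows the XML SQL format as it is commonly described for the JDBC receiver adapter; treat it as an assumption and check it against the SAP documentation. The statement name `InsertStatement`, the table `MYSCHEMA.SALES`, and the columns are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def build_insert_payload(table: str, rows: List[Dict[str, str]]) -> str:
    """Build an INSERT payload with one <access> block per row."""
    root = ET.Element("root")
    statement = ET.SubElement(root, "InsertStatement")  # hypothetical statement name
    db_table = ET.SubElement(statement, "dbTableName", action="INSERT")
    ET.SubElement(db_table, "table").text = table
    for row in rows:
        access = ET.SubElement(db_table, "access")
        for column, value in row.items():
            # Each column becomes a child element holding the value to insert.
            ET.SubElement(access, column).text = value
    return ET.tostring(root, encoding="unicode")


# Hypothetical table and rows, for illustration only.
payload = build_insert_payload(
    "MYSCHEMA.SALES",
    [{"ID": "1", "AMOUNT": "10.50"}, {"ID": "2", "AMOUNT": "7.25"}],
)
print(payload)
```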

5 - JDBC General Splitter to send a set number of records to HANA at a time (batches)

6 - Send the data to HANA

7 - Gather to merge all the HANA responses

8 - Gather to merge all the records processed
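Steps 5 to 8 form a split, send, and gather pattern, which the following Python sketch mimics. It is a conceptual stand-in only: `send_batch` does not talk to a database, the response shape `{"inserted": n}` is invented for illustration, and the batch size of 1,000 is an arbitrary assumption.

```python
from typing import Dict, List


def split_into_batches(records: List[dict], batch_size: int) -> List[List[dict]]:
    """Second split: cut one block of records into batches for the database."""
    return [records[i:i + batch_size] for i in range(0, len(records), batch_size)]


def send_batch(batch: List[dict]) -> Dict[str, int]:
    """Stand-in for the JDBC call; returns a made-up response-like dict."""
    return {"inserted": len(batch)}


def gather(responses: List[Dict[str, int]]) -> Dict[str, int]:
    """Merge the per-batch responses into a single summary."""
    return {
        "batches": len(responses),
        "inserted": sum(response["inserted"] for response in responses),
    }


# One block of 2,500 hypothetical records, sent in batches of 1,000.
records = [{"ID": str(i)} for i in range(2_500)]
responses = [send_batch(batch) for batch in split_into_batches(records, 1_000)]
summary = gather(responses)
print(summary)
```

Gathering the responses into one summary makes it easy to compare the number of inserted records with the number of rows read from the file.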

9 - Exception handling that sends an alert email to a distribution list, after which the escalation event is raised

Benefits/Advantages of the implemented solution

This solution utilizes an approach that harnesses the strengths of SAP Cloud Integration while addressing common challenges associated with data processing and integration. The emphasis on parallelization, error prevention, and leveraging out-of-the-box capabilities contributes to the efficiency, reliability, and scalability of the overall solution.
