The recent success of applying state-of-the-art AI algorithms on tasks with modern big data has raised concerns on their efficiency. For instance, ensemble methods like random forest usually require lots of sub-learners to achieve favorable predictive performance, which result in an increasing demand for model storage space with an increasing dataset size.

Such tree-based models may go to GB level, making them difficult to deploy on resource-constrained devices or costly in the cloud. Moreover, a larger model usually causes a higher inference time and energy consumption which are not usable in many real-world applications.

To address this problem, many efficient methods are proposed including making inference of a machine learning model faster (time-efficient), consuming fewer computational resources (computation-efficient), less memory (memory-efficient) and less disk space (storage-efficient). Model compression is one of the most popular methods that reduces the size of large models without compromising predictive accuracy.

In SAP HANA PAL (Predictive Analysis Library), we also introduce lossy model compression techniques in several popular methods including Support Vector Machine (SVM), Random Decision Trees (RDT) and Hybrid Gradient Boosting Tree (HGBT) with the minimum loss of the predictive accuracy and no delay for inference.

In the following sections, we will dive deep in the model compression methods applied in SAP HANA PAL. At the end of the blog post, some useful terms and links are listed.

Tree-based Algorithms – RDT/ HGBT

Regards to these tree-based algorithm whose trees are large and independent and identically distributed random entities containing many additional characteristics and parameters, given the training data. Hence, the ensemble model is thus rich of redundancy which permits us in inferring their probability structure and constructing efficient encoding scheme.

The compression methodology we applied in RDT/ HGBT focus on the following attributes:

1. Tree structure: Each of the trees’ structure is represent with a Zaks sequence and then we apply a simple LZ-based encoder after all sequences are concatenated.

2. The split of the nodes (Variable Name, Split Value): Usually, each node is defined by a variable name and a corresponding selected split value. As a result of the recursive construction of the tree, the method assumes that the probabilistic model for a node only depends on its depth and parents. Then, based on probabilistic modeling, models (Variable Name Models and Split Value Models) are clustered via Bregman divergence. Then, the data which corresponds to a model could be compressed by Huffman code according to center probability.

3. The values of the leaves: Also, the method uses a simplified model in which the distribution of the fits in a leaf relies on its depth and parent’s variable name. In the case of classification problems, the usage of entropy coding is suitable as the fits are categorical. However, in the regression problems, quantization techniques like Lloyd-max are required to take over continuous set of values. Such quantization results generally lead to very regularized distortion which could be handled well by setting the distortion level to achieve favorable compression rate.

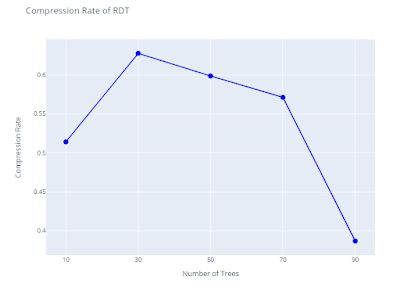

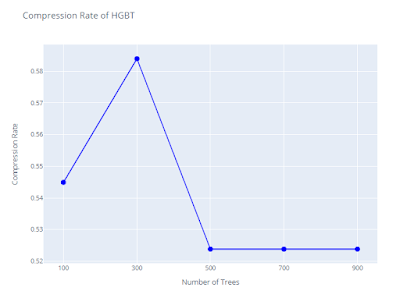

Moreover, this lossless compression scheme allows make predictions from the compressed model. The results of compression rate of RDT and HGBT with various number of trees are shown in the following two figures. The dataset (768 rows, 9 columns) used in the example is from Kaggle and the original data comes from National Institute of Diabetes and Digestive and Kidney Diseases.

SVM

The aim of SVM is to find a set of support vectors to distinguish the classes and maximize the margin. Sometimes, the number of support vectors is very huge. In particular, categorical data need to be mapped to be continuous through one-hot encoding technique, which required more space for model storage in JSON/PMML format.

Hence, we applied Lloyd-Max quantization and Huffman coding to compress support vectors into a string in SVM. The scheme also enables predictions from the compressed format. In addition, in one-hot encoding, each categorical value is represented only by a value which can significantly reduce the use of memory.

Terms

Compression Rate

Compression Rate = Compressed Size / Uncompressed Size

Quantization

Quantization is the process of mapping continuous infinite values to a smaller set of discrete finite values. One popular quantizer is Lloyd-Max algorithm which designs non-uniform quantizers optimized according to the prevailing pdf of the input data.

Entropy Coding

Entropy is the smallest number of bits needed to represent a symbol. Hence, entropy coding techniques are lossless coding methods which could approach such entropy limit. One common form is Huffman coding which uses a discrete number of bits for each symbol. Another is arithmetic coding, which outputs a bit sequence representing a point inside an interval. The interval is built recursively by the probabilities of the encoded symbols.

Tree-based Model Compression

There are many research directions of model compression of tree ensembles. One popular line focus on pruning techniques such as removing redundant components (features and trees), selecting optimal rule subsets, and choosing an optimal subset of trees. However, such pruning schemes are lossy and no guarantees on the compression rate. Another line is to train an artificial neural network to mimic the functionality of tree ensembles which is faster but both lossy and irreversible.

In this blog post, we introduce the model compression methodology used in RDT, HGBT and SVM in SAP HANA PAL which could offer lossless model compression without compromising predictive performance.

No comments:

Post a Comment