In this blog, I will give you an overview of a solution that extracts supplier data from sourcing events in SAP Ariba Sourcing and saves it to a mailing list in SAP Qualtrics XM for Suppliers, using SAP Business Technology Platform (BTP) services.

Process Part 1

First, to extract the information from SAP Ariba Sourcing, I use two APIs: the Operational Reporting for Sourcing (Synchronous) API, to get events within a date range, and the Event Management API, which returns supplier bid and invitation information for those sourcing events.
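If the date range you need is longer than a single reporting request allows, one option is to split it into consecutive windows and issue one request per window. The Python sketch below shows that idea. It is illustrative only: the 31-day window size and the `createdDateFrom`/`createdDateTo` filter keys are assumptions, so check the Operational Reporting for Sourcing API reference for the real limits and filter names of the view template you use.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Iterator, Tuple

# ASSUMPTION: maximum span per request; verify the real limit in the API docs.
MAX_WINDOW = timedelta(days=31)


def date_windows(start: datetime, end: datetime,
                 max_window: timedelta = MAX_WINDOW) -> Iterator[Tuple[datetime, datetime]]:
    """Yield consecutive, non-overlapping (from, to) windows covering start..end."""
    if end <= start:
        raise ValueError("end must be after start")
    current = start
    while current < end:
        window_end = min(current + max_window, end)
        yield current, window_end
        current = window_end  # next window starts where this one ended


def build_filters(window_from: datetime, window_to: datetime) -> str:
    """Serialize one window as a JSON filter string.

    ASSUMPTION: the filter key names are hypothetical placeholders.
    """
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    return json.dumps({
        "createdDateFrom": window_from.strftime(fmt),
        "createdDateTo": window_to.strftime(fmt),
    })


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    end = datetime(2024, 3, 1, tzinfo=timezone.utc)
    for w_from, w_to in date_windows(start, end):
        print(build_filters(w_from, w_to))
```

Each filter string would then go into one synchronous reporting call, and the event IDs it returns would drive the Event Management API calls.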

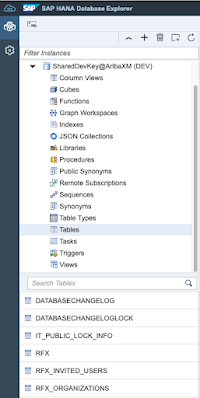

Then, I store the information I need in an SAP HANA Cloud database. I created three tables to hold the data that will be sent to SAP Qualtrics XM for Suppliers: RFx header information, invitations, and organization contact data.
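The three tables could look roughly like the schema below. This is a hypothetical sketch: the table and column names are illustrative, not the ones used in this solution, and it runs against an in-memory SQLite database through Python's built-in `sqlite3` module so it can be tried locally. In SAP HANA Cloud you would issue equivalent `CREATE TABLE` statements (for example through the `hdbcli` driver), adjusting data types to HANA's SQL dialect.

```python
import sqlite3

# HYPOTHETICAL schema: names and columns are illustrative only.
SCHEMA = """
CREATE TABLE rfx_header (
    event_id    TEXT PRIMARY KEY,   -- Ariba sourcing event ID
    title       TEXT NOT NULL,
    status      TEXT,
    close_date  TEXT                -- ISO 8601 timestamp
);

CREATE TABLE organization_contact (
    supplier_id TEXT PRIMARY KEY,   -- supplier organization ID
    org_name    TEXT NOT NULL,
    first_name  TEXT,
    last_name   TEXT,
    email       TEXT NOT NULL
);

CREATE TABLE invitation (
    event_id    TEXT NOT NULL REFERENCES rfx_header(event_id),
    supplier_id TEXT NOT NULL REFERENCES organization_contact(supplier_id),
    invited_at  TEXT,
    has_bid     INTEGER NOT NULL DEFAULT 0,  -- 1 if the supplier submitted a bid
    PRIMARY KEY (event_id, supplier_id)
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(SCHEMA)


def participants(conn: sqlite3.Connection, event_id: str) -> list:
    """Return (email, first_name, last_name, org_name) for suppliers that bid on an event."""
    return conn.execute(
        """
        SELECT o.email, o.first_name, o.last_name, o.org_name
        FROM invitation i
        JOIN organization_contact o ON o.supplier_id = i.supplier_id
        WHERE i.event_id = ? AND i.has_bid = 1
        ORDER BY o.email
        """,
        (event_id,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    conn.execute("INSERT INTO rfx_header VALUES ('Doc1', 'Packaging RFP', 'Closed', NULL)")
    conn.execute("INSERT INTO organization_contact VALUES "
                 "('S1', 'Acme', 'Ann', 'Lee', 'ann@acme.example')")
    conn.execute("INSERT INTO invitation VALUES ('Doc1', 'S1', NULL, 1)")
    print(participants(conn, "Doc1"))
    # → [('ann@acme.example', 'Ann', 'Lee', 'Acme')]
```

Keying `invitation` on `(event_id, supplier_id)` means a supplier appears at most once per event, which keeps the join that feeds the mailing list free of duplicates.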

Finally, I send the required information to an SAP Qualtrics XM for Suppliers mailing list, which then automatically sends surveys to the suppliers that participated in the SAP Ariba sourcing events.

To send the information to SAP Qualtrics XM for Suppliers, I use the Create Mailing List API.
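Before calling the Create Mailing List API, the rows from the database have to be turned into contact records. The Python sketch below shows one way to do that and to prepare (but not send) the HTTP request. Everything Qualtrics-specific here is an assumption to verify against the Qualtrics API reference: the data-center host, the `directories/{directoryId}/mailinglists` path, the `X-API-TOKEN` header, and the contact field names. The embedded-data keys (`aribaEventId` and so on) and `DIRECTORY_ID` are hypothetical.

```python
import json
import urllib.request
from typing import Dict, Iterable, List, Tuple

# ASSUMPTIONS: host, directory ID, path, and field names are placeholders;
# confirm them against the Qualtrics API reference before use.
BASE_URL = "https://yourdatacenterid.qualtrics.com/API/v3"
DIRECTORY_ID = "POOL_XXXXXXXX"  # hypothetical


def to_contact(row: Tuple[str, str, str, str], event_id: str, event_title: str) -> Dict:
    """Map one (email, first_name, last_name, org_name) row to a contact payload."""
    email, first_name, last_name, org_name = row
    return {
        "email": email,
        "firstName": first_name,
        "lastName": last_name,
        # Embedded data lets the survey reference the sourcing event (keys hypothetical).
        "embeddedData": {
            "aribaEventId": event_id,
            "aribaEventTitle": event_title,
            "supplierName": org_name,
        },
    }


def dedupe_contacts(contacts: Iterable[Dict]) -> List[Dict]:
    """Keep the first contact per e-mail address (case-insensitive, trimmed)."""
    seen = set()
    unique = []
    for contact in contacts:
        key = contact["email"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(contact)
    return unique


def build_mailing_list_request(api_token: str, list_name: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST that creates a mailing list."""
    body = json.dumps({"name": list_name}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/directories/{DIRECTORY_ID}/mailinglists",
        data=body,
        headers={"X-API-TOKEN": api_token, "Content-Type": "application/json"},
        method="POST",
    )
```

Deduplicating by e-mail before upload avoids sending the same supplier contact twice within one list; the request object could then be sent with `urllib.request.urlopen` once the endpoint details are confirmed.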

Integration

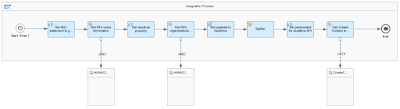

The whole process is orchestrated in SAP Integration Suite, where I created two iFlows (integration flows):

◉ The first iFlow gets the information from the SAP Ariba APIs and stores it in the SAP HANA Cloud database.
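If the first iFlow runs repeatedly (for example on a timer), consecutive runs may return the same events and invitations again. One way to keep the database consistent is to upsert on the natural key instead of inserting blindly. The Python sketch below illustrates the idea with SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` (SQLite 3.24 or later); it is not iFlow code, and the table layout is hypothetical. In SAP HANA Cloud the comparable statement is `UPSERT ... WITH PRIMARY KEY`.

```python
import sqlite3
from typing import Iterable, Mapping

# HYPOTHETICAL table: an invitation table keyed by (event_id, supplier_id).
DDL = """
CREATE TABLE IF NOT EXISTS invitation (
    event_id    TEXT NOT NULL,
    supplier_id TEXT NOT NULL,
    has_bid     INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (event_id, supplier_id)
)
"""

# Insert a new row, or refresh has_bid if the (event, supplier) pair already exists.
UPSERT = """
INSERT INTO invitation (event_id, supplier_id, has_bid)
VALUES (:event_id, :supplier_id, :has_bid)
ON CONFLICT (event_id, supplier_id) DO UPDATE SET has_bid = excluded.has_bid
"""


def store_invitations(conn: sqlite3.Connection, rows: Iterable[Mapping]) -> None:
    """Upsert all rows in a single transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(UPSERT, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(DDL)
    first_run = [{"event_id": "Doc1", "supplier_id": "S1", "has_bid": 0}]
    second_run = [{"event_id": "Doc1", "supplier_id": "S1", "has_bid": 1},
                  {"event_id": "Doc1", "supplier_id": "S2", "has_bid": 0}]
    store_invitations(conn, first_run)
    store_invitations(conn, second_run)
    print(conn.execute("SELECT * FROM invitation ORDER BY supplier_id").fetchall())
    # → [('Doc1', 'S1', 1), ('Doc1', 'S2', 0)]
```

The second run updates the existing row for `S1` and adds `S2`, so re-processing an overlapping date range does not create duplicates.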
