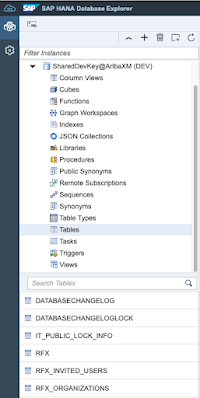

In this method, we create database artifacts in a classic database schema using declarative SQL.

For this exercise, I created the project in the SAP Business Application Studio as explained in the tutorial. I used these files to create the tables:

rfx.hdbtable

    COLUMN TABLE rfx (
        id NVARCHAR(15) NOT NULL COMMENT 'Internal ID',
        title NVARCHAR(1024) COMMENT 'Title',
        created_at DATETIME COMMENT 'Created at',
        updated_at DATETIME COMMENT 'Updated at',
        event_type NVARCHAR(30) COMMENT 'Event Type',
        event_state NVARCHAR(30) COMMENT 'Event State',
        status NVARCHAR(30) COMMENT 'Event Status',
        PRIMARY KEY(id)
    ) COMMENT 'RFx Information'

rfx_invited_users.hdbtable

    COLUMN TABLE rfx_invited_users (
        id NVARCHAR(15) NOT NULL COMMENT 'Internal ID',
        unique_name NVARCHAR(1024) NOT NULL COMMENT 'Contact Unique Name',
        full_name NVARCHAR(1024) COMMENT 'Full Name',
        first_name NVARCHAR(250) COMMENT 'First Name',
        last_name NVARCHAR(250) COMMENT 'Last Name',
        email NVARCHAR(250) COMMENT 'Email',
        phone NVARCHAR(30) COMMENT 'Phone',
        fax NVARCHAR(30) COMMENT 'Fax',
        awarded BOOLEAN COMMENT 'Awarded',
        PRIMARY KEY(id, unique_name)
    ) COMMENT 'Users invited to RFx'

rfx_organizations.hdbtable

    COLUMN TABLE rfx_organizations (
        id NVARCHAR(15) NOT NULL COMMENT 'Internal ID',
        item INTEGER COMMENT 'Item No',
        org_id NVARCHAR(15) COMMENT 'Organization ID',
        name NVARCHAR(1024) COMMENT 'Name',
        address NVARCHAR(1024) COMMENT 'Address',
        city NVARCHAR(1024) COMMENT 'City',
        state NVARCHAR(1024) COMMENT 'State',
        postal_code NVARCHAR(10) COMMENT 'Postal Code',
        country NVARCHAR(100) COMMENT 'Country',
        contact_id NVARCHAR(1024) NOT NULL COMMENT 'Contact Unique Name',
        PRIMARY KEY(id, item)
    ) COMMENT 'RFx Organizations'
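
To illustrate how the three tables could be read together once deployed, here is a minimal query sketch. It rests on assumptions that the definitions above suggest but do not state: that `id` in `rfx_invited_users` and `rfx_organizations` holds the `id` of the parent row in `rfx`, and that `contact_id` matches `unique_name` (both are commented as 'Contact Unique Name').

```sql
-- Sketch only: the join conditions below are assumptions, not part of the table definitions.
-- Assumed: u.id and o.id refer to rfx.id; o.contact_id refers to u.unique_name.
SELECT r.id,
       r.title,
       u.full_name,
       u.email,
       o.name AS organization_name
FROM rfx AS r
JOIN rfx_invited_users AS u
  ON u.id = r.id
LEFT JOIN rfx_organizations AS o
  ON o.id = u.id
 AND o.contact_id = u.unique_name
WHERE u.awarded = TRUE;
```

If those relationships hold, the query lists the awarded contacts for each RFx together with their organization, where one exists. The `LEFT JOIN` keeps contacts that have no matching organization row.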

After creating all files, the project should look like this: