Introduction

I read on social media about a New Year’s resolution idea: instead of answering questions sent in direct communication, write the response as a blog post and send the requester a link to the post. It sounds like a great way to better utilize time and share knowledge, so I decided to give it a try. Fourteen days into the new year and so far I’ve failed spectacularly. I find that when I go to write a blog post, I want to provide more background and detail, and all this takes more time than you can usually squeeze into the day. This blog post represents my attempt to take at least one question I’ve received and answer it via blog post, although admittedly after already responding to the original request.

The question I received:

The Question at Hand

Good question! It’s a question that will eventually hit all kinds of developers. HANA native developers working with XSA, HDI, or HANA Cloud are going to encounter this. But most SAP Cloud Application Programming Model developers are going to hit it eventually as well. Maybe you need to consume an existing table from an SAP application system schema, a replicated table, a virtual table, a Calculation View from another developer’s project, or even a CAP entity from a separate project. All these scenarios will eventually lead to this same problem and solution.

History

Before we talk about the solution to the question, let’s go back in time and discuss the reasons why this is even a question. If you aren’t interested in the background and are just here for the answer, feel free to skip ahead to the next section of this blog post.

Why is this even a question? Why can’t developers simply access every table or database object in the same database instance? For the origins of this question we need to go back to the earliest days of SAP HANA. Just after the big bang and before the universe started to cool and expand…

Well, not quite that far back. Maybe only back to HANA 1.0 Service Pack 1 and 2. In these earliest days of HANA, it was primarily used as a secondary database for reporting. A subset of data was often replicated from the transactional system to a “side-car” HANA system, and the reporting was done within HANA.

Security in the source systems (which were often ABAP based) was generally done at the application server layer. A technical DB user was used to connect and perform SQL statements. Therefore, when the data was replicated to HANA, no table-level security controls came over with it. Within a pure SQL database you would normally control authorizations via SQL grants directly on tables and views. A development user, “DEV_A”, would need SELECT authorization on any table to include it in their development objects. Likewise a business user, “USER_B”, would need SQL SELECT authorizations to run reports on the tables in question.

But for development with HANA, SAP wanted to simplify this approach and not tie so much security directly to the database users. We wanted the security to act more like the authorizations within the Applications themselves. So there were two problems to solve – how to improve the security access for the Business User reporting on the data and how to improve the security access for the developer creating Views and other reporting content within the database.

The first problem is often resolved within databases using Views. A view allows you to build in restrictions and aggregations and basically control how the underlying tables are used. Say you have a Sales Order Header and Item table. They each contain a hundred or more columns, some of which might even be sensitive financial or personal data. Yet you want to give a group of users reporting access to see aggregated sales totals. You don’t want to give each reporting user access to both database tables. You only want to give them access to a view which contains a subset of the content.

And this is where the SAP HANA Calculation View was born. It contains a security abstraction approach that was already common in SQL databases for stored procedure execution called definer rights. The basic idea of definer rights is that the underlying SQL query within a view or procedure is not performed by the user requesting the query but by the DB user who created the DB object in question.

So user DEV_A creates a view named V_SALES. V_SALES aggregates data from both SALES_HEADER and SALES_ITEM. USER_B, the business reporting user, is then granted SELECT on V_SALES but has no access to the underlying tables SALES_HEADER or SALES_ITEM. When they run a report that performs a SELECT against V_SALES, within the database the query is not performed by their user but is actually executed by DEV_A – the user who created the view. This approach solves part of the security control issues, the part with the Business User, but still leaves us with issues around the Development User. Hence the HANA Repository was born.
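To make the definer rights idea a bit more tangible, here is a minimal sketch in Python. It is only a toy model of SELECT privileges that I made up for illustration (the `ToyDatabase` class and its methods are hypothetical and have nothing to do with how HANA implements this internally), but it follows the DEV_A / USER_B / V_SALES example from above.

```python
class ToyDatabase:
    """Toy model of SELECT privileges and definer rights (illustration only)."""

    def __init__(self):
        self.grants = set()  # (user, object name) pairs that hold SELECT
        self.views = {}      # view name -> (owner, underlying tables)

    def grant_select(self, user, obj):
        self.grants.add((user, obj))

    def create_view(self, owner, name, tables):
        # The definer must be able to read every underlying table.
        for table in tables:
            if (owner, table) not in self.grants:
                raise PermissionError(f"{owner} has no SELECT on {table}")
        self.views[name] = (owner, tuple(tables))
        self.grant_select(owner, name)

    def select(self, caller, obj):
        # The caller only needs SELECT on the object they query directly.
        if (caller, obj) not in self.grants:
            raise PermissionError(f"{caller} has no SELECT on {obj}")
        if obj not in self.views:
            return [f"{obj} read as {caller}"]
        # Definer rights: the underlying tables are read as the view owner.
        owner, tables = self.views[obj]
        reads = []
        for table in tables:
            reads.extend(self.select(owner, table))
        return reads


db = ToyDatabase()
db.grant_select("DEV_A", "SALES_HEADER")
db.grant_select("DEV_A", "SALES_ITEM")
db.create_view("DEV_A", "V_SALES", ["SALES_HEADER", "SALES_ITEM"])
db.grant_select("USER_B", "V_SALES")

print(db.select("USER_B", "V_SALES"))
# ['SALES_HEADER read as DEV_A', 'SALES_ITEM read as DEV_A']

try:
    db.select("USER_B", "SALES_HEADER")
except PermissionError as err:
    print(err)  # USER_B has no SELECT on SALES_HEADER
```

USER_B can query the view, but every read of the underlying tables happens as DEV_A, and a direct SELECT on SALES_HEADER by USER_B is rejected.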

The HANA Repository

The issue that remains is twofold. The user, DEV_A, now needs SELECT access to the two underlying tables (SALES_HEADER and SALES_ITEM). Even in production, the developer user that created the view will need this access due to definer rights. But this leads to an even bigger problem: if the developer user gets locked or deleted, the view stops working for everyone. It’s really not ideal to have development objects dependent upon the lifecycle of the development user who created them.

This is where the HANA Repository comes to the rescue. The HANA Repository uses an abstracted approach to the creation of database artifacts called activation. It basically plays on the idea of definer rights again, but now to create the database artifacts in the database itself. When a development user activates a Calculation View, it doesn’t perform CREATE VIEW SQL commands as that database user. Instead, the activate operation calls a stored procedure to perform the creation, altering, or deletion of database objects. These stored procedures are created with Definer Rights and are in turn all created by a single system DB user called _SYS_REPO.

This way _SYS_REPO becomes the database object owner of all database objects created by the HANA Repository. So the ownership issue with the development user is solved. But this also solves another problem. The Development user no longer needs access to the underlying database tables (SALES_HEADER and SALES_ITEM) either. As long as _SYS_REPO has access to all or most reporting tables, developers can freely create views against almost everything. And hence the distinction between runtime and design time artifacts in HANA:

Content Management in SAP HANA circa 1.0 SPS 05

But ultimately this leads to another issue. You now have a single, nearly all-powerful system user in _SYS_REPO. How then do you restrict access between different organizations or development teams? Maybe you don’t want Department_A to access and reuse the views from Department_B. The HANA Repository has the concept of Packages and a whole new set of security built around Packages to control just such situations. But ultimately this approach doesn’t meet the security restriction, ease of use, or usage traceability requirements of all HANA users. These requirements we’ve discussed, along with others like version management/branching and application server layer access, ultimately led HANA to its next major evolution: the change from the SAP HANA Repository to the SAP HANA Deployment Infrastructure, or HDI for short.

HDI – HANA Deployment Infrastructure

The HANA Deployment Infrastructure or HDI takes the general concepts of the HANA Repository but then breaks it up into smaller pieces that can more easily be controlled and used standalone. Instead of one single Repository and all powerful system user, HDI creates almost a new repository (with both design time and runtime management) per project. This is referred to in HDI as an HDI Container. HDI itself was first introduced in HANA 1.0 SPS 11.

Each Container gets its own unique technical user to control database artifact creation, similar to _SYS_REPO. But HDI goes a step further and actually has two main technical users per container. One acts as the Object Owner and performs all create/delete/alter SQL commands on the database artifacts themselves. The other is the Container Runtime User, which is used for all external data access (SELECT, INSERT, etc.) to the database artifacts. This provides an even better layer of separation than _SYS_REPO could.

Diagram of an HDI Container

But this brings us to the real root of the original question: how to access tables from a different schema or container?

The Answer

The two HDI container technical users intentionally have neither access nor visibility to most other objects in the database outside of their own container. Only a few required system views/procedures are automatically granted to these technical users. So while they are all-powerful within the scope of their own container’s content, they are nearly powerless outside that container.

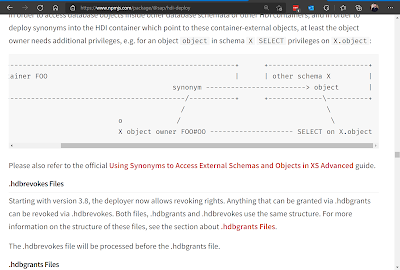

The first problem, visibility, can be solved by using Synonyms. Synonyms are like a pointer or alias to another object in the database. But the beauty is that synonyms live within a specific Schema or, in the case of HDI, a Container (hint: within the DB the Container is really just a Schema). This way we can have a table in a foreign schema or container and use a synonym to give the technical users of our container visibility to it. A SELECT against the foreign schema within the Container will fail, but a SELECT against the Synonym will work (once the synonym is created, of course).

But that comes to the other part – how to create the Synonym. If the technical users of Container_A can’t see or access the objects of your foreign schema or container; how can they create a synonym to them? Simple answer – they can’t.

They need to be granted that access specifically by the owner of the foreign schema or container. This way, control of who accesses the tables or other database objects still lies with the owner. This is unlike the _SYS_REPO situation, where any developer could essentially use almost anything in the system. This special grant is done via the hdbgrants artifact in the HDI container where you want to create the synonym. It uses a user-provided service or a direct binding to the foreign container to perform the actual granting. This way a user from the foreign environment is the one granting access to the technical users in your container. But the definition of the grant (or think of it as the request for the grant) is a design time artifact within your development project. This way the request for the grant is just another development object that is transported along with your content, and the grant itself is done upon deployment of the HDI container.
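As a rough sketch of what such a grant request can look like, the following Python snippet builds the JSON content of an hdbgrants file and prints it. The service name `cross-schema-grantor` and the schema name `SALES_SCHEMA` are placeholders I invented for this example; the overall structure follows my reading of the @sap/hdi-deploy README, so please verify it against that document before relying on it.

```python
import json

# Hypothetical names for illustration only:
#   "cross-schema-grantor" = the bound user-provided service (or HDI container)
#   "SALES_SCHEMA"         = the foreign schema holding the target tables
grants = {
    "cross-schema-grantor": {
        # The object owner creates and alters the DB objects. It is given
        # the privilege with grant option here, assuming it must pass access
        # on to others via the views it builds on top of the synonyms.
        "object_owner": {
            "schema_privileges": [
                {
                    "reference": "SALES_SCHEMA",
                    "privileges_with_grant_option": ["SELECT"],
                }
            ]
        },
        # The application user is used for runtime access to the container.
        "application_user": {
            "schema_privileges": [
                {"reference": "SALES_SCHEMA", "privileges": ["SELECT"]}
            ]
        },
    }
}

# This text is what would be saved as a design time file ending in
# .hdbgrants inside the database module of the project.
hdbgrants_text = json.dumps(grants, indent=2)
print(hdbgrants_text)
```

Granting SELECT on the whole schema keeps the sketch short. In a real project you would probably narrow this down to a role or to individual objects, in line with the "most restrictive rights possible" advice.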

A little hint: The README.md document within the @sap/hdi-deploy module on npm actually contains some of the best documentation you can find on the whole cross container/cross schema subject. I highly suggest reading the “Permissions to Container-External Objects” section of this document to learn even more about this topic. It goes deeply into sub-topics like procedure grantors, revoking, etc.

hdi-deploy documentation

The Answer – Step by Step

Now that we have discussed all the theory and background around this question, let’s walk through the how-to in a more step-by-step approach.

1. If connecting to a foreign Schema (non-HDI Container), you are going to need a User-Provided Service. This is a way to configure a connection to the database, including which user and password you will use to connect. This should be a user in the foreign schema that has Grantor rights to the objects you want to add to your container. Although it might be tempting to just utilize a user with full rights to the foreign schema, it’s strongly recommended to configure a separate user for each usage with the most restrictive rights possible: only what is absolutely needed to fulfil the needs of this one HDI container.

2. If you are developing using the SAP Business Application Studio, the HANA Projects view has a great wizard in the option “Add Database Connection” that’s going to perform the hard part of the next step for you. Don’t worry though if you are using other development tools. Approaches for on-premise development are shown in the links in the next section.

3. This wizard is going to ask if you want to Bind to an existing User-Provided Service, Create a new User-Provided Service (both of those options are for foreign schema access), or Bind to an existing HDI container. You can then select the target depending upon the first option. This is going to bind the target container or schema to your project (updating your mta.yaml), making it possible to connect at deploy time later and use this connection to perform the actual grant.

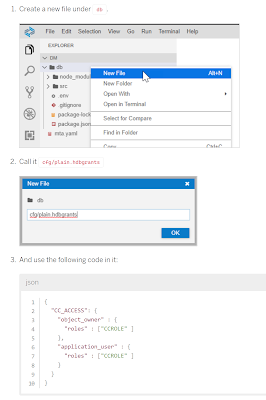

4. Now that the setup steps are done, we can go about creating the grants themselves. That’s its own development artifact (hdbgrants) that contains the roles or direct access types you want to assign to your container users. You can assign different authorizations to your Container Object Owner (who creates and alters the DB objects) and to the Application User (the one used for all runtime access to database objects in your container).

5. Personally, during development I like to deploy to the database at this point. This will test all the security configuration and grant the necessary rights before we start trying to add synonyms to the container definition.

6. The final step is to create the synonym. This is another HDI design time artifact called hdbsynonym. One trick: you don’t have to make the synonym object name the same as the target. This can be useful when working in the Cloud Application Programming Model, where some of the HANA DB naming standards aren’t compatible with CAP. This is your opportunity to rename things to be compatible.

7. You can now use the synonym as you would any other DB object, for instance in your Calculation Views.

8. There is one additional step if you want to bring the synonym into your Cloud Application Programming Model as an entity. Synonyms aren’t directly or automatically brought into your CAP metamodel. You must create a proxy entity in the CAP CDS definition for the Synonym with the special annotation @cds.persistence.exists. That annotation is very important. That’s what tells CAP not to try to create this entity in the database but just assume it is already there. Another hint: the hana-cli tool and its inspectTable or inspectView command can help save time and avoid mistakes with the generation of the proxy entity, since it must contain the column names and data types of all fields in the target of the synonym.
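Steps 6 and 8 can be sketched together. The Python snippet below builds the JSON content of an hdbsynonym file and renders a matching CDS proxy entity. Everything named here is an assumption for the sake of the example: the target table `sales.data::Header`, the schema `SALES_SCHEMA`, the column list, and the `proxy_entity` helper (which is my own, not part of hana-cli or CAP). In a real project the columns and types must match the actual target object.

```python
import json

# Hypothetical target: a table whose HANA-style name ("::" and dots) does not
# fit CAP naming, so the synonym renames it to SALES_HEADER.
synonyms = {
    "SALES_HEADER": {
        "target": {
            "object": "sales.data::Header",
            "schema": "SALES_SCHEMA",
        }
    }
}
hdbsynonym_text = json.dumps(synonyms, indent=2)


def proxy_entity(name, columns):
    """Render a CDS proxy entity for an object that already exists in the DB.

    columns is a list of (column name, CDS type, is_key) tuples.
    """
    lines = ["@cds.persistence.exists", f"entity {name} {{"]
    for column, cds_type, is_key in columns:
        prefix = "key " if is_key else ""
        lines.append(f"  {prefix}{column} : {cds_type};")
    lines.append("}")
    return "\n".join(lines)


# Assumed columns; the real list has to mirror the synonym's target.
cds_text = proxy_entity(
    "SALES_HEADER",
    [
        ("ID", "Integer", True),
        ("CUSTOMER", "String(40)", False),
        ("NET_AMOUNT", "Decimal(15, 2)", False),
    ],
)

print(hdbsynonym_text)  # content for a design time file ending in .hdbsynonym
print(cds_text)         # content for a .cds file in the CAP project
```

The synonym name and the entity name are deliberately the same, so that CAP looks for a database object called SALES_HEADER and finds the synonym instead of trying to create a table.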
