The goal of the fast restart option is to improve restart times for large in-memory SAP HANA databases. This article outlines a step-by-step approach to reducing this downtime, with recommendations on tmpfs sizing and a set of FAQs.

To understand the fast restart option (FRO) with tmpfs, you need a basic understanding of how the tmpfs filesystem works.

Basics of the tmpfs filesystem

tmpfs (temporary file system) is a virtual filesystem that stores files in volatile memory, that is, DRAM (RAM).

If you create a filesystem of type tmpfs on a Linux machine, all data stored in it is kept in RAM only and requires no additional space on disk.

Because RAM is volatile and tmpfs is backed by RAM, tmpfs doesn’t survive an operating system restart: all data in tmpfs is deleted.

FRO Concept

FRO is the technical ability to maximize SAP HANA uptime by shortening the SAP HANA start time. It leverages the SAP HANA persistent memory implementation to keep column data in DRAM, independent of SAP HANA process restarts, crashes or planned maintenance.

The SAP HANA Fast Restart option makes it possible to reuse MAIN data fragments after an SAP HANA service restart without the need to re-load the MAIN data from the persistent storage (from disk to RAM).

This means that tables loaded into tmpfs are not unloaded from tmpfs (RAM) when SAP HANA stops. After an SAP HANA restart, these tables don’t have to be reloaded, which reduces start times dramatically.

FRO with tmpfs support was released with SAP HANA 2.0 SPS 04.

Configuration efforts

Configuring the fast restart option takes at most about 30 minutes. However, you need to be sure about the tmpfs sizing, the parameter values, and the memory utilization patterns in the system.

Sizing varies by scenario, for example a new implementation, an existing system whose data size exceeds 50% of the total available RAM, or a system where expensive queries regularly consume a large heap area. A few such scenarios are discussed later in this article.

Steps to Configure FRO

Before you configure FRO, it is important to understand the underlying hardware NUMA topology and memory allocation, because the tmpfs directory structure should be aligned with that topology.

Use the following command to display the underlying NUMA topology:

cat /sys/devices/system/node/node*/meminfo | grep MemTotal | awk 'BEGIN {printf "%10s | %20s\n", "NUMA NODE", "MEMORY GB"; while (i++ < 33) printf "-"; printf "\n"} {printf "%10d | %20.3f\n", $2, $4/1048576}'

The output lists one row per NUMA node with its memory size. The instance in this example has 4 NUMA nodes.

Alternatively, you can use the command below to see the NUMA topology in detail:

numactl -H

Create a directory and a mount for each node equipped with memory, making sure that every tmpfs mount has a preferred NUMA policy, as in the example below:

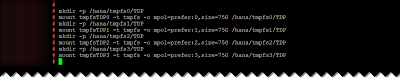

# mkdir -p /hana/tmpfs0/TDP

# mount tmpfsTDP0 -t tmpfs -o mpol=prefer:0,size=75G /hana/tmpfs0/TDP

# mkdir -p /hana/tmpfs1/TDP

# mount tmpfsTDP1 -t tmpfs -o mpol=prefer:1,size=75G /hana/tmpfs1/TDP

# mkdir -p /hana/tmpfs2/TDP

# mount tmpfsTDP2 -t tmpfs -o mpol=prefer:2,size=75G /hana/tmpfs2/TDP

# mkdir -p /hana/tmpfs3/TDP

# mount tmpfsTDP3 -t tmpfs -o mpol=prefer:3,size=75G /hana/tmpfs3/TDP

In this example, each tmpfs mount point is sized at 75 GB, so up to 300 GB (75 * 4) of data segments in total can reside in tmpfs.

Here, TDP is the SID of the test system.
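The four mkdir/mount pairs above follow one pattern per NUMA node, so they can be generated rather than typed. The sketch below only prints the commands for review and does not run them. The function name print_fro_mounts is made up for illustration, and it assumes that the nodes with memory are numbered contiguously from 0, which is not true on every machine; check the numactl -H output first.

```shell
# Print (not execute) the mkdir/mount commands for one tmpfs per NUMA node.
# $1 = SID, $2 = number of NUMA nodes, $3 = size per mount (e.g. 75G).
# Assumption: nodes with memory are numbered 0 .. $2-1.
print_fro_mounts() (
    sid="$1"; nodes="$2"; size="$3"
    n=0
    while [ "$n" -lt "$nodes" ]; do
        dir="/hana/tmpfs${n}/${sid}"
        echo "mkdir -p ${dir}"
        echo "mount tmpfs${sid}${n} -t tmpfs -o mpol=prefer:${n},size=${size} ${dir}"
        n=$((n + 1))
    done
)

# Reproduces the commands of the example system (SID TDP, 4 nodes, 75G each).
print_fro_mounts TDP 4 75G
```

Review the printed commands and run them as root once they match your topology.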

Check the mounted file systems.

The mount option mpol=prefer:<node> tells the operating system to preferably place the files of that filesystem on the given NUMA node.
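One way to check the mounts together with their mpol setting is to filter the kernel's mount table. This is a minimal sketch, assuming the /hana/tmpfsN/SID layout used in this article; the function name list_fro_mounts is hypothetical, and the second argument exists only so the function can be tried against a copy of the mount table.

```shell
# List the FRO tmpfs mounts of one SID with their mount options.
# $1 = SID, $2 = mount table to read (defaults to /proc/mounts on Linux).
# Columns in the mount table: device, mount point, fs type, options, ...
list_fro_mounts() {
    awk -v sid="$1" '
        $3 == "tmpfs" && $2 ~ ("^/hana/tmpfs[0-9]+/" sid "$") { print $2, $4 }
    ' "${2:-/proc/mounts}"
}

# Usage: list_fro_mounts TDP
```

Each printed line should show the mount point followed by options that include mpol=prefer:<node> and the configured size.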

Set the ownership and permissions of the directories:

# chown -R tdpadm:sapsys /hana/tmpfs*/TDP

# chmod 777 -R /hana/tmpfs*/TDP

It is OK to give 777 permission to /hana/tmpfs*/TDP, as data inside these directories is written with 750 permissions and <sid>adm:sapsys ownership.

Ensure that these mount points are added to /etc/fstab, because otherwise the mounts will be gone after an OS reboot.

For example:

tmpfsTDP0 /hana/tmpfs0/TDP tmpfs rw,relatime,mpol=prefer:0,size=75G

tmpfsTDP1 /hana/tmpfs1/TDP tmpfs rw,relatime,mpol=prefer:1,size=75G

tmpfsTDP2 /hana/tmpfs2/TDP tmpfs rw,relatime,mpol=prefer:2,size=75G

tmpfsTDP3 /hana/tmpfs3/TDP tmpfs rw,relatime,mpol=prefer:3,size=75G
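A forgotten fstab entry only shows up after the next OS reboot, so it is worth checking the file right after editing it. The sketch below prints every expected mount point that has no tmpfs entry. The function name missing_fstab_entries is hypothetical, and it assumes the /hana/tmpfsN/SID layout from this article with nodes numbered from 0.

```shell
# Print each expected FRO mount point that has no tmpfs line in fstab.
# $1 = SID, $2 = number of NUMA nodes, $3 = fstab file (default /etc/fstab).
missing_fstab_entries() (
    n=0
    while [ "$n" -lt "$2" ]; do
        mp="/hana/tmpfs${n}/$1"
        # awk exits 0 only if a non-comment tmpfs line for this mount point exists.
        awk -v mp="$mp" '
            $1 !~ /^#/ && $2 == mp && $3 == "tmpfs" { found = 1 }
            END { exit !found }
        ' "${3:-/etc/fstab}" || echo "$mp"
        n=$((n + 1))
    done
)

# Usage: missing_fstab_entries TDP 4     (no output means all entries exist)
```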

Configuration at SAP HANA level

Enter the basepath location in the basepath_persistent_memory_volumes parameter (this parameter can only be set at HOST level). All MAIN data fragments are stored at the location defined here. Multiple locations corresponding to NUMA nodes can be defined using a semicolon as a separator (no spaces).
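For the four mount points created earlier, the parameter value can be assembled as in the sketch below. The function name fro_basepaths is made up for illustration. Placing the parameter in the [persistence] section of global.ini is my understanding rather than something stated in this article, so verify it against the SAP documentation for your revision.

```shell
# Join one tmpfs path per NUMA node with ';' and no spaces.
# $1 = SID, $2 = number of NUMA nodes (assumed to be numbered from 0).
fro_basepaths() (
    value=""
    n=0
    while [ "$n" -lt "$2" ]; do
        # Prepend "<previous>;" only when there is a previous path.
        value="${value:+$value;}/hana/tmpfs${n}/$1"
        n=$((n + 1))
    done
    printf '%s\n' "$value"
)

# Print an ini fragment for review; the section name is an assumption.
printf '[persistence]\nbasepath_persistent_memory_volumes = %s\n' "$(fro_basepaths TDP 4)"
```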

To change the default for all newly created tables, add the parameter table_default to the [persistent_memory] section of indexserver.ini:

table_default

◉ =on: tmpfs is default for all column store tables

◉ =off: tmpfs isn’t used by default (activation via ALTER is possible at individual levels, e.g. for specific tables)

◉ =default: no explicit behavior, follow the existing default

This can also be defined in the .ini files of other services with persistence, or at the database layer as a whole, but remember that the lower-layer configuration takes precedence. I prefer to set it only in indexserver.ini, as the indexserver carries most of the data.

Restart the database so that the changes take effect. The first restart takes the usual time; from the next restart onwards you will see a dramatic reduction in restart duration. We have seen restart time drop from 40 minutes to 4 minutes. The gain depends entirely on the sizing of tmpfs and how much data resides in it.

After the restart, check that the tmpfs filesystems contain data.

Check the logs to confirm that basepath_persistent_memory_volumes has been set up properly.

Go to the trace location and check the indexserver trace files. You should see "Number of configured persistent memory basepaths = 4", since this example configures 4 paths. This indicates that the FRO configuration is correct.
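The trace check can be scripted. The sketch below extracts the reported number from a trace file so it can be compared with the number of tmpfs mounts. The function name configured_basepaths is hypothetical and the trace file path is a placeholder, since the trace location differs per installation.

```shell
# Print the most recent "Number of configured persistent memory basepaths"
# value found in a trace file. $1 = path to an indexserver trace file.
configured_basepaths() {
    sed -n 's/.*Number of configured persistent memory basepaths *= *\([0-9][0-9]*\).*/\1/p' "$1" | tail -n 1
}

# Usage (placeholder path):
#   configured_basepaths /path/to/indexserver_trace_file.trc
# Fill level of each tmpfs mount after the restart:
#   df -h /hana/tmpfs*/TDP
```

For the example system in this article, the function should print 4.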

Sizing of tmpfs

There is no standard formula or calculation available to perform the sizing of tmpfs file system. For FRO tmpfs sizing, every system is unique. Your system administrator needs to decide on tmpfs size after studying the memory utilization statistical data from the system.

Let us look at a few scenarios for better understanding.

Scenario 1:

You need to plan FRO tmpfs sizing for a system with the following specifications:

Total SAP HANA system RAM size = 5 TB

Total SAP HANA DB size (Backup Size) = 2.5 TB

Used Memory = 3 TB

Peak Used Memory = 3.8 TB

Here you may think of creating tmpfs with a total size between 2.5 TB and 3 TB (the sum of all tmpfs sizes across the NUMA nodes). Used memory is 3 TB and peak used memory is 3.8 TB (do consider month/quarter/year end and other special situations in which the system requires more memory), so the system still has more than 1 TB available.

In this scenario, if you decide to create 3 TB of tmpfs, all persistent column store MAIN data fragments will be stored in the tmpfs mounts. The size of these MAIN data fragments would be less than 2.5 TB, so the tmpfs mounts in total would be filled up to approx. 2.3 TB.

tmpfs then still has room for around 700 GB, which can be used for future DB growth and new column store MAIN data fragments. In fact, this 700 GB can also be used by the database for the pool or heap areas required by SQL query execution or other system operations, because vacant space in tmpfs is simply RAM that remains available for other operations.
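The Scenario 1 figures can be reproduced with a few lines of integer arithmetic. This is only a sketch of the bookkeeping, not a sizing formula. The function name fro_sizing is made up, values are in GB with 1 TB taken as 1000 GB as in the text, and the node count of 4 is an assumption because the scenario does not state it.

```shell
# Bookkeeping for a planned tmpfs size; all values in GB.
# $1 = total RAM, $2 = total tmpfs size, $3 = MAIN fragments held in tmpfs,
# $4 = number of NUMA nodes (assumed, not given in the scenario).
fro_sizing() {
    echo "tmpfs_per_node_gb=$(( $2 / $4 ))"
    echo "vacant_tmpfs_gb=$(( $2 - $3 ))"
    echo "ram_outside_tmpfs_gb=$(( $1 - $2 ))"
}

# Scenario 1: 5 TB RAM, 3 TB tmpfs, approx. 2.3 TB of MAIN fragments.
fro_sizing 5000 3000 2300 4
```

With these inputs the vacant tmpfs space is the 700 GB mentioned above, and 2 TB of RAM remains outside tmpfs.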

A few questions from Scenario 1:

Q – What if tmpfs is fully consumed (3 TB) after a few months of DB growth?

A – This should not create a problem in the system, as 2 TB of RAM is still available for query execution and other DB/OS operations. However, new column store MAIN fragments will not be stored in tmpfs; they will be loaded into RAM outside tmpfs as and when needed.

In simple words,

◉ SAP HANA keeps data intended for tmpfs in regular RAM if there is no space left in tmpfs

◉ Vacant space in the configured tmpfs can be used by SAP HANA for operations

Q – What will be the impact on the restart time of the database?

A – In this scenario you will see a dramatic difference in restart time, as the major part of the database is always available in tmpfs. If the current start time is approx. 30 minutes, after the FRO configuration it may come down to at most 5 minutes. However, this is not the case when the OS is rebooted, because tmpfs doesn’t survive an OS restart and all data in it is deleted. The first database start after an OS reboot will again take 30 minutes, as the column store MAIN data fragments must be reloaded from disk into tmpfs.

In simple words,

◉ tmpfs survives an SAP HANA restart, an indexserver kill and SAP HANA upgrade activities that don’t require an OS reboot

◉ The fast restart option affects only the last step of the database restart: column store data loading becomes very fast because >90% of the data is already in tmpfs and is not loaded from disk to memory again. The rest of the restart procedure runs as it did before the FRO configuration

Scenario 2:

You need to plan FRO tmpfs sizing for a system with the following specifications:

Total SAP HANA system RAM size = 3 TB

Total SAP HANA DB size (Backup Size) = 1.5 TB

Used Memory = 2 TB

Peak Used Memory = 2.7 TB

Global allocation Limit = 2.7 TB

In this scenario, very little RAM is available, and your system might be facing out-of-memory dumps during peak times or when concurrent expensive queries consume large heap and pool areas.

Here you may think of creating tmpfs with a total size of 1 TB (the sum of all tmpfs sizes across the NUMA nodes). The total size of the column store MAIN data fragments would be close to 1.3 TB, so the remaining 300 GB (1.3 TB - 1 TB) will be loaded into RAM as and when needed.

This ensures that sufficient RAM is available for operations: unloads can happen from regular RAM (for example from the 300 GB that was loaded when needed), so query execution is less likely to terminate with OOM.

However, in this scenario FRO can only take advantage of the 1 TB of tmpfs. The remaining 300 GB of MAIN data fragments has to be reloaded on every restart, so startup may take longer than expected because not all MAIN data fragments fit in tmpfs. Still, startup would be much faster than without FRO.
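The amount of data that still has to be read from disk on every restart is simply the part of the MAIN fragments that does not fit into tmpfs. A minimal sketch of that calculation follows; the function name reload_per_restart_gb is hypothetical and values are in GB, with 1 TB taken as 1000 GB.

```shell
# MAIN data (GB) that cannot be kept in tmpfs and is reloaded on each restart.
# $1 = total size of MAIN fragments, $2 = total tmpfs size.
reload_per_restart_gb() {
    if [ "$1" -gt "$2" ]; then
        echo $(( $1 - $2 ))
    else
        echo 0
    fi
}

# Scenario 2: approx. 1.3 TB of MAIN fragments, 1 TB of tmpfs.
reload_per_restart_gb 1300 1000
```

For Scenario 2 this gives the 300 GB discussed above; for Scenario 1 (2300 GB of fragments in 3000 GB of tmpfs) it gives 0.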

A few questions from Scenario 2:

Q – What happens if SAP HANA requires more memory even after unloading tables from RAM, and tmpfs data needs to be unloaded?

A – Table unloads executed implicitly by SAP HANA services because memory is running low do not, by default, free the memory in tmpfs. They simply remove the mapping of the relevant data structures from the address space of the database service process, and the MAIN data fragment remains in tmpfs. This means that the memory has not been released and can’t be used by the database for operations.

However, this behavior can be controlled by setting parameter table_unload_action in the [persistent_memory] section of the indexserver.ini file.

◉ table_unload_action = delete: Main storage is cleared; actual deletion doesn’t take place immediately but with next savepoint

◉ table_unload_action = retain: Main storage remains

Regardless of the setting of this parameter, you can also use UNLOAD with the additional DELETE PERSISTENT MEMORY clause to completely free memory, for example

UNLOAD myschema.mytable DELETE PERSISTENT MEMORY;

This will clear the MAIN data fragments from tmpfs.

In simple words,

It’s very important to size tmpfs appropriately.

Setting table_unload_action = delete does not delete the data from tmpfs immediately, because the deletion waits until the next savepoint. You may therefore see OOM errors from SQL queries if sufficient memory is not available during this wait.

If you encounter OOM with FRO enabled, refer to the following SAP Note:

2964793 – OOM when Fast Restart option is enabled

A few other FAQs, independent of any scenario

Q – If the fast restart feature with tmpfs is adopted, does it require additional physical memory?

A – Not necessarily; it depends on the workload on the system.

The main point is that you need to keep the minimum required DRAM available outside tmpfs, as explained in the scenarios above.

Q – How can I check how many tables are loaded in tmpfs?

A – You can query monitoring views such as M_CS_COLUMNS.

Q – My landscape has primary, secondary and DR sites in replication. Is it compulsory to configure FRO on all three sites?

A – No, it’s not compulsory. You can configure FRO on any one or two sites, or on all sites. There is no impact on failover scenarios.

Q – Are there any other known issues with FRO?

A – The Linux parameter fs.file-max should be maintained in line with the recommendation given in SAP Note 2600030, because every loaded column in tmpfs is an open file, so the number of open files on the operating system side can be significant.
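To see how close a host is to the fs.file-max limit, the allocated file handles can be compared with the maximum. The sketch below is one way to do that on Linux; the function name file_handle_usage_pct is made up for illustration, and the value to aim for should come from the SAP Note, not from this snippet.

```shell
# Percentage of the system-wide file handle limit that is in use.
# $1 = allocated file handles, $2 = fs.file-max
file_handle_usage_pct() {
    echo $(( $1 * 100 / $2 ))
}

# On Linux, /proc/sys/fs/file-nr holds three numbers:
# allocated handles, allocated but unused handles, and the maximum.
if [ -r /proc/sys/fs/file-nr ]; then
    read -r allocated _unused maximum < /proc/sys/fs/file-nr
    echo "file handles in use: $(file_handle_usage_pct "$allocated" "$maximum")% of $maximum"
fi
```

Running this before and after enabling FRO gives a rough idea of how many additional open files the tmpfs-backed columns add.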

Q – Is it possible to restore a database from the backup of a system with FRO configured if the target system doesn’t have FRO configured?

A – Data backups save all data of the persistence volume without accessing the RAM. A data recovery restores all data back to the persistence volume. Data backups created in systems with tmpfs can be restored in systems without tmpfs and vice versa.
