Prerequisite

Your SAP HANA database must be connected to your DI system via the connection management of DI.

1. Standard SAP HANA Operators in DI

The following section lists standard operators for integration with SAP HANA. The list is based on the categories in which the operators are grouped in DI. The focus is on the categories “Connectivity” and “SAP HANA“, as these are probably the most relevant for newcomer scenarios.

Connectivity

Connectors to various data sources are offered in the “Connectivity” category. With the “SAP HANA Monitor” and the “SAP HANA Client“, two operators for connecting to an SAP HANA database are provided.

◉ “SAP HANA Monitor” operator: can be used to monitor an SAP HANA table and output its newly inserted rows in a defined poll period. If no new row was inserted to the SAP HANA table since the last query, no output is created. This operator does not require any input and has one output port “outResult” of type “message” which contains the new rows as an array.

◉ “SAP HANA Client” operator: can be used to execute SQL statements (arbitrary SQL code or prepared statements) and to insert data (CSV or JSON) into an SAP HANA table. The operator can process SQL code (Input Port: “sql“, type: “message“) and CSV or JSON data (Input Port: “data“, type: “message“) as input and returns the result through the output port “result” of type “message”. Since the input ports work independently from each other, the execution of SQL statements and the insertion of data can be combined into more sophisticated scenarios. One simple use case is the creation of a new SAP HANA table and the ingestion of CSV data from a file within the data lake.

Connectivity (via Flowagent) / Structured Data Operators

The “Connectivity (via Flowagent)” category provides operators that run in the flowagent subengine of DI. The flowagent runs a lightweight version of SAP Data Services (Data Integration Platform) and enables the creation of pipelines for data transformation. The flowagent enables integration points to an SAP HANA database via the “HANA Table Consumer“, the “Flowagent Table Producer“, the “Flowagent File Producer” and the “Table Replicator” operator.

The category “Structured Data Operators” represents another very similar possibility to perform data transformations in pipelines. With Structured Data Operators, the new operator “Data Transform” can be utilized, which allows data transformations, such as projection, union, aggregation, and join to be modeled in a graphical interface. Structured Data Operators can connect to SAP HANA, SAP IQ, and SAP Vora.

HANA Flowgraph

The flowgraph is an artifact in SAP HANA Smart Data Integration (SDI). Flowgraphs are graphical representations of a data provisioning job, consisting of a sequence of nodes and flow lines. A HANA flowgraph can be used to transform data from remote sources into SAP through batch or real-time processing.

In the category “Data Workflows“, the operator “HANA Flowgraph” is offered to execute an SAP HANA flowgraph in an SAP HANA system via DI. The operator “HANA Flowgraph” has one input port (Input Port: “input“, type: “string“) and two output ports (Output Ports: “output” and “error“, type: “string“). This operator can only be used in a graph that exclusively contains other data workflow operators.

SAP HANA

The “SAP HANA” category provides standard operators that are specific to connecting to an SAP HANA database. The operators are dedicated to individual single use cases (e.g. initialization of a table), which is a differentiation from the “HANA Client” operator. There are many scenarios where these operators are used in combination, e.g. initializing a table and filling it with data.

◉ “Write HANA Table” operator: can be used to ingest data into one or more tables on an SAP HANA database. The operator can run either in static (one defined target table) or in dynamic mode (target table name is obtained from input message). The operator takes one input (Input Port: “input“, type: “table“) and has two output ports either for a successful ingestion (Output Port: “success“, type: “message.table“) or in case of an error (Input Port: “error“, type: “message.error“).

◉ “Run HANA SQL” operator: can be used to execute SQL statements on an SAP HANA database. The operator can run either in static (SQL command defined within the operator) or in dynamic mode (operator executes SQL statements from each input message). The operator can take one input (Input Port: “input“, type: “*“) and has two output ports either for a successful SQL execution (Output Port: “success“, type: “message.table“) or in case of an error (Input Port: “error“, type: “message.error“).

◉ “Initialize HANA Table” operator: can be used to initialize one or more tables on an SAP HANA database. The operator can run either in static (initializes a table based on the configuration settings, no input message needed) or in dynamic mode (initializes the table(s) based on each input message). The operator can take one input (Input Port: “input“, type: “table“) and has two output ports either for a successful initialization (Output Port: “success“, type: “message.table“) or in case of an error (Input Port: “error“, type: “message.error“).

◉ “Read HANA Table” operator: can be used to read data from a table in SAP HANA database. The operator can run either in static (reads the table based on the static configuration, no input message needed) or in dynamic mode (reads the table(s) based on each input message). The operator can take one input (Input Port: “input“, type: “table“) and has two output ports either for a successful query containing the queried rows (Output Port: “success“, type: “message.table“) or in case of an error (Input Port: “error“, type: “message.error“). If the operator is configured to static mode, the read mode can be set to “Once” (table is read once) or to “Poll” (operator periodically polls the table for data). Additionally, a batch mode can be configured, to define if the output is broken into separate messages.

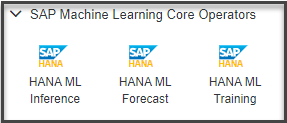

SAP Machine Learning Core Operators

The category “SAP Machine Learning Core Operators” comprises modeler operators and graph templates that are required for creating machine learning pipelines in the ML Scenario Manager. The topic of Machine Learning will not be discussed in depth here. However, it should be mentioned that the operators “HANA ML Inference“, “HANA ML Forecast” and “HANA ML Training” connect to a HANA database and apply algorithms of the libraries SAP HANA Predictive Analytics Library (PAL) or the SAP HANA Automated Predictive Library (APL).

2. Examples

This section will show simple examples of the SAP HANA integration for beginners. The first example shows how to create an SAP HANA table and fill it with data. The second example reads data from an SAP HANA table into DI.

NOTE: The following examples are demonstrated with the connection to an SAP Data Warehouse Cloud (DWC) instance. There are no specific DI operators for DWC so far. Most of the DI operators for SAP HANA shown here (especially those in the “Connectivity” and “SAP HANA” categories) can be used in exactly the same way for DWC connections.

Example 1: Create an SAP HANA table and ingest data

Our use case is to create a new table on an SAP HANA database, read a csv file and write the data from the csv file into the newly created SAP HANA table.

The graph will look like this:

Note: The “Terminal” operator is not mandatory. It is added to the “error” port of the “Write HANA Table” operator to output a possible error.

The “Initialize HANA Table” operator will be connected to the HANA database. In this example we create a static new column table “ZTEST_TABLE_WITH_PK_TH“.

The table has set the columns KUNNR, NAME1, LAND1 and ORT01 in the properties. The primary key property is set to the column KUNNR.

In the “Read File” operator a csv file path is set up. The file has matching column headers with the newly created HANA table.

In the next step, the “Write HANA Table” operator is set up. In this example the operator is set up statically. The table name must be entered in this style: “SCHEMA_NAME”.”TABLE_NAME”.

Now the operator can be executed. After that, we check how the table and the data will appear in the DWC schema.

You can see that the table has been created within DWC and the three rows of the csv file have been inserted into the table. Awesome!

Note: The same behavior could have been set up with a single “SAP HANA Client” operator instead of the “Initialize HANA Table” operator and the “Write HANA Table” operator.

Example 2: Read data from an SAP HANA table

Our use case is to read from an SAP HANA table in batches and to wiretap the connection.

The graph will simply look like this:

We will configure the “Read HANA Table” operator in a static way, so that no input message is required. We connect the operator to the SAP HANA (or here DWC) connection and enter the table name (incl. schema name), as well as the columns that we want to read. We set the “Read mode” to “Poll” and the “Poll period” to “50s“. This means we will continuously read from the table every 50 seconds. The “Batching” mode is set to “Fixed size” and the “Batch size (rows)” is set to “1“. This means that the table will be written to the output port “success” into batches of single rows.

Attention: With this set up the pipeline will run infinitely if you do not stop it manually.

Now let’s execute the graph and check the wiretap:

You can see that the rows are sent correctly in single batches and within a 50 second timeframe. Great job!

No comments:

Post a Comment