SAP has always helped its customers run at their best, and this pandemic situation is no different.

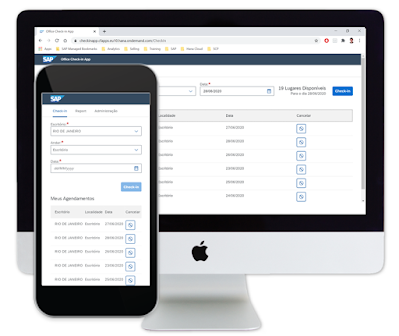

With that in mind, we, the Brazilian Presales Platform & Technology team, proudly developed an application that controls office access as part of the effort toward a safe return to normality. It is a very simple application whose source code will be distributed freely to customers. The goal of the app is to ensure that the office never exceeds its available capacity and that employees can practice social distancing, in a very simple manner and at an extremely low implementation cost. The application flow is shown in the picture below:

Application Areas

The application is divided into three areas: Check-in, Report, and Administration. The check-in area is for general use, while the report and administration areas are available to the system administrator.

Administration

This is where the application is configured.

◉ Office administration

◉ In the office administration, it is possible to view, edit, and create offices, and to activate them for check-in.

◉ Location administration

◉ In the location administration, it is possible to view, edit, and create locations, and to activate them for check-in. Furthermore, it is possible to assign access controllers to each location.

◉ Administrator administration

◉ In this area it is possible to view, edit, and create access controllers, who will receive a daily list of the users that checked in at the locations they administer.

Check-in

This is the area where users see the available locations and the available capacity for the selected dates and locations, and where they perform the check-in.

Report

Finally, we have the report area, where the administrator can view occupancy rates by date and location.

Architecture

To build this application, we used SAP HANA Cloud as the database, React.js as the front end framework, and the SAP Cloud Application Programming Model with Node.js as the back end framework. We used Azure Active Directory as the identity provider, simply as an example of what is possible when working with the SAP Cloud Application Programming Model and the SAP Cloud Platform, Cloud Foundry environment.

Prerequisites

One of the beauties of the SAP Cloud Platform, Cloud Foundry environment is the ability to use the development environment of your choice. In my case, I used Visual Studio Code to develop locally on my machine, GitHub as the code repository, SAP Business Application Studio to create the services on the SAP Cloud Platform (using the CLI it provides), and an SAP Cloud Platform, Cloud Foundry environment instance. Advanced JavaScript knowledge and basic knowledge of the SAP Cloud Application Programming Model are desirable.

Visual Studio Code

In Visual Studio Code, we will use Node.js and npm to install and manage our dependencies and CLIs.

GitHub

The front end repository and the back end repository are hosted on GitHub. Please clone them to the desired environment.

SAP Business Application Studio

SAP Business Application Studio is the next generation of the SAP Web IDE. It comes out of the box with a predefined set of development environments (Dev-Spaces, virtual machines installed on the cloud) and gives developers more control over their environment and code. I used it to create the services on the SAP Cloud Platform, as the Dev-Spaces are already configured to use the Cloud Foundry CLI, so we can get our application up and running quickly without any installation headaches.

SAP Cloud Platform

We will deploy an SAP HANA Deployment Infrastructure (HDI) container service in SAP HANA Cloud. We will also deploy an SRV application to host our API and a Cloud Foundry application to host our front end.

SAP Cloud Application Programming Model Back End

We used the SAP Cloud Application Programming Model framework on the back end. In this blog post we cover only the aspects of the framework that are relevant to the developed application.

Core Data Services

Core Data Services is used in this project to declare the service definitions and the data model. The service definitions are found in the SRV folder and the data model in the DB folder.

Data Model

We created 7 entities for this application:

◉ Users

◉ Used to identify the users who make the check-ins.

◉ SysAdmins

◉ Used to declare the users that will configure the system.

◉ CheckIn

◉ Used to store the check-ins. Each check-in is associated with the user who made it and with the location and office where it was made.

◉ Offices

◉ Used to store the offices that will be available for check-in.

◉ Floors

◉ Stores the locations that will be available for check-in, along with their capacity. Each location is associated with an office.

◉ SecurityGuards

◉ Stores the access controllers for each location. It is possible to configure a job that sends these users a daily e-mail listing the users who checked in at the locations they administer.

◉ FloorSecurityGuards

◉ Many-to-many association between the Floors entity and the SecurityGuards entity.

Furthermore, we created 7 views.

◉ Administrators

◉ Retrieves data associated with the FloorSecurityGuards entity.

◉ FloorsList

◉ Retrieves data associated with the Floors entity.

◉ FloorSecurityGuardsView

◉ Retrieves data related to the FloorSecurityGuards entity, used to send the e-mails.

◉ CheckInList

◉ Retrieves data associated with the CheckIn entity.

◉ AvailableCapacity

◉ This view retrieves the available capacity for the location. It uses a subtraction (just as it would be used in “normal SQL”).

◉ OccupiedCapacity

◉ This view retrieves the occupied capacity by location for reporting purposes. It uses a count function (just as it would be used in “normal SQL”).

◉ DailyCheckInList

◉ Retrieves data associated with the CheckIn entity, used to send the e-mails to the access controllers.
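To make the two capacity views more concrete, here is a minimal JavaScript sketch of the same arithmetic on in-memory rows. It is only an illustration, not the actual view definitions: the sample data and the field names (`capacity`, `floor_ID`, `date`, `user_ID`) are assumptions.

```javascript
// Hypothetical in-memory rows; the field names are assumptions for illustration.
const floors = [
  { ID: 'F1', name: '1st floor', capacity: 3 },
  { ID: 'F2', name: '2nd floor', capacity: 2 }
]
const checkIns = [
  { floor_ID: 'F1', date: '2020-09-01', user_ID: 'alice' },
  { floor_ID: 'F1', date: '2020-09-01', user_ID: 'bob' },
  { floor_ID: 'F2', date: '2020-09-01', user_ID: 'carol' },
  { floor_ID: 'F1', date: '2020-09-02', user_ID: 'alice' }
]

// Counterpart of a COUNT grouped by floor for one date (OccupiedCapacity).
function occupiedCapacity(allCheckIns, date) {
  const counts = {}
  for (const checkIn of allCheckIns) {
    if (checkIn.date !== date) continue
    counts[checkIn.floor_ID] = (counts[checkIn.floor_ID] || 0) + 1
  }
  return counts
}

// Counterpart of "capacity minus occupied" per floor (AvailableCapacity).
function availableCapacity(allFloors, allCheckIns, date) {
  const occupied = occupiedCapacity(allCheckIns, date)
  return allFloors.map((floor) => ({
    floor_ID: floor.ID,
    available: floor.capacity - (occupied[floor.ID] || 0)
  }))
}

console.log(availableCapacity(floors, checkIns, '2020-09-01'))
// [ { floor_ID: 'F1', available: 1 }, { floor_ID: 'F2', available: 1 } ]
```

In the application this calculation happens in the database views, so the front end only reads the result.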

Services

Once we have created our data model, we can define services that retrieve the data from it and expose it through an OData endpoint. The SAP Cloud Application Programming Model bootstraps our services into a Node.js Express app and creates the routes for them, so we don't have to write any JavaScript code to create an OData API (although we will write some JavaScript code later to add middleware to our endpoints). The services are defined in Core Data Services files in the SRV folder. We created two services for this application, one that is accessed only by system administrators and one for both general users and system administrators:

◉ AdminService

◉ Provides the services for the reporting area and the administrative area.

◉ CatalogService

◉ Provides the services for the check-in area.
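Because the services are exposed as OData, a client can filter and limit the results through query options in the URL. The helper below is a small sketch of how such a URL could be assembled. The `/catalog` path matches the route used later in server.js, but the host, the idea that `AvailableCapacity` is exposed by CatalogService, and the `floor_ID` field are assumptions for illustration.

```javascript
// Builds an OData query URL from a few common query options.
// The function name and its parameters are hypothetical helpers, not part of CAP.
function buildODataUrl(baseUrl, servicePath, entity, options = {}) {
  const params = []
  if (options.filter) {
    params.push('$filter=' + encodeURIComponent(options.filter))
  }
  if (options.select) {
    params.push('$select=' + options.select.map((field) => encodeURIComponent(field)).join(','))
  }
  if (options.top !== undefined) {
    params.push('$top=' + options.top)
  }
  const query = params.length > 0 ? '?' + params.join('&') : ''
  return `${baseUrl}/${servicePath}/${entity}${query}`
}

console.log(buildODataUrl('https://example.com', 'catalog', 'AvailableCapacity', {
  filter: "floor_ID eq 'F1'",
  top: 10
}))
// https://example.com/catalog/AvailableCapacity?$filter=floor_ID%20eq%20'F1'&$top=10
```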

Once the services are created, you should be able to see their endpoints at the root URL of our API, as shown below:

Middleware

With our services up and running, we can get our hands dirty with some JavaScript code (don't worry, it won't be a lot, I promise). In this case, we have 3 objectives with the JavaScript code:

◉ Field validation on check-in insert, to prevent the user from inserting duplicate check-ins.

◉ Authentication and permissions.

◉ Custom endpoint to send e-mails.

Event Handlers

It is possible to add custom logic to the created services using event handlers. For example, in this application we added an event handler that runs before CAP inserts a check-in and blocks duplicate entries, as you can see in the code below:

    const cds = require('@sap/cds')

    module.exports = (srv) => {
        const { CheckIn, Users } = cds.entities('my.checkinapi')

        srv.before('CREATE', 'CheckIn', async (req) => {
            const checkIn = req.data

            // Create the user on their first check-in
            const user = await cds.run(SELECT.from(Users).where({ ID: { '=': checkIn.user.ID } }))
            if (user.length === 0) {
                await cds.run(INSERT.into(Users).entries(checkIn.user))
            }

            // Reject the request if this user already checked in at this location on this date
            const existsCheckIn = await cds.run(SELECT.from(CheckIn).where({ floor_ID: { '=': checkIn.floor_ID }, date: { '=': checkIn.date }, user_ID: { '=': checkIn.user.ID } }))
            if (existsCheckIn.length > 0) {
                req.reject(400, 'A check-in already exists for this location and date.')
            } else {
                // Store only the foreign key instead of the nested user object
                req.data.user_ID = checkIn.user.ID
                delete req.data.user
            }
        })
    }
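The rule enforced by this handler is that the combination of user, location, and date must be unique. As a rough sketch, the same rule can be expressed as a pure function over an in-memory set, which is handy for unit testing the logic without a database. The names `checkInKey` and `createCheckInRegistry` are hypothetical and not part of the application.

```javascript
// Builds a lookup key from the three fields that must be unique together.
function checkInKey(checkIn) {
  return [checkIn.user_ID, checkIn.floor_ID, checkIn.date].join('|')
}

// Returns a register function that remembers the check-ins it has accepted.
function createCheckInRegistry() {
  const seen = new Set()
  return function register(checkIn) {
    const key = checkInKey(checkIn)
    if (seen.has(key)) {
      return { ok: false, status: 400, message: 'A check-in already exists for this location and date.' }
    }
    seen.add(key)
    return { ok: true }
  }
}

const register = createCheckInRegistry()
console.log(register({ user_ID: 'alice', floor_ID: 'F1', date: '2020-09-01' })) // { ok: true }
console.log(register({ user_ID: 'alice', floor_ID: 'F1', date: '2020-09-01' }).ok) // false
```

A check in application code like this one can still let duplicates through when two requests arrive at the same time, so a unique constraint on the database table would be a sturdier complement.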

Support for local ./server.js

In this application we used Azure Active Directory to authenticate users, as a demonstration of the flexibility of the SAP Cloud Application Programming Model. Before we continue, please note that it would also be possible to use the User Account and Authentication (UAA) service to authenticate users through JSON Web Tokens, a strategy that is used in our application as well. Moving on to our authentication middleware: to implement this strategy, we need to validate every request to our API. Although we could add an event handler to each endpoint to validate the access token, that would not be practical. Instead, it is possible to add a local server.js and listen to the app events. In this scenario, we added our middleware in the bootstrap event, as seen in the code below:

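    const cds = require('@sap/cds')
    const cors = require('cors')
    const Auth = require('./auth')
    const AdminAuth = require('./adminAuth')

    cds.on('bootstrap', (app) => {
        // add your own middleware before any by cds are added
        app.use(cors())
        app.use((req, res, next) => {
            res.setHeader('Access-Control-Allow-Origin', '*');
            next();
        });

        // Token validation for the service routes
        app.use('/catalog', Auth)
        app.use('/admin', AdminAuth.authenticate)

        // Custom login route that returns the access token
        app.get('/api/login', AdminAuth.login)
    })

    // Delegate to the default CAP server
    module.exports = cds.server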

In this code, added to the server.js file in the root folder of our API, we take advantage of the Express app bootstrapped by CAP to add CORS support and the Access-Control-Allow-Origin header. We also add token validation for our catalog and admin routes (the endpoints for our services) and a custom login route, which identifies the logged-in user and returns an access token for the remaining routes.

◉ Login method (adminAuth.js)

◉ Validates that the user is logged in to Azure Active Directory and checks whether they are a system administrator. It returns an access token that is used to validate access to the remaining endpoints. It uses the jsonwebtoken and azure-ad-jwt dependencies, which can be installed with npm. Code below:

    const cds = require('@sap/cds')
    const jwt = require('jsonwebtoken')
    const aad = require('azure-ad-jwt')
    const env = require('./variables')

    exports.login = (req, res, next) => {
        if (req.method === 'OPTIONS') {
            // Let CORS preflight requests through
            next()
        } else {
            const token = req.headers.idtoken
            if (!token) {
                return res.status(403).send({ errors: ['No token provided.'] })
            }
            // Validate the Azure AD token sent by the front end
            aad.verify(token, env.variables.AD_SECRET, async (err, decoded) => {
                if (err) {
                    return res.status(403).send({
                        errors: ['Failed to authenticate token.', err],
                        sysAdmin: false
                    })
                } else {
                    // Check whether the logged-in user is registered as a system administrator
                    const { SysAdmins } = cds.entities('my.checkinapi')
                    const sysAdmin = await cds.run(SELECT.from(SysAdmins).where({ email: { '=': decoded.preferred_username } }))
                    if (sysAdmin.length > 0) {
                        // Issue our own access token for the admin routes
                        const accessToken = jwt.sign({ sysAdmin: sysAdmin[0].email }, env.variables.AD_SECRET)
                        res.json({ sysAdmin: sysAdmin[0].email, token: accessToken })
                    } else {
                        res.json({ sysAdmin: false })
                    }
                }
            })
        }
    }

◉ Authenticate method (adminAuth.js)

◉ This method will validate the access token and check if the user is a system administrator. If the decoded token indicates that the user is not an administrator, access will be denied. It uses the jsonwebtoken dependency. Code below:

    exports.authenticate = (req, res, next) => {
        if (req.method === 'OPTIONS') {
            // Let CORS preflight requests through
            next()
        } else {
            const token = req.headers.idtoken
            if (!token) {
                return res.status(403).send({ errors: ['No token provided.'] })
            }
            // Validate the access token issued by the login method
            jwt.verify(token, env.variables.AD_SECRET, (err, decoded) => {
                if (err) {
                    return res.status(403).send({
                        errors: ['Failed to authenticate token.', err]
                    })
                } else {
                    if (decoded.sysAdmin) {
                        next()
                    } else {
                        return res.status(403).send({
                            errors: ['Invalid Token.']
                        })
                    }
                }
            })
        }
    }
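To show what signing and verifying an access token involves conceptually, here is a minimal sketch of an HS256 token built with Node's built-in crypto module. It is an illustration only, not the jsonwebtoken implementation: it skips things that library handles, such as expiry claims and checking the header algorithm, and the function names `signToken`, `verifyToken`, and `isSysAdmin` are hypothetical.

```javascript
const crypto = require('crypto')

// Encodes a JSON-serializable value as base64url, the encoding used by token segments.
function encodeSegment(value) {
  return Buffer.from(JSON.stringify(value)).toString('base64url')
}

// Signs a payload with HMAC-SHA256 (the HS256 algorithm).
function signToken(payload, secret) {
  const header = { alg: 'HS256', typ: 'JWT' }
  const signingInput = `${encodeSegment(header)}.${encodeSegment(payload)}`
  const signature = crypto.createHmac('sha256', secret).update(signingInput).digest('base64url')
  return `${signingInput}.${signature}`
}

// Returns the decoded payload when the signature matches, otherwise null.
function verifyToken(token, secret) {
  const parts = String(token).split('.')
  if (parts.length !== 3) return null
  const [header, payload, signature] = parts
  const expected = crypto.createHmac('sha256', secret).update(`${header}.${payload}`).digest()
  const actual = Buffer.from(signature, 'base64url')
  // Compare in constant time; the lengths must match before timingSafeEqual is called
  if (actual.length !== expected.length || !crypto.timingSafeEqual(actual, expected)) return null
  try {
    return JSON.parse(Buffer.from(payload, 'base64url').toString('utf8'))
  } catch {
    return null
  }
}

// Mirrors the check in the authenticate method: only tokens carrying sysAdmin pass.
function isSysAdmin(token, secret) {
  const decoded = verifyToken(token, secret)
  return Boolean(decoded && decoded.sysAdmin)
}

const token = signToken({ sysAdmin: 'admin@example.com' }, 'demo-secret')
console.log(isSysAdmin(token, 'demo-secret')) // true
console.log(isSysAdmin(token, 'wrong-secret')) // false
```

The point of the sketch is that anyone holding the secret can mint a valid token, which is why the secret must be kept out of the repository.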

◉ Auth method (auth.js)

◉ This method will authenticate users for our non-administrative area of the API (catalog service). It will simply validate the access token. It uses the azure-ad-jwt dependency. Code below:

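    const aad = require('azure-ad-jwt')
    const env = require('./variables')

    module.exports = (req, res, next) => {
        if (req.method === 'OPTIONS') {
            // Let CORS preflight requests through
            next()
        } else {
            const token = req.headers.idtoken
            if (!token) {
                return res.status(403).send({ errors: ['No token provided.'] })
            }
            // Validate the Azure AD token sent by the front end
            aad.verify(token, env.variables.AD_SECRET, (err, decoded) => {
                if (err) {
                    return res.status(403).send({
                        errors: ['Failed to authenticate token.', err]
                    })
                } else {
                    // Make the decoded token available to later handlers
                    req.decoded = decoded
                    next()
                }
            })
        }
    }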

A very important reminder: the token secret should never be stored in a code repository. In Node.js you can set environment variables, or simply keep these variables in a file that is not tracked by git (the approach used in this scenario to simplify the deployment to the SAP Cloud Platform). This file must be required by the files that validate the token. Code below:

    exports.variables = {
        AD_SECRET: '<YOUR_SECRET>'
    }
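For the environment-variable alternative mentioned above, a sketch along these lines could replace the hard-coded file. It assumes the secret is provided in an environment variable named `AD_SECRET`; the `loadVariables` helper is hypothetical and fails fast when the variable is missing.

```javascript
// Reads the settings from environment variables and fails fast when one is missing.
// The env parameter defaults to process.env and can be replaced in tests.
function loadVariables(env = process.env) {
  const required = ['AD_SECRET']
  const missing = required.filter((name) => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  return { AD_SECRET: env.AD_SECRET }
}

// In variables.js this could be used as:
//   exports.variables = loadVariables()
console.log(loadVariables({ AD_SECRET: 'demo-secret' }))
// { AD_SECRET: 'demo-secret' }
```

On Cloud Foundry, the value can be set on the deployed application with `cf set-env`, followed by a restage so that the application picks it up.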

◉ Custom endpoint to send e-mails (server.js)

◉ We also created a custom endpoint that sends a daily e-mail to the access controllers of each location, listing the employees who checked in for that day, grouped by location. Code below:

    // Part of the cds.on('bootstrap', (app) => { ... }) handler in server.js, which also needs:
    //   const moment = require('moment')
    //   const nodemailer = require('nodemailer')
    //   const env = require('./variables')
    app.get('/api/checkInList', async (req, res) => {
        const { FloorSecurityGuardsView, DailyCheckInList } = cds.entities('my.checkinapi')
        const cellStyle = 'border: 1px solid black;border-collapse: collapse;'

        // Load today's check-ins and group them by floor
        const dailyCheckIns = await cds.run(SELECT.from(DailyCheckInList).where({ date: { '=': moment().format('YYYY-MM-DD') } }))
        const dailyCheckInsFloors = {}
        dailyCheckIns.forEach((checkIn) => {
            if (dailyCheckInsFloors[checkIn.floorID] === undefined) {
                dailyCheckInsFloors[checkIn.floorID] = [checkIn]
            } else {
                dailyCheckInsFloors[checkIn.floorID].push(checkIn)
            }
        })

        // Send one e-mail per floor to the access controllers of that floor
        Object.keys(dailyCheckInsFloors).forEach(async (id) => {
            const securityGuards = await cds.run(SELECT.from(FloorSecurityGuardsView).where({ floor_ID: { '=': id } }))
            if (securityGuards.length > 0) {
                const transporter = nodemailer.createTransport({
                    service: 'gmail',
                    auth: {
                        user: env.variables.MAIL_PROVIDER,
                        pass: env.variables.MAIL_AUTH
                    }
                })
                const emails = securityGuards.map((securityGuard) => securityGuard.securityGuardEmail)
                const office = securityGuards[0].office
                const floor = securityGuards[0].floor

                transporter.sendMail({
                    from: env.variables.MAIL_PROVIDER,
                    to: emails, // nodemailer accepts an array of recipients
                    subject: `Check-in list for ${moment().format('DD/MM/YYYY')}. Location: ${office} - ${floor}`,
                    html: `<h4>${moment().format('DD/MM/YYYY')} ${office} - ${floor}</h4>` +
                        `<table style="${cellStyle}"><thead><tr><th style="${cellStyle}">Name</th><th style="${cellStyle}">E-mail</th></tr></thead><tbody>` +
                        dailyCheckInsFloors[id].map((checkIn) => `<tr><td style="${cellStyle}">${checkIn.userName}</td><td style="${cellStyle}">${checkIn.userEmail}</td></tr>`).join('') +
                        '</tbody></table>'
                }, function (error, info) {
                    if (error) {
                        console.log(error)
                    } else {
                        console.log('Email sent: ' + info.response)
                    }
                })
            }
        })

        // The e-mails are sent in the background; the response does not wait for them
        res.status(200).send('success')
    })
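The grouping and HTML-building steps of this endpoint can also be pulled out into small pure functions, which makes them easy to test without a mail server. The sketch below is one possible way to do that; the function names are hypothetical, and the HTML escaping is an addition that the endpoint above does not perform.

```javascript
// Groups check-ins by floor ID, as the endpoint does before sending e-mails.
function groupByFloor(checkIns) {
  const byFloor = {}
  for (const checkIn of checkIns) {
    if (!byFloor[checkIn.floorID]) byFloor[checkIn.floorID] = []
    byFloor[checkIn.floorID].push(checkIn)
  }
  return byFloor
}

// Escapes characters that have a special meaning in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
}

// Renders one table row per check-in, escaping user-provided values.
function renderRows(checkIns) {
  return checkIns
    .map((checkIn) => `<tr><td>${escapeHtml(checkIn.userName)}</td><td>${escapeHtml(checkIn.userEmail)}</td></tr>`)
    .join('')
}

const grouped = groupByFloor([
  { floorID: 'F1', userName: 'Alice', userEmail: 'alice@example.com' },
  { floorID: 'F2', userName: 'Bob', userEmail: 'bob@example.com' },
  { floorID: 'F1', userName: 'Carol', userEmail: 'carol@example.com' }
])
console.log(Object.keys(grouped)) // [ 'F1', 'F2' ]
console.log(renderRows(grouped.F2))
// <tr><td>Bob</td><td>bob@example.com</td></tr>
```

Escaping matters here because user names come from user input and end up inside an HTML e-mail.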

And that's enough JavaScript code for now (we still have to add the front end, but React.js is not the focus of this post). One last reminder: the auth.js, adminAuth.js, and variables.js files must be in the root folder. Otherwise, their references won't be found after deployment to the SAP Cloud Platform, Cloud Foundry environment, and the application will crash (we don't want this to happen, do we?).

React.js framework front end

React.js is one of the most popular front end frameworks available right now. It is great for controlling the application's state and creating componentized assets. The React front end uses the endpoints we created to access the application data. For styling we used the UI5 Web Components for React library; to learn more about it, please see the official documentation.

Application front end development

The application front end was developed using React.js, as mentioned. This is not the focus of this blog post (please feel free to contact me if you want more information about the React.js development). However, I will show how authentication was done in the front end, as it is essential for the token validation on the back end to work properly, and I will also say a few words about the awesome UI5 Web Components for React library.

MSAL.js and Azure Active Directory

First, we need to register our application in the Azure Active Directory portal. It is possible to create a free account, which serves our purpose just fine. After creating the free account, please follow this detailed step-by-step guide that shows how to configure the app in Azure AD. The last step of that tutorial provides a client secret. Please copy it, since it will never be shown again; this is the secret key that we used on the back end to validate the login.

Now that we have configured the app in Azure AD, we are going to write some more JavaScript code to use MSAL.js on the front end. MSAL.js (Microsoft Authentication Library) is a JavaScript library that enables developers to acquire tokens from the Microsoft identity platform endpoint in order to access secured web APIs. To use it in our application, we install msal with npm and import it into our app; the code needed to add it can be found in the msal folder of the front end repository. In the MsalConfig.jsx file, you will need to add your own clientId from the Azure AD app.
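Once the front end has a token, it has to send it to the back end in the `idtoken` header, since that is where the middleware shown earlier reads it from. The sketch below shows one way such a request could be built. The `/catalog/CheckIn` path and the payload fields are assumptions based on the back end code, and the function names are hypothetical.

```javascript
// Builds the request for creating a check-in.
// The URL path and payload shape are assumptions based on the back end code.
function buildCheckInRequest(apiBaseUrl, idToken, checkIn) {
  return {
    url: `${apiBaseUrl}/catalog/CheckIn`,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        idtoken: idToken // read by the back end as req.headers.idtoken
      },
      body: JSON.stringify(checkIn)
    }
  }
}

// Sends the request with fetch (global in browsers and in Node.js 18+).
// A different fetch implementation can be passed in for testing.
async function createCheckIn(apiBaseUrl, idToken, checkIn, fetchImpl = fetch) {
  const { url, options } = buildCheckInRequest(apiBaseUrl, idToken, checkIn)
  const response = await fetchImpl(url, options)
  if (!response.ok) {
    throw new Error(`Check-in failed with status ${response.status}`)
  }
  return response.json()
}

const request = buildCheckInRequest('https://example.com', '<token from MSAL>', {
  user: { ID: 'alice@example.com' },
  floor_ID: 'F1',
  date: '2020-09-01'
})
console.log(request.url) // https://example.com/catalog/CheckIn
```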

UI5 Web Components for React

UI5 Web Components for React is a wrapper around UI5 Web Components. The library was developed to make UI5 controls easier to consume. It is aimed at developers who want the flexibility to use plain HTML tags or arbitrary JS frameworks (exactly our case).

SAP Cloud Platform Deploy

After we finish the development, we want to deploy our application to the SAP Cloud Platform. In my case, I pulled the code from the GitHub repository into SAP Business Application Studio because, as I mentioned before, this environment already has all the CLIs and plugins that make deployment to the SAP Cloud Platform easier. Finally, before we begin the deployment, we need to log in to the SAP Cloud Platform, Cloud Foundry environment that we want to use. Please use the command below to log in:

cf login

Back end Deployment

Database Deployment

We will deploy our database to SAP HANA Cloud. However, SAP HANA Cloud currently does not support Core Data Services. Fortunately, the SAP Cloud Application Programming Model can transpile the Core Data Services files into hdbtable files (for example). For this to happen, before we run the deploy command, we need to specify in our package.json file that we want to deploy in the hdbtable format, as shown below:

    "cds": {
        "hana": { "deploy-format": "hdbtable" }
    }

Then we run the deploy command:

    cds deploy --to hana

This will deploy just the database parts of the project to an SAP HANA Cloud instance. If an hdi-shared service for the project was not created, it will create the service and an HDI container.

Service Deployment

Now that we have deployed our database, we can deploy our service. First, we will add an mta.yaml file to our project with the following command:

cds add mta

Then, we will build the MTA file and generate a .mtar file with the command below:

mbt build -t ./

And finally, we will deploy the .mtar file with the command shown below:

cf deploy <.mtar file>

After the command has finished, check in your Cloud Foundry environment that the application was created and is up and running.

Front end deployment

The front end deployment is much simpler. We simply issue the command below in the CLI, and an application will be created with the name chosen in the command and the start command.

cf push <your_app_name> -c "npm start"
