This blog I wanted to show you an end to end example of getting unstructured JSON data, loading it into SAP HANA, enriching with geo-spatial attributes and exposing to SAP Analytics Cloud for further analysis.

The problem with most tutorials usually – they are focused on some randomly generated abstract data (like SFLIGHT or EPM Model data) and for some people this doesn’t really mean much, so I thought a real life example of a real up to date data analysis would be very beneficial for everyone.

Now, there are many ways to automate most of the tasks shown in this post, but I just wanted to show quick and simple example of data consumption, transformation and analysis which can be done in a few hours over the weekend. Now let’s begin.

If we go to a certain petition page we would see a page which looks like this:

The problem with most tutorials usually – they are focused on some randomly generated abstract data (like SFLIGHT or EPM Model data) and for some people this doesn’t really mean much, so I thought a real life example of a real up to date data analysis would be very beneficial for everyone.

Now, there are many ways to automate most of the tasks shown in this post, but I just wanted to show quick and simple example of data consumption, transformation and analysis which can be done in a few hours over the weekend. Now let’s begin.

If we go to a certain petition page we would see a page which looks like this:

That doesn’t mean much and we can’t easily extract any meaningful data. But there is a way – if we change the link to https://petition.parliament.uk/petitions/241584.json we would be able to see the data in JSON format (quite unstructured on the screenshot):

There is a JSON view plugin for Chrome to help us see the formatted version:

Install the extension and reload the page:

Ok, now we are getting somewhere. The next step is – how do we get this data into our SAP HANA system? I decided to create a set of SQL statements to create the necessary unstructured table and insert data into it. To do that, I firstly had to find a way to easily create the SQL DDL and DML statements without going manually one by one through each line of JSON file.

There is another great online service for that: Sqlify.io – it allows you to feed it JSON or CSV files and creates a set of DDL and DML statements for you, which after some tweaking can be executed in SAP HANA system.

Let’s go to SQLify.io and enter our link from above:

Click “Convert to SQL” and see the result:

As I mentioned, we will end up with the table of unstructured data, which is fine with us as we would then create the necessary HANA Calculation Views on that table. We will just go ahead and remove the unnecessary fields. After tiding up we will end up with just a handful of meaningful fields:

Click “Save schema and continue”. Save the resulting SQL and open it locally (I use VS Code for any SQL, JS and sometimes ABAP development work):

It looks great (and perfectly unstructured), but hardly ready for SAP HANA SQL. What needs to be done is a little makeover which results in the following script which we can run in HANA. I have created a new schema for this blog post, so that everything is neatly contained in one place (the link to the full script can be found at the end of this post):

Open SQL console of your HANA DB (use the default tenant or whichever tenant you like) and run the SQL script to create petitions data table with all the values.

Check the created tables and values:

Ok, so far so good. Now, in order for us to have Geo-analysis we need to have geo coordinates table somewhere, I have found the table with countries and longitude and latitude details online and also converted it to the SQL script for HANA:

Run the script and check the resulting table:

All good with Countries. A bit harder with UK Constituencies coordinates. I went to the Office for National Statistics website and downloaded the full Postcode Directory (a bit of an overkill for this small exercise, but very useful in future). You can download the full archive from here:

http://geoportal.statistics.gov.uk/items/ons-postcode-directory-february-2019

Create the table definition using SQL command:

CREATE TABLE ONSPD_FEB_2019 (

pcon VARCHAR(10) NULL,

lat FLOAT NULL,

long FLOAT NULL

);

After the table is created, go to Eclipse and choose File->Import

Note: I switched to Eclipse here, because the file is massive and SAP HANA Web-based Development Workbench won’t be able to handle it. You can use Eclipse to complete all the steps in this post, if you prefer.

Choose Import data from local file:

Pick up the file from the downloaded archive and make other relevant selections:

Create the mappings and click “Finish” to start the upload (which would take quite a while if you are using the remote HANA system, so go grab some lunch or a cuppa):

After the upload is completed, check the data in the table:

The problem is that ONSPD goes into a finer granularity than we need, so that all the coordinates go way down to Ward and Parish, but we only need one set of coordinates per constituency. Therefore, I have decided to take the distinct value of ONS code from PCON field with average coordinates and use the coordinates of that record for the constituency, which isn’t really precise or ideal, but… There might be an easier way to find the coordinates based on the ONS code from Petitions site, but I haven’t found it and would love to hear your comments on this in the comments section below.

Now, before we proceed, I strongly suggest you check the following SAP Note:

https://launchpad.support.sap.com/#/notes/0002395407

In order to create ST_Geometry Location coordinates the steps above must be completed, otherwise the scripts below would fail.

After you have completed the prerequisites, run the script to create Location tables which are based on the countries and constituencies tables above. It creates the location definitions which can be consumed by SAP Analytics Cloud:

CREATE COLUMN TABLE "SAC_SOURCE"."Countries_Location" (

"Country_Code_LD" VARCHAR(2) PRIMARY KEY, "Location" ST_GEOMETRY(3857));

UPSERT "SAC_SOURCE"."Countries_Location" ("Country_Code_LD")

SELECT "COUNTRY_CODE" FROM "SAC_SOURCE"."COUNTRIES" GROUP BY

"COUNTRY_CODE";

UPDATE "SAC_SOURCE"."Countries_Location"

SET "Location" = new ST_GEOMETRY('POINT(' || "LONGITUDE" || ' ' || "LATITUDE" ||

')', 4326).ST_Transform(3857)

FROM (

SELECT MIN("LATITUDE") "LATITUDE", MIN("LONGITUDE") "LONGITUDE", "COUNTRY_CODE"

FROM "SAC_SOURCE"."COUNTRIES" GROUP BY

"COUNTRY_CODE"),

"SAC_SOURCE"."Countries_Location"

WHERE "COUNTRY_CODE" = "Country_Code_LD";

CREATE COLUMN TABLE "SAC_SOURCE"."Constituencies_Location" (

"ONS_CODE_LD" VARCHAR(10) PRIMARY KEY, "Location" ST_GEOMETRY(3857));

UPSERT "SAC_SOURCE"."Constituencies_Location" ("ONS_CODE_LD")

SELECT DISTINCT "PCON" FROM "SAC_SOURCE"."ONSPD_FEB_2019" WHERE

"PCON" IN ( SELECT "CONSTITUENCY_ONS_CODE" FROM "SAC_SOURCE"."PETITION_241584_DATA" WHERE "CONSTITUENCY_ONS_CODE" <> '' )

GROUP BY

"PCON";

UPDATE "SAC_SOURCE"."Constituencies_Location"

SET "Location" = new ST_GEOMETRY('POINT(' || "LONG" || ' ' || "LAT" ||

')', 4326).ST_Transform(3857)

FROM (

SELECT DISTINCT "PCON", AVG("LAT") "LAT", AVG("LONG") "LONG"

FROM "SAC_SOURCE"."ONSPD_FEB_2019" WHERE

"PCON" IN ( SELECT "CONSTITUENCY_ONS_CODE" FROM "SAC_SOURCE"."PETITION_241584_DATA" WHERE "CONSTITUENCY_ONS_CODE" <> '' ) GROUP BY

"PCON"),

"SAC_SOURCE"."Constituencies_Location"

WHERE "PCON" = "ONS_CODE_LD";

This would create two location supporting tables:

Check the contents of any:

Lovely, just what we are looking for!

Now let’ carry on building 4 very simple HANA Calculation views – 2 scripted and 2 graphical (just to illustrate different options).

Both are very simple containing a single table with just a different output selections:

Add petitions table to the aggregation node:

Map just three required fields from the source:

Set Country_Code as the key in Semantics node:

Save and run:

Create another view similarly by adding Constituency relevant fields:

Set ONS code as the key:

Save and run:

Marvellous.

Now we only need to create location supporting views for Country and Constituency in special package SAP_BOC_SPATIAL (this is very important).

I have decided to create them as scripted CVs to just show how they look.

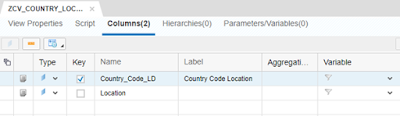

The first one is Country Location:

Create two columns on the right and paste the code:

Set Country_Code_LD as the key and run the view:

Great. Define the second scripted view ZCV_CONSTITUENCY_LOCATION:

Set ONS_CODE_LD to key and run the view:

Okay, job’s done on HANA side. We now have 4 views and they are ready to be consumed by SAP Analytics Cloud:

SAP Analytics Cloud

We will be creating Live HANA connection from SAC to our tenant, if you don’t have it yet, it’s not that trivial to set up (this involves SSL).

I have created the Live connection already so we can proceed.

Go to your SAC tenant and create new model:

Select “Get data from a datasource” and pick up your HANA Live connection:

Click on Data Source selector and choose our Country Calculation View:

Call the Model “Signatures_by_country” and confirm.

Check that the measure and dimensions are present:

Now we need to add location information to our model. Remember, we even have the supporting view for that!

Click “Create a location dimension” button:

And select the values as follows:

What we did is we actually told the system where the location information actually resides and ordered system to join that location info with our model based on the relationship between COUNTRY_CODE and COUNTRY_CODE_LD (Country Code Location).

Confirm and save the model.

Now before we proceed further, let’s create another model “Signatures_by_constituency” and add the relevant views to it – repeat all the previous steps with the following selections:

Map the location view like this:

Confirm and save the model.

Now let’s get to the cherry on the cake (finally!). Create new story:

With the canvas page:

Add Geo Map to canvas:

You can leave it light grey or choose many options for the base layer:

I chose “Streets” because it’s more colourful! Add new layer, call it “World” and add our “Country” model as the data source:

Switch layer to “Heat map” and add our Location dimension and Signatures as the measures:

Confirm, and see the results right away!

Play with different modes of maps until you’ve got the view that is most suitable for your analysis. For example, switch map to “Bubble layer”. It gives you the details when you mouse over:

Now go and create another Layer and call it “UK” and choose signatures by constituency:

Zoom in on the UK:

It gives you the heat map based on the UK constituencies now. Neat!

If you zoom out a bit you will see both layers – Bubble Layer showing the World and Heat map showing the UK :

Now we can add another page to our story and select “Grid”:

Insert models to the different pages:

This way we can have the map and two grids with raw data for our analysis:

Save it as the “Petitions story”:

Now you can try and enhance the base model with whatever you like, such as 2016 referendum data per constituency to see the correlations between voters who voted Remain and signatories of the petition to revoke Article 50. Go ahead and play!

Key takeaways and epilogue

◈ SAP Analytics Cloud Story is fully dynamic and based on live date from HANA system. If you change the value in the table the story will update right away.

◈ The end to end example did not use… any BW!

◈ HANA Calculation views can be expanded to read other data, such as referendum, election results, poverty, homelessness figures, etc. and all that extra data can be analysed quickly and efficiently on the fly using the provided example framework.

No comments:

Post a Comment