1. Introduction

In this blog post, we will delve into the concept of Machine Learning (ML) Explainability in SAP HANA Predictive Analysis Library (PAL) and showcase how HANA PAL has seamlessly integrated this feature into various classification and regression algorithms, providing an effective tool for understanding predictive modeling. ML explainability is integral to achieving SAP's ethical AI goals, ensuring fairness, transparency, and trustworthiness in AI systems.

Upon completing this article, your key takeaways will be:

Please note that ML explainability in HANA PAL is not just confined to classification and regression tasks but also extends to time series analysis. We will explore these topics in the following blog post. Stay tuned!

2. ML Explainability

ML Explainability, often intertwined with the concepts of transparency and interpretability, refers to the ability to understand and explain the predictions and decisions made by ML models. It aims to clarify which key features or patterns in the data contribute to specific outcomes.

The necessity for explainability escalates with AI's expanding role in critical sectors of society, where obscure decision-making processes can have significant ramifications. It is essential for fostering trust, advocating fairness, and complying with regulatory standards.

The field of ML explainability is rapidly evolving as researchers in both academia and industry strive to make AI smarter and more reliable. Currently, several techniques are widely employed to enhance the comprehensibility of ML models. These methods are generally divided into two categories: global and local.

Global explainability methods seek to reveal the average behavior of ML models and the overall impact of features. This category encompasses both:

- Model-Specific approaches, which utilize inherently interpretable models like linear regression, logistic regression, and decision trees that are designed to be understandable. For instance, feature importance scores in tree-based models assess how often features are used to make decisions within the tree structure.

- Model-Agnostic approaches that offer flexibility by detaching the explanation from the model itself, utilizing techniques like permutation importance, functional decomposition, and global surrogate models.

In contrast, local explainability methods focus on explaining individual predictions. These methods include Individual Conditional Expectation, Local Surrogate Models (such as LIME, which stands for Local Interpretable Model-agnostic Explanations), SHAP values (SHapley Additive exPlanations), and Counterfactual Explanations.
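To make the idea behind SHAP values more concrete, here is a minimal sketch that computes exact Shapley values for a single prediction by enumerating all feature coalitions. The scoring function `toy_model`, the instance, and the all-zero baseline are hypothetical and chosen only for illustration. Features outside a coalition are simply replaced by their baseline value, which is one common simplification and not necessarily how any particular library handles them.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one instance.

    Features that are not in a coalition take their value from `baseline`.
    The cost grows exponentially with the number of features.
    """
    n = len(instance)
    values = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Shapley weight of a coalition of this size
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                with_i = [instance[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [instance[j] if j in subset else baseline[j]
                             for j in range(n)]
                phi += weight * (predict(with_i) - predict(without_i))
        values.append(phi)
    return values


# Hypothetical scoring function with one interaction term (illustration only)
def toy_model(x):
    years_experience, referred, years_gaps = x
    return (0.1 * years_experience + 0.3 * referred
            - 0.05 * years_gaps + 0.02 * years_experience * referred)


instance = [5, 1, 2]   # hypothetical applicant
baseline = [0, 0, 0]   # hypothetical reference point
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is shared equally between the two features involved. Exact enumeration is only feasible for a handful of features, which is why approximations such as kernel SHAP or model-specific algorithms such as tree SHAP are used in practice.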

3. ML Explainability in PAL

PAL, a key component of SAP HANA's Embedded ML, is designed for data scientists and developers to execute out-of-the-box ML algorithms within HANA SQL Script procedures. This eliminates the need to export data to another environment for processing, thereby reducing data redundancy and enhancing the performance of analytics applications.

In terms of explainability, PAL offers a variety of robust methods for both classification and regression tasks through its Unified Classification, Unified Regression, and AutoML functions. The model explainability is made accessible via the standard AFL SQL interface and the Python/R machine learning client for SAP HANA (hana_ml and hana.ml.r). By offering both local and global explainability methods, PAL ensures that users can choose the level of detail that best suits their needs.

- Local Explainability Methods

- SHAP (SHapley Additive exPlanations) values, inspired by game theory, measure the contribution of each feature to a model's prediction for a specific instance. PAL implements various SHAP computation methods, including linear, kernel, and tree SHAP, tailored for different functions. For example, for tree algorithms such as Decision Tree (DT), RDT, and HGBT, PAL provides tree SHAP and Saabas for computation. PAL also implements kernel SHAP in the context of AutoML pipelines to enhance the interpretability of model outputs.

- Global Explainability Methods

- Permutation Importance: A global model-agnostic method that involves randomly shuffling the values of each feature and measuring the impact on the model's performance during the model training phase. A significant drop in performance after shuffling indicates the importance of a feature.

- Global Surrogate: Within AutoML, after identifying the best pipeline, PAL also provides a Global Surrogate model to explain the pipeline's behavior.

- Tree-Based Feature Importance: A method native to tree-based models like RDT and HGBT that quantifies the importance of features based on how frequently they are used to split nodes within the trees or on the reduction in impurity they achieve.
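The Global Surrogate idea from the list above can be illustrated with a small, self-contained sketch: an opaque model is queried for its predictions, and a much simpler, readable model is fitted to mimic those predictions. Everything here is hypothetical, including the `black_box` function, the grid of inputs, and the choice of a one-split decision stump as the surrogate. It is not how PAL builds its surrogate model, only a sketch of the general technique.

```python
def black_box(row):
    """Hypothetical opaque classifier that we want to explain."""
    years_experience, referred = row
    return 1 if 2 * years_experience + 10 * referred > 15 else 0


def fit_stump_surrogate(rows, targets):
    """Fit a one-split rule 'feature >= threshold' to the black-box outputs.

    Returns (fidelity, feature_index, threshold), where fidelity is the
    fraction of rows on which the surrogate agrees with the black box.
    """
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            preds = [1 if row[feature] >= threshold else 0 for row in rows]
            fidelity = sum(p == t for p, t in zip(preds, targets)) / len(rows)
            if best is None or fidelity > best[0]:
                best = (fidelity, feature, threshold)
    return best


# Hypothetical inputs: 0..10 years of experience, referred or not
rows = [(exp, ref) for exp in range(11) for ref in (0, 1)]
# The surrogate is trained on the black box's predictions, not on true labels
targets = [black_box(row) for row in rows]

fidelity, feature, threshold = fit_stump_surrogate(rows, targets)
print(f"surrogate rule: feature {feature} >= {threshold}, fidelity = {fidelity:.2f}")
```

On this toy grid the stump splits on years of experience at 3 and agrees with the black box on 17 of the 22 rows. The fidelity score matters as much as the rule itself: a surrogate only explains the original model to the extent that it reproduces its predictions.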

4. Explainability Example

4.1 Use case and data

In this section, we use a publicly accessible synthetic recruiting dataset, derived from an example at the [Centre for Data Ethics and Innovation], as a case study to explore HANA PAL ML explainability. All source code uses the Python Machine Learning Client for SAP HANA (hana_ml). Please note that the example code used in this section is only intended to better explain and visualize ML explainability in SAP HANA PAL, not for productive use.

This artificial dataset represents individual job applicants, featuring attributes that relate to their experience, qualifications, and demographics. The same dataset is also used in another blog post of mine on PAL ML fairness. We have identified the following 13 variables (from Column 2 to Column 14) as potentially relevant in an automated recruitment setting. The first column contains IDs, and the last one is the target variable, 'employed_yes'; hence the model predicts whether an applicant will be employed or not.

- ID: ID column

- gender : Female and male, identified as 0 (Female) and 1 (Male)

- ethical_group : Two ethnic groups, identified as 0 (ethnic group X) and 1 (ethnic group Y)

- years_experience : Number of career years relevant to the job

- referred : Did the candidate get referred for this position

- gcse : GCSE results

- a_level : A-level results

- russell_group : Did the candidate attend a Russell Group university

- honours : Did the candidate graduate with an honours degree

- years_volunteer : Years of volunteering experience

- income : Current income

- it_skills : Level of IT skills

- years_gaps : Years of gaps in the CV

- quality_cv : Quality of written CV

- employed_yes : Whether currently employed or not (target variable)

A total of 10,000 instances have been generated, and the dataset has been divided into two dataframes: employ_train_df (80%) and employ_test_df (20%). The first 5 rows of employ_train_df are shown in Fig. 1.

Fig. 1 The first 5 rows of training dataset

4.2 Fitting the Classification ML Model

In the following paragraphs, we will utilize the UnifiedClassification and select the "randomdecisiontree" (RDT) algorithm to showcase the various methods PAL offers for model explainability.

First, we instantiate a 'UnifiedClassification' object "urdt" and train a random decision trees model on the training dataframe employ_train_df. Following this, we use the score() function to evaluate the model's performance. As shown in Fig. 2, the model's overall performance is satisfactory, as indicated by its AUC, accuracy, and precision and recall rates for both classes 0 and 1 in the model report.

>>> from hana_ml.algorithms.pal.unified_classification import UnifiedClassification

>>> features = list(employ_train_df.columns) # copy the column names of the training dataset so the removals below act on the copy

>>> features.remove('ID') # delete key column name

>>> features.remove('employed_yes') # delete label column name

>>> urdt = UnifiedClassification(func='randomdecisiontree', random_state=2024)

>>> urdt.fit(data=employ_train_df, key="ID", label='employed_yes')

>>> score_result = urdt.score(data=employ_test_df, key="ID", top_k_attributions=20, random_state=1)

>>> from hana_ml.visualizers.unified_report import UnifiedReport

>>> UnifiedReport(urdt).build().display()

Fig.2 Model Report

4.3 Local ML Model Explainability

SHAP values can be easily obtained through the predict() and score() functions. The following code demonstrates the use of the predict() method with 'urdt' to obtain the predictive result "predict_result". Figure 3 displays the first two rows of the results, which include a 'SCORE' column for the predicted outcomes and a 'CONFIDENCE' column representing the probability of the predictions. The 'REASON_CODE' column contains a JSON string that details the specific contribution of each feature value, including "attr" for the attribute name, "val" for the SHAP value, and "pct" for the percentage, which represents the contribution's proportion.

When working with tree-based models, the 'attribution_method' parameter offers three options for calculating SHAP values: the default 'tree-shap'; 'saabas', which is designed for large datasets and can provide faster computation; and 'no', which disables the explanation calculation to save computation time when it is not needed.

>>> predict_result = urdt.predict(data=employ_test_df.deselect("employed_yes"), key="ID",
...                               top_k_attributions=20, attribution_method='tree-shap',
...                               random_state=1, verbose=True)

>>> predict_result.head(2).collect()

Fig. 3 Predict Result Dataframe
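Once a row has been collected on the client side, the 'REASON_CODE' string can be inspected with standard JSON tooling. The sketch below assumes the string is a JSON array of objects carrying the "attr", "val", and "pct" keys described above. The sample string and its numbers are invented for illustration and are not taken from the actual prediction result, and `top_contributions` is a hypothetical helper, not part of hana_ml.

```python
import json

# Hypothetical REASON_CODE value; the numbers are invented for illustration
reason_code = (
    '[{"attr": "years_experience", "val": -0.21, "pct": 42.0},'
    ' {"attr": "referred", "val": -0.12, "pct": 24.0},'
    ' {"attr": "gender", "val": -0.08, "pct": 16.0}]'
)


def top_contributions(reason_code_json, k=2):
    """Return the k entries with the largest absolute SHAP value."""
    entries = json.loads(reason_code_json)
    ranked = sorted(entries, key=lambda e: abs(e["val"]), reverse=True)
    return [(e["attr"], e["val"], e["pct"]) for e in ranked[:k]]


for attr, val, pct in top_contributions(reason_code):
    direction = "raises" if val > 0 else "lowers"
    print(f"{attr}: SHAP value {val:+.2f} ({pct:.0f}%), {direction} the score")
```

Sorting by the absolute value keeps strongly negative contributions at the top, which is usually what you want when asking why a particular applicant was scored the way they were.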

For a more convenient examination of SHAP values, we provide a tool - the force plot, which offers a clear visualization of the impact of individual features on a specific prediction. Taking the first row of the prediction data as an example, we can observe that being female (gender = 0), having 3 years of experience (years_experience = 3), and not being referred (referred = 0), all contribute negatively to the likelihood of being hired. Furthermore, by clicking on the '+' sign in front of each row, you can expand to view the detailed force plot for that particular instance (as shown in Figure 4).

>>> from hana_ml.visualizers.shap import ShapleyExplainer

>>> shapley_explainer = ShapleyExplainer(feature_data=employ_test_df.sort('ID').select(features),
...                                      reason_code_data=predict_result.filter('SCORE=1').sort('ID').select('REASON_CODE'))

>>> shapley_explainer.force_plot()

Fig. 4 Force Plot

4.4 Global ML Model Explainability

4.4.1 Permutation Importance Explanations

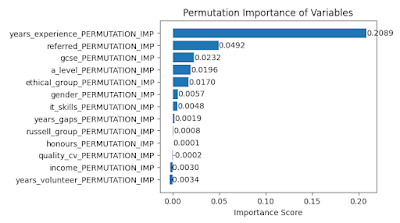

To compute permutation importance, set the parameter permutation_importance=True when fitting the model. The permutation importance scores can then be read directly from the importance_ attribute of the UnifiedClassification object, where each feature name carries the suffix PERMUTATION_IMP (Fig. 5). The scores are visualized in Fig. 6.

>>> urdt_per = UnifiedClassification(func='randomdecisiontree', random_state=2024)

>>> urdt_per.fit(data=employ_train_df, key='ID', label='employed_yes', partition_method='stratified',
...              stratified_column='employed_yes', training_percent=0.8, ntiles=2,
...              permutation_importance=True, permutation_evaluation_metric='accuracy',
...              permutation_n_repeats=10, permutation_seed=2024)

>>> print(urdt_per.importance_.sort('IMPORTANCE', desc=True).collect())

Fig. 5 Permutation Importance Scores

Fig. 6 Bar Plot of Permutation Importance Scores

Fig. 6 shows that the top three features by importance are 'years_experience', 'referred', and 'gcse'. Randomly shuffling the values of these features causes the largest measurable decrease in the model's performance metric, which indicates that they have the most significant impact on the model's predictions.
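For readers who want to see the mechanics behind these scores, the following is a minimal, library-free sketch of permutation importance: shuffle one feature column at a time, re-evaluate accuracy, and average the drop over several repeats. The toy model, the data, and the helper names are hypothetical, and PAL's internal procedure may differ in its details. The defaults for `n_repeats` and `seed` merely echo the values used in the fit() call above.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=10, seed=2024):
    """Mean drop in accuracy after shuffling each feature column in turn."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importances = []
    for f in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)  # break the link between feature f and the label
            shuffled = [r[:f] + [v] + r[f + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that relies on feature 0 only and ignores feature 1
def toy_model(row):
    return 1 if row[0] >= 5 else 0


rows = [[x, (x * 7) % 3] for x in range(10)]
labels = [toy_model(r) for r in rows]

imp = permutation_importance(toy_model, rows, labels)
print(imp)
```

Shuffling the ignored feature cannot change any prediction, so its importance is exactly zero, while shuffling the feature the model depends on lowers accuracy. Averaging over several repeats reduces the noise introduced by any single random shuffle.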

4.4.2 SHAP Summary Report

The ShapleyExplainer also provides a comprehensive summary report that includes a suite of visualizations such as the beeswarm plot, bar plot, dependence plot, and enhanced dependence plot. Specifically, the beeswarm plot and bar plot offer a global perspective, illustrating the impact of different features on the outcome across the entire dataset.

>>> shapley_explainer.summary_plot()

The beeswarm plot (shown in Fig. 7) illustrates the distribution of SHAP values for each feature across all instances. Point colors indicate feature value magnitude, with red for larger and blue for smaller values. For instance, the color distribution of 'years_experience' suggests that longer work experience increases the chance of being hired, while the spread of 'years_gaps' implies that a longer gap negatively affects the likelihood of being hired.

Fig. 7 Beeswarm Plot

The order of features in the beeswarm plot is often determined by their importance, as can be seen more explicitly in the bar plot shown in Fig. 8, which ranks features based on the sum of the absolute values of their SHAP values, providing a clear hierarchy of feature importance. For example, the top three influential features are 'years_experience', 'referred', and 'ethical_group'.

Fig. 8 Bar Plot
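The ranking rule behind such a bar plot is simple enough to sketch directly: sum the absolute SHAP values of each feature over all instances and sort in descending order. The SHAP values below are invented for three hypothetical instances, and `rank_by_abs_shap` is an illustrative helper rather than a hana_ml function.

```python
def rank_by_abs_shap(shap_rows):
    """Rank features by the sum of absolute SHAP values over all instances."""
    totals = {}
    for row in shap_rows:
        for feature, value in row.items():
            totals[feature] = totals.get(feature, 0.0) + abs(value)
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical per-instance SHAP values (illustration only)
shap_rows = [
    {"years_experience": 0.30, "referred": -0.10, "years_gaps": -0.05},
    {"years_experience": -0.20, "referred": 0.15, "years_gaps": 0.00},
    {"years_experience": 0.25, "referred": 0.05, "years_gaps": -0.10},
]

ranking = rank_by_abs_shap(shap_rows)
for feature, total in ranking:
    print(f"{feature}: {total:.2f}")
```

Taking absolute values before summing is the important design choice: a feature that pushes some predictions up and others down would otherwise cancel itself out and appear unimportant.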

For a more granular understanding of the impact of each feature on the target variable, we can refer to the dependence plot shown in Fig. 9. This plot illustrates the relationship between a feature and the SHAP values. For instance, a dependence plot for 'years_experience' might show that shorter work experience corresponds to negative SHAP values, with a turning point around 6 years of experience, after which the contribution becomes positive. Additionally, the report includes an enhanced dependence plot that examines the relationship between pairs of features. This can provide insights into how feature interactions affect the model's predictions.

Fig. 9 Dependence Plot

4.4.3 Tree-Based Feature Importance

Feature importance for tree-based models is currently supported by RDT and HGBT in PAL. The feature importance scores can be directly extracted from the importance_ attribute of the 'UnifiedClassification' object "urdt". Below is a code snippet that demonstrates how to obtain and rank these feature importance scores in descending order. The result is shown in Fig. 10, and these scores can then be visualized using a bar plot (as shown in Fig. 11). It is clear that the top three features in terms of importance are 'years_experience', 'income', and 'gcse'.

>>> urdt.importance_.sort('IMPORTANCE', desc=True).collect()

Fig. 10 Feature Importance Scores

Fig. 11 Bar Plot of Feature Importance Scores
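As a rough illustration of what "reduction in impurity" means for a single split, the sketch below computes the Gini impurity before and after splitting a tiny, made-up set of labels on two candidate features. Gini impurity is an assumption made for this example; the impurity measure and aggregation that PAL actually uses for RDT and HGBT are not specified here, and the data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of binary (0/1) labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    p1 = sum(labels) / n
    return 1.0 - p1 ** 2 - (1.0 - p1) ** 2


def impurity_reduction(labels, left_mask):
    """Parent impurity minus the size-weighted impurity of the two children."""
    left = [y for y, m in zip(labels, left_mask) if m]
    right = [y for y, m in zip(labels, left_mask) if not m]
    n = len(labels)
    weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(labels) - weighted


# Hypothetical toy data: eight applicants with a binary outcome
labels = [0, 0, 0, 0, 1, 1, 1, 1]
years_experience = [1, 2, 3, 4, 6, 7, 8, 9]
referred = [0, 1, 0, 1, 0, 1, 0, 1]

gain_exp = impurity_reduction(labels, [x < 5 for x in years_experience])
gain_ref = impurity_reduction(labels, [r == 0 for r in referred])
print(gain_exp, gain_ref)
```

In this toy data the split on years of experience separates the two classes perfectly, so it removes all of the parent's impurity, whereas the split on the referral flag removes none. A tree-based importance score aggregates such reductions (or simple split counts) over all nodes and all trees in which a feature is used.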

Figures 6, 8, and 11 present feature importance scores from 3 different methods, consistently identifying 'years_experience' as the most critical factor. However, the ranking of importance of other features varies across methods. This fluctuation stems from each method's unique approach to assessing feature contributions and the dataset's inherent characteristics. SHAP values are based on a game-theoretic approach that assigns each feature an importance score reflecting its average impact on the model output across all possible feature combinations. In contrast, tree-based models' feature importance scores reflect how frequently a feature is used for data splits within the tree, which may not capture the nuanced interactions between features. Permutation importance, on the other hand, can reveal nonlinear relationships and interactions that are not explicitly modeled. Thus, interpreting the model requires a multifaceted approach, considering the strengths and limitations of each method to inform decision-making.

5. Summary

This blog post introduced ML explainability in SAP HANA PAL, showcasing the use of various local and global methods such as SHAP values, permutation importance, and tree-based feature importance to analyze a synthetic recruiting dataset using the Python machine learning client for SAP HANA (hana_ml). It emphasized the necessity for a multifaceted approach to model interpretation, considering the strengths and limitations of each method for informed decision-making. This feature is crucial for SAP's ethical AI objectives, aiming to ensure fairness, transparency, and trustworthiness in AI applications.
