Introduction:

SAP Datasphere is a platform for creating and managing data models, views, and integrations. Databricks is a widely used data analytics and machine learning platform. In this blog post, we will explore how to bring SAP Datasphere views into Databricks over JDBC, using the SAP HANA Cloud JDBC driver.

Prerequisites:

1. Set up an SAP Datasphere Trial Account: Obtain access to SAP Datasphere by creating a trial account.

2. Create a Space and Add a Connection: Create a space in SAP Datasphere and establish a connection to your SAP S/4HANA system.

3. Add a Database User: Create a database user in your SAP Datasphere space.

4. Set up a Databricks Trial Account: Obtain access to Databricks by creating a trial account.

Step 1: Importing Tables to SAP Datasphere

Once you have set up your SAP Datasphere account, space, connection, and database user, import all the required tables into your SAP Datasphere space using the Data Builder.

Import and deploy the tables.

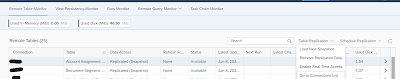

After importing the tables, load a snapshot (Table Replication) for the tables you want to persist. For tables that change rapidly in the operational system, you can keep them federated as remote tables. This can be done using the Data Integration Monitor.

Data Integration Monitor

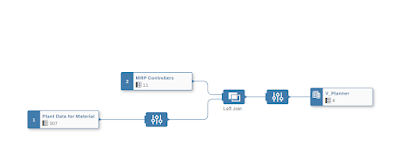

Step 2: Creating Dimension Views and Relational Dataset Views in SAP Datasphere

SAP Datasphere lets you create dimension views and relational dataset views with the graphical view editor in the Data Builder. Use it to model and define the views you want to make available for consumption.

Dimension View

Analytical Dataset (view) for consumption

Expose the created view for consumption:

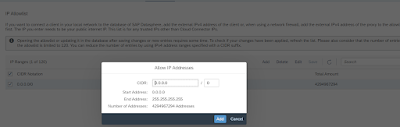

Step 3: Add an IP address range to IP Allowlist

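To connect to the SAP HANA database from Databricks, add the public IP address (or address range) that your Databricks cluster connects from to the IP allowlist in SAP Datasphere. Connections from addresses that are not on the allowlist are rejected.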

IP Allowlist
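Before testing the connection, it can help to confirm that the address your cluster connects from is covered by the ranges you entered. The sketch below uses Python's standard `ipaddress` module. The addresses are documentation-only placeholders, not real cluster IPs, and `ip_in_allowlist` is a hypothetical helper name.

```python
import ipaddress
from typing import Iterable


def ip_in_allowlist(ip: str, allowlist: Iterable[str]) -> bool:
    """Return True if `ip` falls inside any CIDR range in `allowlist`."""
    address = ipaddress.ip_address(ip)
    return any(
        address in ipaddress.ip_network(cidr, strict=False)
        for cidr in allowlist
    )


# Placeholder ranges (assumption): replace with the entries from your allowlist.
allowlist = ["203.0.113.0/24", "198.51.100.7/32"]

print(ip_in_allowlist("203.0.113.42", allowlist))  # inside the /24 range
print(ip_in_allowlist("192.0.2.10", allowlist))    # not covered by any entry
```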

Also, ensure that the database user has the necessary read/write privileges.

Copy the database connection URL for later use.
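The copied host and port go into a JDBC URL of the form `jdbc:sap://<host>:<port>/`. As a minimal sketch, assuming the database listens on port 443 and that you want TLS with certificate validation enabled (`encrypt` and `validateCertificate` are connection properties of the SAP HANA JDBC driver), the URL could be assembled like this. The host name is a placeholder and `build_hana_jdbc_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def build_hana_jdbc_url(host: str, port: int = 443) -> str:
    """Build a JDBC URL for SAP HANA with TLS and certificate validation on."""
    params = {"encrypt": "true", "validateCertificate": "true"}
    return f"jdbc:sap://{host}:{port}/?{urlencode(params)}"


# Placeholder host (assumption): use the host from your database user details.
jdbc_url = build_hana_jdbc_url("example-host.hanacloud.ondemand.com")
print(jdbc_url)
```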

Step 4: Establishing a JDBC Connection from Databricks

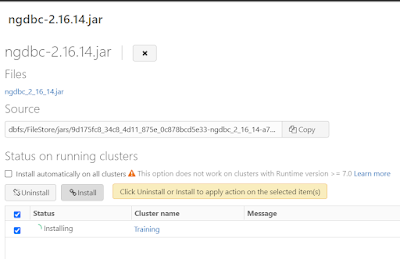

To establish a JDBC connection from Databricks to SAP Datasphere, you will need the SAP HANA JDBC driver. Download the driver JAR file (“ngdbc”) from the SAP Support Launchpad or the SAP Development Tools site. Once downloaded, upload the driver file to Azure Databricks.

SAP Development Tools

In the Azure Databricks workspace, create a new library and upload the downloaded driver file.

Select the cluster on which you want to install the library.

Step 5: Checking Connectivity and Querying Views

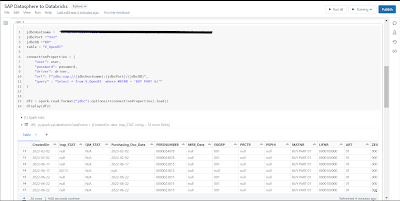

With the SAP HANA JDBC driver installed in Databricks, you can now check the connectivity and read the views created in SAP Datasphere.
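A minimal connectivity check might look like the sketch below, assuming a Databricks notebook where `spark` is predefined and the ngdbc library is installed on the cluster. The host, schema, view, and user names are placeholders rather than values from this walkthrough, and the helper names are hypothetical. `com.sap.db.jdbc.Driver` is the driver class shipped in ngdbc.

```python
def hana_jdbc_options(url, user, password, dbtable):
    """Collect the Spark JDBC reader options for one SAP HANA table or view."""
    return {
        "url": url,
        "driver": "com.sap.db.jdbc.Driver",  # driver class provided by ngdbc
        "user": user,
        "password": password,
        "dbtable": dbtable,
    }


def read_view(spark, options):
    """Read the configured view into a Spark DataFrame."""
    return spark.read.format("jdbc").options(**options).load()


# Placeholder values (assumptions): replace with your own connection details.
options = hana_jdbc_options(
    url="jdbc:sap://example-host.hanacloud.ondemand.com:443/?encrypt=true",
    user="MYSPACE#DBUSER",
    password="<password>",
    dbtable='"MYSPACE"."SALES_VIEW"',
)

# In a Databricks notebook, where `spark` already exists:
# df = read_view(spark, options)
# df.show(5)
```

If the read returns rows, the allowlist, credentials, and driver installation are all working. Rather than hardcoding the password in a notebook, consider reading it from a Databricks secret scope with `dbutils.secrets.get`.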

You can also query the data, for example with the Spark DataFrame API or Spark SQL.
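One way to query rather than read a whole view is Spark's JDBC `query` option, which sends the SQL statement to SAP HANA and returns only the result. The sketch below is an illustration under assumptions: the view and column names are invented, and `with_pushdown_query` is a hypothetical helper.

```python
def with_pushdown_query(base_options, sql):
    """Return reader options that run `sql` in SAP HANA instead of reading a whole view."""
    # Spark does not accept `dbtable` and `query` together, so drop `dbtable`.
    options = {k: v for k, v in base_options.items() if k != "dbtable"}
    options["query"] = sql
    return options


# Placeholder connection details and column names (assumptions).
base_options = {
    "url": "jdbc:sap://example-host.hanacloud.ondemand.com:443/?encrypt=true",
    "driver": "com.sap.db.jdbc.Driver",
    "user": "MYSPACE#DBUSER",
    "password": "<password>",
    "dbtable": '"MYSPACE"."SALES_VIEW"',
}
sql = (
    'SELECT "REGION", SUM("AMOUNT") AS "TOTAL" '
    'FROM "MYSPACE"."SALES_VIEW" GROUP BY "REGION"'
)
query_options = with_pushdown_query(base_options, sql)

# In a Databricks notebook, where `spark` already exists:
# df = spark.read.format("jdbc").options(**query_options).load()
# df.createOrReplaceTempView("sales_by_region")
# spark.sql("SELECT * FROM sales_by_region ORDER BY TOTAL DESC").show()
```

Pushing the aggregation down keeps the transfer small. Registering the result as a temporary view then makes it available to Spark SQL.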

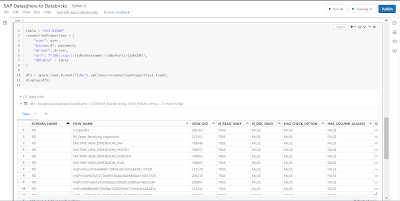

Additionally, you can view all the tables/views that were deployed for consumption in SAP Datasphere.
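To list what is visible, one option is to query the SAP HANA system view `SYS.VIEWS`, filtered by the schema of your space. This is a sketch that assumes the database user is allowed to read that system view. The schema name is a placeholder and `list_views_query` is a hypothetical helper.

```python
def list_views_query(schema):
    """Build a query that lists the views in one SAP HANA schema."""
    escaped = schema.replace("'", "''")  # escape quotes inside the SQL literal
    return (
        'SELECT "SCHEMA_NAME", "VIEW_NAME" FROM "SYS"."VIEWS" '
        f"WHERE \"SCHEMA_NAME\" = '{escaped}'"
    )


# Placeholder schema name (assumption): use the schema of your space.
views_sql = list_views_query("MYSPACE")
print(views_sql)

# In a Databricks notebook, pass `views_sql` as the JDBC `query` option
# together with your url, driver, user, and password options.
```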
