Prerequisites

◉ SAP BTP Trial account with SAP HANA Database created and running in SAP HANA Cloud

◉ cf command-line tool (CLI)

If you are not familiar with deploying Python applications to SAP BTP, CloudFoundry environment, then please check Create a Python Application via Cloud Foundry Command Line Interface tutorial first.

I won’t repeat steps from there, like how to logon to your SAP BTP account using cf CLI. But I will cover extras we are going to work with / experiment with here.

Let’s have a look at the application

Before discussing the details of the implementation of the application, let’s first check how it look and what it does.

Once started:

1. The app checks that expected schema exists in SAP HANA Cloud database and connects to it

2. It allows a user to upload any CSV or TXT file (please note 10MB limit).

3. Once you preview uploaded file you can persist it into your SAP HANA db instance.

We can see a table "STAGING4UPLOADS"."STAGING" with corresponding structure created and data uploaded.

If we upload another file, then the structure of this table will be recreated.

Implementation

Create a service for a staging area in SAP HANA db…

Checking available plans for hana service:

cf marketplace -e hana

The following command creates a hana service instance named hdb-staging-schema with the plan schema and database schema name to be created STAGING4UPLOADS:

cf create-service hana schema hdb-staging-schema -c '{ "schema" : "STAGING4UPLOADS" }'

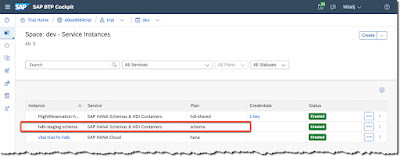

Once created you should see the service in your SAP BTP Cockpit too:

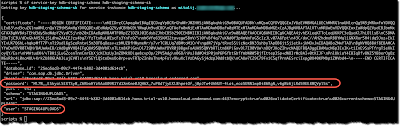

…and a service key for us to access data in that schema

cf create-service-key hdb-staging-schema hdb-staging-schema-sk

cf service-key hdb-staging-schema hdb-staging-schema-sk

We can use it to connect to the database and the schema.

CONNECT STAGING4UPLOADS PASSWORD "Your_Long_Password_From_The_ServiceKey";

SELECT Current_User, Current_Schema FROM dummy;

Implement our application

The source code of the application is downloadable from https://github.com/SAP-samples/sap-tech-bytes/tree/2022-06-08-btp-cf-py-app-hanacloud-csv-flexload.

loadfile_mvp.py

Main file with the minimal required logic (therefore mvp in the name).

from io import StringIO

import streamlit as st

import hana_ml.dataframe as dataframe

from cfenv import AppEnv

import pandas as pd

st.set_page_config(

page_title="CSV-to-HDB",

page_icon="https://hana-cockpit.cfapps.us10.hana.ondemand.com/hcs/sap/hana/cloud/assets/images/sap_logo.svg",

layout="wide",

)

st.title('CSV-to-HDB')

env = AppEnv()

HANA_SERVICE = 'hdb-staging-schema'

hana = env.get_service(name=HANA_SERVICE)

# Instantiate connection object

conn = dataframe.ConnectionContext(address=hana.credentials['host'],

port=int(hana.credentials['port']),

user=hana.credentials['user'],

password=hana.credentials['password'],

encrypt='true',

sslTrustStore=hana.credentials['certificate'])

st.write(f'1. Connected to the schema {conn.get_current_schema()}')

df_data = None

some_file = st.file_uploader("2. Upload a file to be loaded into SAP HANA Cloud db", type={"csv", "txt"})

if some_file is not None:

# To read file as bytes:

bytes_data = some_file.getvalue()

#st.write(bytes_data)

# To convert to a string based IO:

stringio = StringIO(some_file.getvalue().decode("utf-8"))

#st.write(stringio)

# To read file as string:

string_data = stringio.read().splitlines()

st.write('File preview (up to 5 lines):', string_data[:5])

# Load CSV into pandas DataFrame

df_data = None

if some_file is not None:

df_data = pd.read_csv(some_file, sep=None).convert_dtypes()

st.write(f"Pandas dataframe size: {0.0 if df_data is None else round(df_data.memory_usage(deep=True).sum()/1024/1024, 2)} MB")

st.write('Dataframe (up to 5 rows):', df_data if df_data is None else df_data.head(5))

# Load data to SAP HANA

service_schema = hana.credentials['schema']

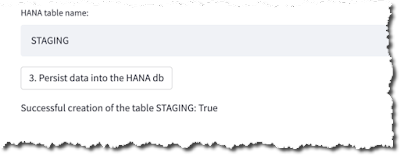

target_table = st.text_input('HANA table name: ', 'STAGING')

if st.button('3. Persist data into the HANA db') and df_data is not None and target_table!='':

df_remote = dataframe.create_dataframe_from_pandas(connection_context = conn,

pandas_df = df_data,

table_name = target_table,

schema = service_schema,

force = True,

disable_progressbar = True)

st.write(f'Successful creation of the table {target_table}: {conn.has_table(table=target_table, schema = service_schema)}')

Please note:

1. Setting up the page icon

2. The binding to the service to get connection details for SAP HANA database.

requirements.txt

The file with required modules to be pulled from PyPI.

cfenv

hana-ml

streamlit

1. cfenv is a tiny utility that simplifies interactions with Cloud Foundry environment variables

2. hana-ml is a Python machine learning client for SAP HANA

3. streamlit is a Streamlit’s open-source app framework to create web apps

runtime.txt

The file defines the version of Python to be used. The CloudFoundry buildpack with the corresponding version will be downloaded.

python-3.9.*

Please note the use of .* which ensures that the latest available buildpck for Python 3.9 will be used.

Procfile

The files defines the command to be executed when the application start.

web: streamlit run loadfile_mvp.py --server.port ${PORT}

Please note the use of the variable ${PORT} which ensures that streamlit app runs (via --server.port option) on the default web port of the CloudFoundry app instead of the default Streamlit port 5000.

manifest.yml

The CloudFoundry manifest of the application.

---

applications:

- name: ((app-name))

routes:

- route: ((app-name))-((account-name)).cfapps.((btp-region)).hana.ondemand.com

memory: 2048M

buildpacks:

- python_buildpack

env:

STREAMLIT_SERVER_MAX_UPLOAD_SIZE: 100

services:

- hdb-staging-schema

Please note:

1. The binding to hdb-staging-schemaa service instance.

2. Passing the Streamlit’s environment variable STREAMLIT_SERVER_MAX_UPLOAD_SIZE to limit a size of a file uploaded.

3. The use of variables to avoid hard-coding of the application name, account name (used to create unique URLs for your deployments), and a BTP region where the app is deployed. These variables are provided via a separate file vars.yml described below.

vars.yml

The file with the variables for the manifest file.

app-name: csv2hdb

account-name: e8ee8684trial

btp-region: us10

You should modify these three variable to match your application’s requirements!

Deploy the application

To deploy the application use:

cf push --vars-file vars.yml

You should see a log similar to the one below:

Pushing app csv2hdb to org e8ee8684trial / space dev as witalij... ...

Applying manifest file /.../sap-tech-bytes/scripts/manifest.yml...

Manifest applied

Packaging files to upload...

Uploading files...

1.89 KiB / 1.89 KiB [=========================================================================================================================================] 100.00% 1s

Waiting for API to complete processing files...

Staging app and tracing logs...

Downloading python_buildpack...

Downloaded python_buildpack

Cell d5f8ba8f-6b80-4d41-b335-1a257634845d creating container for instance e4c343c7-2922-43d4-a6a0-b7055e4ab8a7

Cell d5f8ba8f-6b80-4d41-b335-1a257634845d successfully created container for instance e4c343c7-2922-43d4-a6a0-b7055e4ab8a7

Downloading app package...

Downloaded app package (1.9K)

-----> Python Buildpack version 1.7.49

-----> Supplying Python

-----> Installing python 3.9.9

Copy [/tmp/buildpacks/49b223c631091864eee2a117ec43f025/dependencies/15b399819fce43a5b5eedb0316dbb3c1/python_3.9.9_linux_x64_cflinuxfs3_6a29c275.tgz]

Using python's pip module

...

-----> Running Pip Install

Collecting cfenv

Downloading cfenv-0.5.3-py2.py3-none-any.whl (4.5 kB)

Collecting hana-ml

Downloading hana_ml-2.13.22060800-py3-none-any.whl (5.1 MB)

Collecting streamlit

Downloading streamlit-1.10.0-py2.py3-none-any.whl (9.1 MB)

...

Exit status 0

Uploading droplet, build artifacts cache...

Uploading droplet...

Uploading build artifacts cache...

Uploaded build artifacts cache (127.7M)

Uploaded droplet (201.5M)

Uploading complete

...

Waiting for app csv2hdb to start...

Instances starting...

name: csv2hdb

requested state: started

isolation segment: trial

routes: csv2hdb-e8ee8684trial.cfapps.us10.hana.ondemand.com

last uploaded: Thu 09 Jun 12:55:08 CEST 2022

stack: cflinuxfs3

buildpacks:

...

name version detect output buildpack name

python_buildpack 1.7.49 python python

type: web

sidecars:

instances: 1/1

memory usage: 2048M

start command: streamlit run loadfile_mvp.py --server.port ${PORT}

state since cpu memory disk details

#0 running 2022-06-09T10:55:34Z 0.0% 106.1M of 2G 751M of 1G

The application is running and we can see it from the command line…

cf app csv2hdb

…and in the SAP BTP Cockpit

No comments:

Post a Comment