Motivation

Due to the intense promotion of SAP HANA (Cloud) Platform Smart Data Integration, I see that the easy integration of multiple sources and data utilization in the cloud has been growing in the market and therefore is sufficient justification to go for this tendency more and more.

Key Aspects

SAP HANA Smart Data Integration

Motivated by this vision, I decided to share a real world possible approach for SAP HANA Smart Data Integration to combine multiple data sources to model a conceptualized and reusable information entity for the whole company comsuption.

Extract, Load & Transform (ELT)

Another great achievement with this approach and SAP Technology is the ELT. If you don’t know it yet, here it goes:

ELT is a variation of the Extract, Transform, Load (ETL), a data integration process in which transformation takes place on an intermediate server before it is loaded into the target. In contrast, ELT allows raw data to be loaded directly into the target and transformed there. This capability is most useful for processing the large data sets required for business intelligence (BI) and big data Analytics.

Replication (Batch x Real-Time)

Obviously, with the help of the innovations and facilities that software and hardware have brought, today’s requirements have changed enough to make realtime an integral part of today’s solutions. However, batch processing has its specificity ensured within the analytical diagnostics. For our approach the batch processing was used, remote subscriptions weren’t considered for realtime processing because it would not be justified since the requirement didn’t have any concern about it.

Requirements

To start, let’s take a look in the business and technical requirements/considerations:

- The customerwants to integrate information from legacy with SAP ERP systems to combine them for a centralized and consistent analysis of the intellectual growth of its employees.

- The information of your employees’ certifications is generated and managed on an external system.While master data is handled in SAP ERP.

- This information should be available as foundation view (reusable source) to the whole company. It means that this view has to be semantically put on place and has to be the provider for further queries might exists in future as only point of truth for this matter.

- There are multiple source types that information comes from: SAP ERP Table, CSV File and Excel file.

- SAP HANA Smart Data Integration is part of Landscape and is available for development.

- No other ELT/ETL tools are available.

- Data must be replicated.

- The customer wants to take advantage of the best of new SAP HANA Cloud replication / integration technology through a new, innovative approach.

For the scenario above, the following landscape was designed for implementing the solution:

Linking the dots:

On the left side, an SAP HANA Cloud platform illustrates the use of additional features of SAP Enterprise Information Management (EIM), SAP HANA Smart Data Integration, and Data Provisioning Server; And the native modeling capabilities of SAP HANA Database (design-time artifacts).

In the middle, just to measure the security aspect, the firewall is represented to illustrate secure communication between the SAP HANA Cloud Platform and the machine that the Data Provisioning Agent (DPA) is installed on.

Another point is that in DPA the adapters are available for future registration in SAP HANA Database and according to the figure three of them will be used: fileAdapter, excelAdapter and OracleECCAdapter.

On the right side, the sources are discriminated: SAP ERP tables, CSV and Excel files.

ELT Layers

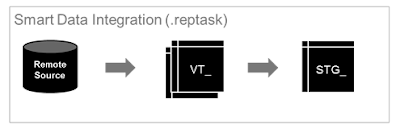

The first step to this approach is to design how the ELT will be composed then:

1) the first layer will be responsible for bringing the raw data, without any changes, depicting exactly what we have in the source. We will call it “Staging”

2) in the second layer, we will treat the data from the first layer. If datathat did not pass the tests, they go to the tables “Logs” else “Clean” (vide below image)

Foundation Layer

Now that we have designed the ELT and the data is already replicated into HANA, we need to design the model that we will make available to the entire company (the foundation view). For this purpose, the following rational was provided:

During the functional survey, it was observed that the link between employee certification / training information does not occur directly. For this, there is a correlation coming from an external source (Excel file) that is managed and manipulated by a specific corporate area.

In order to acomplish and support rational in the figure above, a data flow was designed, considering the restriction written:

Implementing

Now that we understand the requirements, constraints and available environment (tools), we can start with the implementation of this approach. To do this, the first step is to configure the adapters in the Data Provisioning Agent.

Data Provisioning Adapters

As we have seen, we will use 3 adapters: fileAdapter, excelAdapter and oracleECCAdapter. Then follow the instructions to register them in SAP HANA.

- Connect to SAP HANA Cloud Host/Port

- Once it’s connected to SAP HANA Cloud and adapter is registered, adapters can be registered.

- FileAdapter has to be registered into SAP HANA.

- Consider providing a token for the adapter now, since you will need it when you create the remote source in SAP HANA, and take the time to configure the directories that will received the files to be replicated.

- ExcelAdapter has also to be registered into SAP HANA ( vide “Yes” on the “Registered with HANA?” column”).

- Also consider providing a token for the adapter now, since you will need it when you create the remote source in SAP HANA.

- Last but not least, oracleECCAdapter has to be registered into SAP HANA.

- DPA additional settings are not required; but in Oracle side, database permissions, user creations and roles are prerequisites for remote source to be created into SAP HANA to work properly.

Creating Remote Sources

At this time, SAP HANA knows the adapters and it’s about time to create the remote sources to them.

- create file remote source “RS_FFL”

Additional info: SAP HANA Academy – Smart Data Integration: File Adapters

- create excel remote source “RS_EXL”

Additional info: SAP HANA Academy – SDI: Excel Adapter

- create Oracle ECC remote source “RS_ECC”

Create Replication Tasks

Reptasks development can vary a lot when variables ( such as architecture, requirements, landscape and, in some cases, clusters, filters, partitions, realtime or non-realtime characteristics) are considered before creating them. However, In our case, each data source has its own type what make us to create a reptask for each one since the reptaks is remote source-centric.

During the next reptasks illustrations, I will comment on three sections: header, item, and details. I will explain the motivations for the settings used and the results obtained. The idea here is to provide insights so readers can promote more resources from this SAP technology in their projects, leveraging their results even more.

1. Up to this point there is no dependence between artifacts. It means that we can create the reptask we want in the order we want. Let’s get started with “Person” that comes from ECC Adapter and is responsible for bringing person master data from ERP system.PERSON

HEADER

As I mentioned the remote source is unique. In this case, we are using RS_ECC. We do not need to use the “Drop target table if exists” flag because we do not have the possibility to change metadata foreseen in the application and / or short-term changes that need to be automated.

Just to remeber, “Drop target table if exists” optioin works with one of the following:

Existing: When this option is selected and there is an existing target table, the target table is deleted and recreated.

New: When this option is selected, a new target table is created.

As in the “Item” section we will manually enter the target and virtual table name and we do not want the package name to be appended to the table names in the Catalog, we’ll not use the optional flag “Use Package Prefix” and “Virtual Table Prefix “.

ITEM

As simple as it seems, just choose the data source (in this case, table PA9013) and write the name of the virtual table and target that is going to be created when saving the reptask.

DETAILS

Following our approach, we do not have many settings to do. Just reduce the number of fields from the ECC table to the 3 that we need for modeling, set the replication behavior to “Initial load only” (E)* because our requirement is restricted to batch processing and select the “Truncate table” flag. The “Truncate table” option is used so that we can use the STG_ * table only as a persistent staging area that will assume a temporary role of data acquisition. Raw data (L)* will be rested there until they are treated and taken to the second level (T)*.

ELT* comes to play!!!

2. Now, it’s the turn of “Employee” that comes from File Adapter and is responsible for bringing person and employee link.EMPLOYEE

HEADER

This time, we are using RS_FFL and the comments for this section are the same as the “Person”. The exception here is “Drop target table if exists” is now selected. Why is it being used?

The fileAdapter, in addition to the file, works with another file called “config file” that is responsible for providing the metadata required to read / write to/from SAP HANA via the agent. The point is that these settings are not updated automatically. That is, when the config file is modified where DPA is installed, SAP HANA does not update these parameters for the virtual table.

Try yourself:

SELECT * FROM VIRTUAL_TABLE_PROPERTIES

WHERE TABLE_NAME = 'VT_TURNOVER' -- YOUR VIRTUAL TABLE NAME

Because of this, if config gile changes, simply save the reptask in the productive environment to regenerate the VT_* based on the new settings and system will update it with the new metadata without the need for new developments.

ITEM

Just choose the data source (in this case, the file is turnover — I know that “turnover” does not have much to do with the “employee” proposal, but as it’s a real-world example … disregard 🙂 ) … and write the name of the virtual table and target that is going to be created when saving the reptask.

DETAILS

The comments for this section are the same as the “Person”.

You might notice an automatic addition of fields just like “PATH”, “NAME” etc… Be calm. They come from fileAdapter and you can take them out or just leave them there for further usage.

3. Lastly, it’s the turn of “Certification/Training” data that comes from Excel Adapter and is responsible for fact data about Employee Certification and Training.CERTIFICATION/TRAINING

HEADER

This time, we are using RS_EXL and the comments for this section are the same as the “Person”.

ITEM

The comments for this section are the same as the “Person”.

For ExcelAdapter, just one important tip in this section: stick to the name of the tab, columns, header, and more. Changes to the spreadsheet structure may incur errors in reptask.

DETAILS

The comments for this section are the same as the “Person”.

But, I must add my two pennies to the “Target Columns” tab: depending on the configuration on the remote source, the column “Projection Expression” may come without correct column assignment “COL1, COL2, COL3 …”. Be aware to set up the columns and data type.

Create FlowGraphs

From now on we will treat the data, ensure referential integrity, do consistencies and other rules to the second level. In this layer, we have two outputs: clean and log tables. Only then, we will use SAP HANA modeling capabilities to generate the foundation view on these tables (clean ones).

CONSIDERATIONS

For this approach, we will use 3 SAP HANA Smart Data Integration graphical nodes: “Data Source”, “Data Sync” and “Procedure”.

Because the data source contains referential integrity rules and data typing, data acquisition from SAP ERP will not have an associated flowgraph for transformation and data cleansing.

The two flowgraphs will be generated with “batch task” behavior since our application does not requires realtime processing.

DEVELOPMENT

1. EMPLOYEE

The first step is to select as the data source the STG_TURNOVER table that we obtained with the execution of the corresponding reptask. From there, a procedure is called to perform the data treatment abstracting two outputs for two other tables (CLOG_TURNOVER and CSTG_TURNOVER).

2. CERTIFICATION/TRAINING

The first step is to select as the data source the STG_CERTIFICA table that we obtained with the execution of the corresponding reptask. From there, a procedure is called to perform the data treatment abstracting two outputs for two other tables (CLOG_CERTIFICA and CSTG_CERTIFICA).

PROCEDURE NODE

The usage of the procedure node in the flowgraph is very simple; However, at the time of put the pieces together, we may have some doubts. In our case, we have 1 input and 2 outputs. Obviously, the result of the procedure must provide two outputs and get only one input.

For this, the idea is to generate 3 corresponding table types and pass as a parameter to the procedure and then pass the procedure to the node that will, therefore, pass the artifact to the flowgraph.

Of course you can find much more information about flowgraph development. I’d suggest you Bob’s videos (pretty good stuff):

Modeling the Foundation View

Well, now that we have done the ELT and already have the data treated in the Clean tables, we just have to model our foundation view in calc. view by implementing the rational we designed before.

Analyze your Data

From now on, the forms of analysis are what we already know. Just creativity and analytical knowledge.

No comments:

Post a Comment