NiFi is a great Apache web-based tool for routing and transforming data, kind of an ETL tool. In my scenario, I am fetching tweets from the Twitter API. I want to save them to Hadoop, and also filter them and save them to HANA for sentiment analysis.

My first idea was to save them to Hadoop and then load them into HANA. After discovering NiFi, it was obvious that the best solution was to fetch the tweet, format the JSON, and then insert it into HANA.

Why NiFi? The answer is simple: it is very intuitive and easy to use. It is also very easy to install, and it already integrates with Twitter, Hadoop, and JDBC. So it was the obvious choice for my idea.

Of course, you can use this setup with most of the SQL processors that NiFi has, but in this example we'll show how to insert a JSON file into HANA.

After we get the tweet, the first thing we need to do is create the ConvertJSONToSQL processor.

In its configuration, the first property is the JDBC Connection Pool. There, we select “Create new service”.

Here, we select DBCPConnectionPool and click Create.

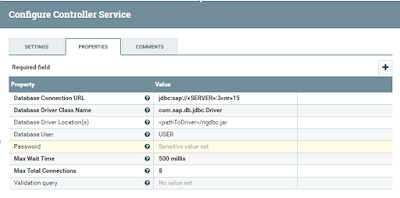

Once we've done this, we click the arrow next to DBCPConnectionPool to configure the actual connection.

When asked to save changes, click Yes. On the next screen, click the edit icon on the far right.

In Database Connection URL, enter jdbc:sap://<SERVER>:3<nr>15. Here, <SERVER> is the name of the HANA server and <nr> is the number of the HANA instance.

For example, if the server is named hanaServer and the instance number is 00, the connection URL will be “jdbc:sap://hanaServer:30015”.
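The URL pattern can be captured in a small helper. This is only a sketch, and it assumes a single-container HANA system, where the SQL port is 3<nr>15. Multitenant systems use different ports per database, so check your own landscape. The function name `hana_jdbc_url` is hypothetical.

```python
def hana_jdbc_url(server: str, instance: str) -> str:
    """Build a HANA JDBC URL of the form jdbc:sap://<SERVER>:3<nr>15.

    Assumes a single-container system, where the SQL port is 3<nr>15.
    """
    # The instance number is always two digits, e.g. "00" or "07".
    if len(instance) != 2 or not instance.isdigit():
        raise ValueError(f"instance number must be two digits, got {instance!r}")
    port = f"3{instance}15"
    return f"jdbc:sap://{server}:{port}"


if __name__ == "__main__":
    print(hana_jdbc_url("hanaServer", "00"))  # jdbc:sap://hanaServer:30015
```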

In Database Driver Location, the path to the driver must be accessible to the NiFi user. Either copy the driver into the NiFi folder or make it readable by the NiFi user.

In my case, I copied ngdbc.jar to /nifi/lib, where /nifi was my installation directory, and changed the owner of the file to the nifi user.

The driver (ngdbc.jar) is installed as part of the SAP HANA client installation and is located at:

◈ C:\Program Files\sap\hdbclient\ on Microsoft Windows platforms

◈ /usr/sap/hdbclient/ on Linux and UNIX platforms
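The jar has to be readable by the NiFi user. One quick way to verify this is to run a small check as that user, for example `sudo -u nifi python3 check_driver.py`. That command assumes the account is called `nifi`, and the script file name is up to you. The sketch below uses a hypothetical helper, `find_hana_driver`. It looks for `ngdbc.jar` in a given directory, or by default in the client locations listed above.

```python
import os
import platform
from typing import Optional

# Default SAP HANA client locations listed above.
DEFAULT_CLIENT_DIRS = {
    "Windows": r"C:\Program Files\sap\hdbclient",
    "Linux": "/usr/sap/hdbclient",
}


def find_hana_driver(directory: Optional[str] = None) -> Optional[str]:
    """Return the path to ngdbc.jar in `directory` if the current user can read it, else None."""
    if directory is None:
        # Fall back to the UNIX default on platforms not listed above.
        directory = DEFAULT_CLIENT_DIRS.get(platform.system(), "/usr/sap/hdbclient")
    jar = os.path.join(directory, "ngdbc.jar")
    if os.path.isfile(jar) and os.access(jar, os.R_OK):
        return jar
    return None


if __name__ == "__main__":
    # Check the copy under the NiFi lib folder used in this post.
    print(find_hana_driver("/nifi/lib"))
```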

Database User and Password are your HANA credentials.

You can leave the rest as it is.

All the information about the HANA JDBC driver is in the SAP documentation topic “Connect to SAP HANA via JDBC”.

In the end, the connection service must be enabled, like this:

Once we have created the connection, we are ready to format the JSON and send it to our database.

In my case, I'm sending only part of each retrieved tweet to the DB. So first, I extract the fields I'm interested in with the JoltTransformJSON processor, using the following specification in its properties:

```json
[{
  "operation": "shift",
  "spec": {
    "created_at": "created_date",
    "id": "tweet_id",
    "text": "tweet",
    "user": {
      "id": "user_id"
    }
  }
}]
```
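To show what this shift spec does, here is a rough Python equivalent applied to a made-up, heavily trimmed tweet. It only illustrates the mapping and is not how Jolt works internally. The sample values are hypothetical. Note that fields not named in the spec, such as `lang` or `user.screen_name`, do not appear in the output.

```python
import json

# Simplified, hypothetical tweet as delivered by the Twitter API (most fields omitted).
tweet = {
    "created_at": "Mon Jan 01 12:00:00 +0000 2018",
    "id": 123456789,
    "text": "Loving #SAPHANA today!",
    "lang": "en",
    "user": {"id": 42, "screen_name": "someone"},
}


def shift_tweet(t: dict) -> dict:
    """Mimic the Jolt shift spec: copy only the mapped fields, renamed."""
    out = {}
    # Top-level renames: created_at -> created_date, id -> tweet_id, text -> tweet
    for src, dst in (("created_at", "created_date"), ("id", "tweet_id"), ("text", "tweet")):
        if src in t:
            out[dst] = t[src]
    # Nested rename: user.id -> user_id (other user fields are dropped)
    user = t.get("user") or {}
    if "id" in user:
        out["user_id"] = user["id"]
    return out


print(json.dumps(shift_tweet(tweet)))
```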

Then I send the transformed tweets to the ConvertJSONToSQL processor that we created.

Before we can do that, we must make sure the matching table exists in HANA. In my case, it is:

You can see that every field produced by the JoltTransformJSON processor has a column in the table.
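Before wiring up the processor, it can help to check that the JSON keys line up with the table columns. The table definition isn't reproduced here, so the column names below (CREATED_DATE, TWEET_ID, TWEET, USER_ID) are an assumption based on the Jolt output. The sketch normalizes names by upper-casing them and removing underscores. My understanding is that ConvertJSONToSQL does this when Translate Field Names is true, but treat that as an assumption and check the processor documentation for your NiFi version.

```python
from typing import Iterable, Set, Tuple

# Hypothetical columns of TWDATA.TWEETSFILTRADOS, inferred from the Jolt output.
TABLE_COLUMNS = ["CREATED_DATE", "TWEET_ID", "TWEET", "USER_ID"]


def normalize(name: str) -> str:
    """Upper-case and drop underscores (assumed Translate Field Names behaviour)."""
    return name.upper().replace("_", "")


def compare_fields(json_keys: Iterable[str], columns: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Return (fields with no matching column, columns with no matching field)."""
    fields = {normalize(k): k for k in json_keys}
    cols = {normalize(c): c for c in columns}
    unmatched_fields = {fields[n] for n in fields.keys() - cols.keys()}
    unmatched_columns = {cols[n] for n in cols.keys() - fields.keys()}
    return unmatched_fields, unmatched_columns


if __name__ == "__main__":
    keys = ["created_date", "tweet_id", "tweet", "user_id"]
    print(compare_fields(keys, TABLE_COLUMNS))
```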

Now we can configure our ConvertJSONToSQL processor.

Basically, we set Statement Type to INSERT, use the JDBC connection pool we created earlier, and target the table TWEETSFILTRADOS in the schema TWDATA (sorry for the Spanish table name; filtrados means filtered).

We can leave the rest as it is.
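Roughly, ConvertJSONToSQL turns each JSON object into a parameterized INSERT. The values travel as `sql.args.N.value` FlowFile attributes (alongside `sql.args.N.type`), and PutSQL then binds them. The sketch below imitates only that shape. It is simplified: column names are just upper-cased, and there is no type handling. `build_insert` is a hypothetical name.

```python
from typing import Dict, Tuple


def build_insert(record: Dict[str, object], schema: str, table: str) -> Tuple[str, Dict[str, str]]:
    """Build a parameterized INSERT plus sql.args.N.value-style attributes."""
    columns = [k.upper() for k in record]
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {schema}.{table} ({', '.join(columns)}) VALUES ({placeholders})"
    # Parameters are numbered from 1, following JDBC's convention.
    attrs = {f"sql.args.{i}.value": str(v) for i, v in enumerate(record.values(), start=1)}
    return sql, attrs


if __name__ == "__main__":
    row = {"created_date": "Mon Jan 01 12:00:00 +0000 2018", "tweet_id": 123456789,
           "tweet": "Loving #SAPHANA today!", "user_id": 42}
    sql, attrs = build_insert(row, "TWDATA", "TWEETSFILTRADOS")
    print(sql)
    print(attrs)
```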

Finally, we create a PutSQL processor, pointing at the same JDBC connection pool, to execute the insert.
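PutSQL executes the parameterized statement against the connection pool and binds the parameters in order. HANA isn't available in a standalone example, so the sketch below uses Python's built-in sqlite3 as a stand-in database, purely for illustration. The column types are also assumed. It attaches an in-memory database named TWDATA so the schema-qualified table name from this post works unchanged.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Stand-in for the HANA schema TWDATA (sqlite3 is used here instead of HANA).
conn.execute("ATTACH DATABASE ':memory:' AS TWDATA")
conn.execute(
    "CREATE TABLE TWDATA.TWEETSFILTRADOS ("
    "CREATED_DATE TEXT, TWEET_ID INTEGER, TWEET TEXT, USER_ID INTEGER)"
)

# Statement and parameters in the shape produced by ConvertJSONToSQL.
sql = ("INSERT INTO TWDATA.TWEETSFILTRADOS (CREATED_DATE, TWEET_ID, TWEET, USER_ID) "
       "VALUES (?, ?, ?, ?)")
params = ("Mon Jan 01 12:00:00 +0000 2018", 123456789, "Loving #SAPHANA today!", 42)

with conn:  # commits the transaction if the insert succeeds
    conn.execute(sql, params)

for row in conn.execute("SELECT TWEET_ID, USER_ID FROM TWDATA.TWEETSFILTRADOS"):
    print(row)
```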

In my case, I'm also saving the tweets to Hadoop. Apart from that, the flow should look like this in the end:

And now, our table is being filled:

Another way to do this would be with OData and REST services, which I haven't tried yet. So far this approach is working fine, but those options could be worth exploring in the future.
