Let’s Start –

Here is small list & introduction of the methods which I will be implementing one by one.

I have used Jupyter Notebook which uses the real time itemset dataset to demonstrate the association rule mining algorithms below which are provided by in hana_ml package.

◈ Apriori

◈ AprioriLite

◈ FPGrowth

◈ KORD

Apriori –

Apriori is a classic predictive analysis algorithm for finding association rules used in association analysis. Association analysis uncovers the hidden patterns, correlations or casual structures among a set of items or objects. For example, association analysis enables you to understand what products and services customers tend to purchase at the same time. By analyzing the purchasing trends of your customers with association analysis, you can predict their future behavior.

Prerequisites –

The input data does not contain null value.

There are no duplicated items in each transaction.

Apriori algorithm takes the itemset as an input parameter and generates the association rules based on the mini-support passed during model fitting.

Apriori Property –

If an item set is frequent, then all its subset items will be frequent.The item set is frequent if the support for the item set is more that support threshold.Before we execute the model we give the minimum support which actually filter’s the further itemset to be processed.

AprioriLite

This is a light association rule mining algorithm to realize the Apriori algorithm. It only calculates two large item sets.

e.g. for Apriori –

Suppose if we take mini support as 0.25 the further frequent itemset calculation

Suppose we have below transaction data for association analysis

Here is small list & introduction of the methods which I will be implementing one by one.

Introduction and algorithm description

I have used Jupyter Notebook which uses the real time itemset dataset to demonstrate the association rule mining algorithms below which are provided by in hana_ml package.

◈ Apriori

◈ AprioriLite

◈ FPGrowth

◈ KORD

Apriori –

Apriori is a classic predictive analysis algorithm for finding association rules used in association analysis. Association analysis uncovers the hidden patterns, correlations or casual structures among a set of items or objects. For example, association analysis enables you to understand what products and services customers tend to purchase at the same time. By analyzing the purchasing trends of your customers with association analysis, you can predict their future behavior.

Prerequisites –

The input data does not contain null value.

There are no duplicated items in each transaction.

Apriori algorithm takes the itemset as an input parameter and generates the association rules based on the mini-support passed during model fitting.

Apriori Property –

If an item set is frequent, then all its subset items will be frequent.The item set is frequent if the support for the item set is more that support threshold.Before we execute the model we give the minimum support which actually filter’s the further itemset to be processed.

AprioriLite

This is a light association rule mining algorithm to realize the Apriori algorithm. It only calculates two large item sets.

e.g. for Apriori –

Suppose if we take mini support as 0.25 the further frequent itemset calculation

Suppose we have below transaction data for association analysis

I will be illustrating on this dataset for rule mining & examples of formulas used for association rule generation. Start Applying the formula on the small dataset will clear the terms used in algorithms & later manipulation can be done on all the parameters getting passed during fitting of model or method.

Consider mini support 0.25 let’s see.

Support- how frequent an itemset in all the transactions

e.g. Support ({News} -> {Finance}) = 4 / 6 = .66 (66%)

Support indicates that 66% is the frequency of itemset (news & finance) together in total of 6 transaction.

Confidence – This is also one of the main factor in association rule analysis.This measure defines the likeliness of occurrence of consequent on the cart given that the cart already has the antecedents.

Confidence({X} -> {Y}) = Transactions containing both X & Y / Transactions containing X

e.g. Confi({News} – > {Finance}) = 4 / 5 = .8 (80%)

Lift – This is also one of the most important factor in finding High Association rule among all the generated rules.

Lift controls for the support (frequency) of consequent while calculating the conditional probability of occurrence of {Y} given {X}.Lift is a very literal term given to this measure.

Lift({X} -> {Y}) = ( (Transactions containing both X & Y) / (Transactions containing X) ) /

fraction of transaction containing Y

Lift({News} – > {Finance}) = (4/5) / 4 = 0.20(20%)

A value of lift greater than 1 vouches for high association between {Y} and {X}.

FPGrowth

FP-Growth is an algorithm to find frequent patterns from transactions without generating a candidate itemset.

In PAL, the FP-Growth algorithm is extended to find association rules in three steps:

– Converts the transactions into a compressed frequent pattern tree (FP-Tree)

– Recursively finds frequent patterns from the FP-Tree

– Generates association rules based on the frequent patterns found in Step 2

– FP-Growth with relational output is also supported.

Prerequisites

– The input data does not contain null value.

– There are no duplicated items in each transaction.

After a short explanation of method i will be now sharing some quick & brief info about the dataset

we will analyze the store data for frequent pattern mining , this is real time data from from Kaggle for market basket analysis.

here is the data soruce –

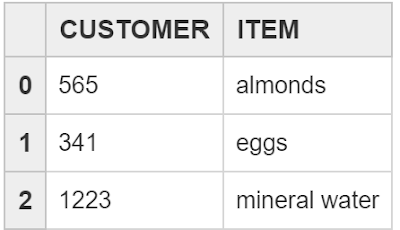

A quick transaction preview of dataset

So here we have transactions & Items which are accepted by the Apriori & other methods as well hence if you are not having data in that particular format then no worries , I have also written some staff on that as well which is going to help you to process the dataset & convert that into desired format if that doesn’t work post me here with the details I will be surely sharing the best possible help.

Attribute Information –

CUSTOMER – Transaction

ITEM – Item for a transaction

Apriori Transaction Table Structure –

Load Data for Analysis –

Before proceeding further please open your Jupyter notebook or any python tool you are using for development i am using jupyter so will be sharing code in that format & later the whole notebook will be shared –

Import Packages

Very first import all the libraries/Packages required further during development

from hana_ml import dataframe

from data_load_utils import DataSets, Settings

Setup Connection

In our case, the data is loaded into a table called “PAL_APRIORI_TRANS_TBL” in HANA from a .csv file “apriori_item_data.csv”. To do that, a connection to HANA is created and then passed to the data loader. To create a such connection, a config file, config/e2edata.ini is used to control the connection parameters. A sample section in the config file is shown below which includes HANA URL, port, user and password information.

*****************

[hana]

url=host-url

user=username

passwd=userpassword

port=3xx15

*******************

url, port, user, pwd = Settings.load_config("../config/e2edata.ini")

# the connection

#print(url , port , user , pwd)

connection_context = dataframe.ConnectionContext(url, port, user, pwd)

print(connection_context.connection.isconnected())

If connect is successful, the output is “True”.

Load Data –

function DataSets.load_apriori_data() is used to decide load or reload the data from scratch. If it is the first time to load data, an example of return message is shown below:

***********************

ERROR:hana_ml.dataframe:Failed to get row count for the current Data-frame, (259, ‘invalid table name: Could not find table/view PAL_APRIORI_TRANS_TBL in schema DM_PAL: line 1 col 37 (at pos 36)’)

Table PAL_APRIORI_TRANS_TBL doesn’t exist in schema DM_PAL

Creating table PAL_APRIORI_TRANS_TBL in schema DM_PAL ….

Drop unsuccessful

Creating table DM_PAL.PAL_APRIORI_TRANS_TBL

Data Loaded:100%

*************************

data_tbl = DataSets.load_apriori_data(connection_context)

Table PAL_APRIORI_TRANS_TBL exists and data exists

Basic Operations on Data-frame just to get some insights of data & attributes –

Create Dataframes

Create a dataframe df from PAL_APRIORI_TRANS_TBL for the following steps.

df = connection_context.table(data_tbl)

df.collect().head(3)

df.dropna() ##Drop NAN if any

Count total number of records in dataset –

df.count() #Total Number of records to be processed in Apriori Algorithm in SAP HANA ML#

6418

Columns –

display(df.columns)

['CUSTOMER', 'ITEM']

#Filter those items which comes under one transaction#

df.filter('CUSTOMER = 0').head(10).collect()

Aggregation on Items –

#This is just a Data Analysis on the items , total number of transaction appearance for an item#

df.agg([('count' , 'ITEM' , 'TOTAL TRANSACTIONS')] , group_by='ITEM').head(10).collect()

#Describe data in dataframe unique customers & unique items are displayed#

df.describe().collect()

It is showing unique items & customers

◈ Display distinct items

◈ Count all the distinct items

df_distinct = df.distinct(cols=['CUSTOMER' , 'ITEM']).collect()

df_distinct

Output – 6418 rows × 2 columns

Distinct table displays a huge similarity in all the transaction and items like user can see almonds has appeared in 565 different transactions and total transaction 565 indicates that it hasn’t always been bought as one item may be combination of one , two or three item together and we are going to find that soon.., it’s equally applicable for all other different items as well in the list.

df_distinct.count() ##No Duplicate transaction

Customer 6418

Item 6418

– Display the bar chart for first 20 items

df_distinct_new = df_distinct.head(15)

df_distinct_new.plot(kind='bar' , x = 'ITEM' , y = 'CUSTOMER')

Bar char is explaining the items and number of customers who bought that item , Such kind of a cross data analysis will be done through different Association analysis algorithms where we will get to know the items which are getting frequently picked.

print(df.dtypes())

Data analysis application of association analysis – In this section we are going to apply various association analysis methods for frequent pattern mining in data.

Apriori –

Our goal is to find out frequent pattern mining from the data hence we are going to apply the Apriori method very first import the Apriori method from HANA ML.

from hana_ml.algorithms.pal.association import Apriori

Mini Support - 0.001 as our transaction volume is very high so before passing this value please check total no of trans. (explained above in example)

Mini Confidence – This is also depend on the total number of transactions into data (explained above)

Mini lift – pass it at least 1 as it is considered a high association value

Note – you can also manipulate with values of Apriori method & analyse the result

ap = Apriori(conn_context=connection_context,

min_support=0.001,

min_confidence=0.5,

relational=False,

min_lift=1,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

use_prefix_tree=False,

thread_ratio=0,

timeout=3600,

pmml_export='single-row')

1. Calculate the execution time of Apriori method

2. Pass the data into method

Method

fit(data[, transaction, item])

Association rule mining based from the input data.

import time

start = time.time()

ap.fit(data=df)

end = time.time()

print(str(end-start) + " " + "Secs")

ap.result_.head(100).collect()

100 rows X 5 Columns

Result Analysis –

Let’s analyse the first transaction from the result

0 pasta shrimp 0.0115 0.676471 8.351489

Support for item is 0.0115 this indicates the frequency of item in the dataset as we are processing the dataset of record count 6418 hence the value of this field will be low always as it is calculated by dividing the frequency of itemset appearing in all the transaction by total number of transaction.

e.g for Shrimp total count = 162 and total transaction = 6418 Support Count = 162/6418 = 0.025

probability of having shrimp on the cart with the knowledge that pasta is already in the cart is 0.7( round of .67) i.e. confidence which is absolutely a high confidence value & this can be considered a strong association rule.

result are displayed based on the parameters passed in Apriori method for example mini confidence is 0.5 hence in result all the rules which are having min confidence greater or equal to 0.5 are considered

Let’s analyse the next itemset –

98 almonds&green tea soup 0.0010 0.666667 11.594203

here confidence is .66 & lift is 11.5(which is off-course greater than 1) this states that 66% of chances are that soup can be purchased but customer if he/she is buying almonds & green tea.

result is displaying all the preceding & subsequent item & subsequent chances in terms of Confidence & Lift hence purchasing trends can be easily analyse but the Apriori method

Change Lift & Confidence

you can always follow this way by manipulating the parameters and can find different result set for analysis & off-course different set of association rules as well.

ap = Apriori(conn_context=connection_context,

min_support=0.001,

min_confidence=0.7,

relational=False,

min_lift=5,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

use_prefix_tree=False,

thread_ratio=0,

timeout=3600,

pmml_export='single-row')

Method

fit(data[, transaction, item])

Association rule mining based from the input data.

ap.fit(data=df)

Result Analysis – Mini Confidence & Lift value got changed before executing the apriori method, Mini confi = 0.7 & lift is 5 so we can see the result is having all the items which are having mostly the confidence as 1 and lift greater than 5 hence we can consider these as a high association rules so it is totally based on the requirement & total number of transactions absolutely where you can have a decision on the parameters values.

ap.result_.head(100).collect()

Apriori algorithm set up using relational logic:

apr = Apriori(conn_context=connection_context,

min_support=0.001,

min_confidence=0.5,

relational=True,

min_lift=1,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

use_prefix_tree=False,

thread_ratio=0,

timeout=3600,

pmml_export='single-row')

apr.fit(data=df)

apr.antec_.head(5).collect()

apr.conseq_.head(5).collect()

apr.stats_.head(5).collect()

Result Analysis

Same result is displayed in this relational as well but in form of 3 different table & using rule_id as key So one rule id is generated for one item (if item is frequent) & first table indicates ANTECEDENTITEM (preceding item)

second table CONSEQUENTITEM (subsequent item) & third table is displaying stats for that particular rule id containing support confidence & lift hence we can filter those records from stats table which are having high association.

Attributes/Parameters of Apriori method

Attributes

result_ (DataFrame) Mined association rules and related statistics, structured as follows: – 1st column : antecedent(leading) items. – 2nd column : consequent(dependent) items. – 3rd column : support value. – 4th column : confidence value. – 5th column : lift value. Available only when relational is False.

model_

(DataFrame) Apriori model trained from the input data, structured as follows: – 1st column : model ID, – 2nd column : model content, i.e. Apriori model in PMML format.

antec_

(DataFrame) Antecdent items of mined association rules, structured as follows: – lst column : association rule ID, – 2nd column : antecedent items of the corresponding association rule. Available only when relational is True.

conseq_

(DataFrame) Consequent items of mined association rules, structured as follows: – 1st column : association rule ID, – 2nd column : consequent items of the corresponding association rule. Available only when relational is True.

stats_

(DataFrame) Statistis of the mined association rules, structured as follows: – 1st column : rule ID, – 2nd column : support value of the rule, – 3rd column : confidence value of the rule, – 4th column : lift value of the rule. Available only when relational is True.

AprioriLite –

A light version of Apriori algorithm for association rule mining, where only two large item sets are calculated.

Set up parameters for light Apriori algorithm, ingest the input data, and check the result table:

from hana_ml.algorithms.pal.association import AprioriLite ##Import AprioriLite version of HANA ML

apl = AprioriLite(conn_context=connection_context,

min_support=0.001, ##Minimum Support Values 0.001

min_confidence=0.6, ##Let's have it 0.5 atleast

subsample=1.0,

recalculate=False,

timeout=3600,

pmml_export='single-row')

Method

fit(data[, transaction, item])

Association rule mining based from the input data.

import time

start = time.time()

apl.fit(data=df)

end = time.time()

print(str(end-start) + " " + "Secs")

Result Analysis

Here result says the same as we did above in Apriori method the only difference it works only on Two large itemsets hence it is just displaying preceding & Subsequent item with their stats , Support Confidence & lift.

Support , Confidence & lift the explanation is absolutely same as apriori & below tables displays the high association rues for the items.

apl.result_.head(1000).collect()

FPGrowth –

from hana_ml.algorithms.pal.association import FPGrowth

fpg = FPGrowth(conn_context=connection_context,

min_support=0.0001,

min_confidence=0.5,

relational=False,

min_lift=1.0,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

thread_ratio=0,

timeout=3600)

import time

start = time.time()

fpg.fit(data=df)

end = time.time()

print(str(end-start) + " " + "Secs")

fpg.result_.collect()

Result Analysis –

◈ FPGrowth method works on Divide & Conquer Approach & Faster than Apriori Algorithm

◈ This builds FP tree using for finding the frequent itemset

◈ Apriori utilize a level-wise approach where it will generate patterns containing 1 items, then 2 items, 3 items, etc.

◈ Result Analysis Decrease the support count tells you that the frequency of item in total transaction is very low So if someone wants to do some analysis on those then it is fine else we can consider only those items which are frequent enough for example consider those items only which transacts 50 times in total of 10000 records then we can pass consider mini support count 0.005

Let’s increase the support count & re-evaluate the method

fpg = FPGrowth(conn_context=connection_context,

min_support=0.005,

min_confidence=0.6,

relational=False,

min_lift=1.0,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

thread_ratio=0,

timeout=3600)

import time

start = time.time()

fpg.fit(data=df)

end = time.time()

print(str(end-start) + " " + "Secs")

fpg.result_.collect()

Support for first record is 0.0115 it clearly states that around 73 times this transaction has appeared hence we can surely say that if someone is purchasing pasta then 67% chances are that they will also buy shrimp as well.

from result displayed above can easily be considered as high Association rules as it contains a good support , confidence & lift So from all the mentioned techniques we can manipulate the parameters Support , Confidence & Lift & analyse the different result for Market-Basket Analysis as all the three parameters play a important rule in execution of method.

FPGrowth algorithm set up using relational logic:

fpgr = FPGrowth(conn_context=connection_context,

min_support=0.001,

min_confidence=0.6,

relational=True,

min_lift=1.0,

max_conseq=1,

max_len=5,

ubiquitous=1.0,

thread_ratio=0,

timeout=3600)

import time

start = time.time()

fpgr.fit(data=df)

end = time.time()

print(str(end-start) + " " + "Secs")

◈ Again mining association rules using FPGrowth algorithm for the input data, and check the resulting tables:

◈ No of frequent preceding items with rule id as key.

fpgr.antec_.collect()

◈ No of subsequent items having rule ID as key & satisfying the criteria we passed during FRGrowth method call

◈ Joining of these two tables based on the rule id can result all the antecedent & consequent items & rules can be considered as strong association rules

fpgr.conseq_.collect()

fpgr.stats_.collect()

Here stats clearly depicts that all the rules which are having strong association have been displayed for example the Support , Confidence & Lift for rule ID – 0 is high enough to be considered.

If user wants to further these result then filter on the Rules can be applied after the result display.

Filter –

Filter result as required , it is just displaying on those records which are having lift greater than 5

fpgr.stats_.filter('LIFT > 5').collect()

KORD

◈ K-optimal rule discovery (KORD) follows the idea of generating association rules with respect to a well-defined measure, instead of first finding all frequent itemsets and then generating all possible rules.

◈ Import KORD algorithms from HANA ML package

from hana_ml.algorithms.pal.association import KORD

◈ Set up a KORD instance:

krd = KORD(conn_context=connection_context,

k=50,

measure='lift',

min_support=0.001,

min_confidence=0.5,

epsilon=0.1,

use_epsilon=False)

start = time.time()

krd.fit(data=df , transaction='CUSTOMER' , item='ITEM')

end = time.time()

print(str(end-start) + " " + "Secs")

Result Analysis

◈ KORD display result in the form of 3 different table first contains all the preceding items(ANTECEDENT) which satisfies the criteria we passed above for example mini confidence & support

◈ Second table represents CONSEQUENT items , items which have followers in first table & all the tables containing the relations parameters as RULE_ID , RULE_ID can be used to join the tables if user wants any common value from data

◈ Third table displays the stats which contains all the rules which are passed in KORD criteria for example all the filtered rule

krd.antec_.collect()

◈ Frequent consequent items

krd.conseq_.collect()

◈ KORD Stats for frequent association rule mining

krd.stats_.collect()

Note – data format issues

If data format is not as the algorithm requires then below is sample code can be used to process the data & can convert it into the desired format

df – > your dataframe which is taking csv file from your system , mention in signature if it has header , else ignore it

df = pd.read_csv(r”C:\Users\XXXX\Desktop\HANA_ML\Apriori\grocery_data_new_2000.csv” ,header=None)

df = pd.read_csv(r”C:\Users\XXXX\Desktop\HANA_ML\Apriori\apriori_50.csv” , sep=’\t’, header=None)

df_main = pd.DataFrame(columns=['TRANS' , 'ITEMS']) ##declare pandas dataframe

count = 0

for j in range(len(df)):

for i in range(len(df.T)):

df_main = df_main.append({'TRANS': count, 'ITEMS': df[i][j]} , ignore_index=True)

count = count + 1

Save the file into data-sets folder & upload this into SAP HANA

df_main.to_csv(r"C:\Users\XXXXX\Desktop\HANA_ML\Apriori\apriori_231.csv")

save this file somewhere in your system & later this can be used for uploading into SAP HANA.

No comments:

Post a Comment