>>> from math import pi

>>> π = pi

>>> area = π * r**2

>>> résumé = "knows Python"

>>> "Python" in résumé

True

Python is a powerful tool in the Data Scientist’s toolbox. A quick search on LinkedIn will support this hypothesis (if you had any doubts). At SAP we appreciate that Python is not just a nice-to-have, but an expectation for modern technology platforms and from SAP HANA 2.0 (Express Edition Support Package 03, Revision 033), a Python Client API will provide integration for Data Scientist’s to accelerate and enhance their work.

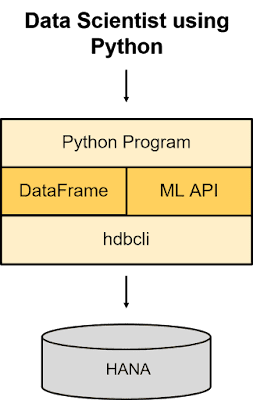

The API makes use of the HANA Python Driver (hdbcli) as shown by this diagram:

# The second method collect() returns the results in a Pandas DataFrame

2. This query is then run by a cursor() and returned with a fetchall() method.

3. The returned results are handled by the collect() method and copied into a Pandas DataFrame, along with the names of the columns.

Now that we have our DataFrame, we have a collection of methods that we can invoke covering 3 capabilities:

2. Descriptive Functions: statistics relating to the data, showing distinct values, creating DataFrame with top n values, etc.

3. DataFrame Transformations: copying an SAP HANA DataFrame to a Pandas DataFrame and materialize a DataFrame to a table.

Here is an example of how to use a descriptive function on the DataFrame:

>>> π = pi

>>> area = π * r**2

>>> résumé = "knows Python"

>>> "Python" in résumé

True

Python is a powerful tool in the Data Scientist’s toolbox. A quick search on LinkedIn will support this hypothesis (if you had any doubts). At SAP we appreciate that Python is not just a nice-to-have, but an expectation for modern technology platforms and from SAP HANA 2.0 (Express Edition Support Package 03, Revision 033), a Python Client API will provide integration for Data Scientist’s to accelerate and enhance their work.

The API makes use of the HANA Python Driver (hdbcli) as shown by this diagram:

The main two components that a Data Scientist will use are:

1. The SAP HANA DataFrame

2. The Machine Learning API

For the first part of this blog series, I will cover the HANA DataFrame and the second part will cover the Machine Learning API.

The SAP HANA DataFrame

Picture this… you’ve recently set up a team of highly skilled Data Scientists.

◈ 5+ years experience in IT? Check.

◈ Strong research background? Check.

◈ PhD in Astrophysics, Mathematics, Neuroscience etc…? Check. *sigh*.

Great. You’re surely on the path to success. You assign the team a task of building a fraud detection system using a database of 50 billion rows across 10 tables. A robust prototype algorithm must be presented in a months time.

What could go wrong? You have the skills, you have the data… but all too often Data Science is seen as more of an analysis task than a process.

When Data Science is appreciated as a process, performance and deployment are paramount and this is the difference between a “Data Science Project”, and building industrial machine learning.

The biggest performance hurdle comes from the data, and when dealing with the plethora of channels and data sources available… this equates to a LOT of data. When using Python IDE’s such as Jupyter, the data is persisted to the client and this means more processing time, which directly converts into a drop of productivity for your prized unicorns/Data Scientists.

“So how can we avoid this seemingly unavoidable issue? My Data Scientists don’t want to use proprietary tools, they like Python!”

This is where the SAP HANA DataFrame can add real value to a Data Scientist’s work.

What is it?

An SAP HANA DataFrame provides a way to view the data stored in SAP HANA without containing any of the physical data. The Machine Learning API’s make use of an SAP HANA DataFrame as input for training and scoring purposes. An SAP HANA DataFrame hides the underlying SQL statement, providing users with a Python interface to SAP HANA data.

What’s the value?

1. By reducing data transfer, this massively improves productivity and speed. This means faster development cycles, rapid prototyping and more flexibility.

2. No need to re-train or upskill Data Scientist’s with new tools. Use Pythonic syntax that they are comfortable and familiar with.

3. Open-ness. By combining open source packages with the Python API, we are not restricting Data Scientists to use specific tools. We are opening our technology to the Data Science world.

How can I use it?

For detailed instructions on how to set up the Python Client API, see this tutorial. Now, I will give you some details on how to use the API.

◈ To use an SAP HANA DataFrame you must create the “ConnectionContext” object, then use the methods provided in the library for creating an SAP HANA dataframe.

◈ The DataFrame is only usable while the “ConnectionContext” is open, and is inaccessible once the connection is closed.

◈ Let’s open the “ConnectionContext” and invoke a table() method for creating a DataFrame:

# First instantiate the Connection Object (conn)

conn = dataframe.ConnectionContext("IP ADDRESS", "PORT", "USER", "PASSWORD")

# Then create the HANA dataframe (df) and point to the table.

df = conn.table("TABLE", schema="SCHEMA")

df.head(5).collect()

| ITEM_IDENTIFIER | ITEM_WEIGHT | ITEM_FAT_CONTENT | ITEM_VISIBILITY | ITEM_TYPE | ITEM_MRP |

| FDA15 | 9.3 | Low Fat | 0.016047 | Dairy | 249.8092 |

| DRC01 | 5.92 | Regular | 0.019278 | Soft Drinks | 48.2692 |

| FDN15 | 17.5 | Low Fat | 0.01676 | Meat | 141.618 |

| FDX07 | 19.2 | Regular | 0 | Fruits and Vegetables | 182.095 |

| NCD19 | 8.93 | Low Fat | 0 | Household | 53.8614 |

| OUTLET_IDENTIFIER | OUTLET_ESTABLISHMENT_YEAR | OUTLET_SIZE | ITEM_VISIBILITY | OUTLET_TYPE | ITEM_OUTLET_SALES |

| OUT049 | 1999 | Medium | Tier 1 | Supermarket Type1 | 3735.138 |

| OUT018 | 2009 | Medium | Tier 3 | Supermarket Type2 | 443.4228 |

| OUT049 | 1999 | Medium | Tier 1 | Supermarket Type1 | 2097.27 |

| OUT010 | 1998 | Tier 3 | Grocery Store | 732.38 | |

| OUT013 | 1987 | High | Tier 3 | Supermarket Type1 | 994.7052 |

To understand what is happening behind the scenes, let’s take a look at the SQL that is generated and pushed down to HANA:

-- The first method, head() generates this SQL

SELECT TOP 5 * FROM (SELECT * FROM "SCHEMA"."TABLE") dt

# The second method collect() returns the results in a Pandas DataFrame

return pd.DataFrame(results, columns=self.columns)

1. The first select statement is generated by the head() method.

2. This query is then run by a cursor() and returned with a fetchall() method.

3. The returned results are handled by the collect() method and copied into a Pandas DataFrame, along with the names of the columns.

Now that we have our DataFrame, we have a collection of methods that we can invoke covering 3 capabilities:

1. DataFrame Manipulations: casting columns into a new type, dropping columns, filling null values, joining dataframes, sorting dataframes, renaming columns, etc.

2. Descriptive Functions: statistics relating to the data, showing distinct values, creating DataFrame with top n values, etc.

3. DataFrame Transformations: copying an SAP HANA DataFrame to a Pandas DataFrame and materialize a DataFrame to a table.

Here is an example of how to use a descriptive function on the DataFrame:

# Describe all columns in a DataFrame

df.describe().collect()

| column | count | unique | nulls | mean |

| ITEM_WEIGHT | 8523 | 416 | 0 | 10.65059 |

| ITEM_VISIBILITY | 8523 | 7880 | 0 | 0.066132 |

| ITEM_MRP | 8523 | 5938 | 0 | 140.9928 |

| OUTLET_ESTABLISHMENT_YEAR | 8523 | 9 | 0 | 1997.832 |

| ITEM_OUTLET_SALES | 8523 | 3493 | 0 | 2181.289 |

| std | min | unique | median | 25_percent_cont |

| 6.431899 | 0 | 416 | 11 | 6.65 |

| 0.051598 | 0 | 7880 | 0.053931 | 0.026989 |

| 62.27507 | 31.29 | 5938 | 143.0128 | 93.8265 |

| 8.37176 | 1985 | 2009 | 1999 | 1987 |

| 1706.5 | 33.29 | 13086.96 | 1794.331 | 834.2474 |

| 25_percent_disc | 50_percent_cont | 50_percent_disc | 75_percent_cont | 75_percent_disc |

| 6.65 | 11 | 11 | 16 | 16 |

| 0.026986 | 0.053931 | 0.053931 | 0.094585 | 0.094603 |

| 93.8094 | 143.0128 | 143.0128 | 185.6437 | 185.6608 |

| 1987 | 1999 | 1999 | 2004 | 2004 |

| 833.5816 | 1794.331 | 1794.331 | 3101.296 | 3101.296 |

Once again, let’s take a look at the SQL that is generated and pushed down to HANA (this example shows the SQL just for numeric columns):

-- First some simple statistics are calculated

SELECT 'ITEM_WEIGHT' AS "column",

COUNT("ITEM_WEIGHT") AS "count",

COUNT(DISTINCT "ITEM_WEIGHT") AS "unique",

SUM(CASE WHEN "ITEM_WEIGHT" IS NULL THEN 1 ELSE 0 END) AS "nulls",

AVG("ITEM_WEIGHT") AS "mean",

STDDEV("ITEM_WEIGHT") AS "std",

MIN("ITEM_WEIGHT") AS "min",

MAX("ITEM_WEIGHT") AS "max",

MEDIAN("ITEM_WEIGHT") AS "median" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0"

UNION ALL SELECT 'ITEM_VISIBILITY' AS "column",

COUNT("ITEM_VISIBILITY") AS "count",

COUNT(DISTINCT "ITEM_VISIBILITY") AS "unique",

SUM(CASE WHEN "ITEM_VISIBILITY" IS NULL THEN 1 ELSE 0 END) AS "nulls",

AVG("ITEM_VISIBILITY") AS "mean",

STDDEV("ITEM_VISIBILITY") AS "std",

MIN("ITEM_VISIBILITY") AS "min",

MAX("ITEM_VISIBILITY") AS "max",

MEDIAN("ITEM_VISIBILITY") AS "median" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0"

UNION ALL SELECT 'ITEM_MRP' AS "column",

COUNT("ITEM_MRP") AS "count",

COUNT(DISTINCT "ITEM_MRP") AS "unique",

SUM(CASE WHEN "ITEM_MRP" IS NULL THEN 1 ELSE 0 END) AS "nulls",

AVG("ITEM_MRP") AS "mean",

STDDEV("ITEM_MRP") AS "std",

MIN("ITEM_MRP") AS "min",

MAX("ITEM_MRP") AS "max",

MEDIAN("ITEM_MRP") AS "median" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0"

UNION ALL SELECT 'OUTLET_ESTABLISHMENT_YEAR' AS "column",

COUNT("OUTLET_ESTABLISHMENT_YEAR") AS "count",

COUNT(DISTINCT "OUTLET_ESTABLISHMENT_YEAR") AS "unique",

SUM(CASE WHEN "OUTLET_ESTABLISHMENT_YEAR" IS NULL THEN 1 ELSE 0 END) AS "nulls",

AVG("OUTLET_ESTABLISHMENT_YEAR") AS "mean",

STDDEV("OUTLET_ESTABLISHMENT_YEAR") AS "std",

MIN("OUTLET_ESTABLISHMENT_YEAR") AS "min",

MAX("OUTLET_ESTABLISHMENT_YEAR") AS "max",

MEDIAN("OUTLET_ESTABLISHMENT_YEAR") AS "median" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0"

UNION ALL SELECT 'ITEM_OUTLET_SALES' AS "column",

COUNT("ITEM_OUTLET_SALES") AS "count",

COUNT(DISTINCT "ITEM_OUTLET_SALES") AS "unique",

SUM(CASE WHEN "ITEM_OUTLET_SALES" IS NULL THEN 1 ELSE 0 END) AS "nulls",

AVG("ITEM_OUTLET_SALES") AS "mean",

STDDEV("ITEM_OUTLET_SALES") AS "std",

MIN("ITEM_OUTLET_SALES") AS "min",

MAX("ITEM_OUTLET_SALES") AS "max",

MEDIAN("ITEM_OUTLET_SALES") AS "median" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0";

-- Second, percentiles are calculated

SELECT 'ITEM_WEIGHT' as "column",

* FROM (SELECT percentile_cont(0.25) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "25_percent_cont",

percentile_disc(0.25) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "25_percent_disc",

percentile_cont(0.50) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "50_percent_cont",

percentile_disc(0.50) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "50_percent_disc",

percentile_cont(0.75) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "75_percent_cont",

percentile_disc(0.75) WITHIN GROUP (ORDER BY "ITEM_WEIGHT") AS "75_percent_disc" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0")

UNION ALL SELECT 'ITEM_VISIBILITY' as "column",

* FROM (SELECT percentile_cont(0.25) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "25_percent_cont",

percentile_disc(0.25) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "25_percent_disc",

percentile_cont(0.50) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "50_percent_cont",

percentile_disc(0.50) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "50_percent_disc",

percentile_cont(0.75) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "75_percent_cont",

percentile_disc(0.75) WITHIN GROUP (ORDER BY "ITEM_VISIBILITY") AS "75_percent_disc" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0")

UNION ALL SELECT 'ITEM_MRP' as "column",

* FROM (SELECT percentile_cont(0.25) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "25_percent_cont",

percentile_disc(0.25) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "25_percent_disc",

percentile_cont(0.50) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "50_percent_cont",

percentile_disc(0.50) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "50_percent_disc",

percentile_cont(0.75) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "75_percent_cont",

percentile_disc(0.75) WITHIN GROUP (ORDER BY "ITEM_MRP") AS "75_percent_disc" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0")

UNION ALL SELECT 'OUTLET_ESTABLISHMENT_YEAR' as "column",

* FROM (SELECT percentile_cont(0.25) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "25_percent_cont",

percentile_disc(0.25) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "25_percent_disc",

percentile_cont(0.50) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "50_percent_cont",

percentile_disc(0.50) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "50_percent_disc",

percentile_cont(0.75) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "75_percent_cont",

percentile_disc(0.75) WITHIN GROUP (ORDER BY "OUTLET_ESTABLISHMENT_YEAR") AS "75_percent_disc" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0")

UNION ALL SELECT 'ITEM_OUTLET_SALES' as "column",

* FROM (SELECT percentile_cont(0.25) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "25_percent_cont",

percentile_disc(0.25) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "25_percent_disc",

percentile_cont(0.50) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "50_percent_cont",

percentile_disc(0.50) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "50_percent_disc",

percentile_cont(0.75) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "75_percent_cont",

percentile_disc(0.75) WITHIN GROUP (ORDER BY "ITEM_OUTLET_SALES") AS "75_percent_disc" FROM (SELECT * FROM "SCHEMA"."TABLE") AS "DT_0");

1. The first statement generates basic statistics for the columns.

2. The second statement generates 25, 50 and 75 percentile calculations for each column.

3. Finally, both statements are assigned an alias and called in a final select statement, bringing the results together into one table.

If you wish to view the generated SQL without using the collect method, you can issue the following statement:

# This will return the SQL statement for the describe method

df.describe().select_statement

No comments:

Post a Comment