With HANA Data Modeling Tools SPS02 in SAP Web IDE, two new flags were introduced that enforce the push-down of filters to lower nodes in specific situations in which filter push-down would not happen by default. One flag is available in Rank nodes. The other flag is available in all nodes but only has an effect if the respective node is consumed by more than one succeeding node.

Push-down here means that a filter that is defined at a node (or in a query) becomes effective in lower nodes. Figuratively, the filter is “pushed down” to lower nodes. A push-down of filters is normally desired because it reduces the number of records at earlier processing stages. Reducing the number of records early saves resources such as memory and CPU and leads to shorter runtimes. Therefore, filters are typically pushed down automatically as long as the push-down preserves the semantics and thus the results.
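As a rough illustration of a push-down that preserves the result, the following Python sketch applies the same filter once above a join and once below it. The tables, column names, and the `join` helper are hypothetical and only model the idea; they do not reproduce how HANA executes a calculation view.

```python
# Hypothetical data: (order_id, customer, amount) and (customer, country).
ORDERS = [(1, "C1", 100), (2, "C2", 70), (3, "C1", 50), (4, "C3", 20)]
CUSTOMERS = [("C1", "DE"), ("C2", "US"), ("C3", "DE")]


def join(orders, customers):
    """Inner join of orders and customers on the customer column."""
    country_of = dict(customers)
    return [
        (order_id, customer, amount, country_of[customer])
        for order_id, customer, amount in orders
        if customer in country_of
    ]


# Filter applied at the top: join everything, then keep country == "DE".
joined_all = join(ORDERS, CUSTOMERS)
filtered_late = [row for row in joined_all if row[3] == "DE"]

# Filter pushed down: reduce the customers first, then join.
de_customers = [c for c in CUSTOMERS if c[1] == "DE"]
filtered_early = join(ORDERS, de_customers)

print(filtered_late == filtered_early)        # same result either way
print(len(joined_all), len(filtered_early))   # fewer intermediate rows when pushed down
```

In this sketch both variants return the same rows, but the pushed-down variant never materializes the rows that the filter would discard anyway.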

This push-down does not occur by default for Rank nodes and for nodes that feed into more than one other node. The reason is that in these cases the level at which the filter is applied (before or after the node) can change the result. The developer can nevertheless enforce the push-down by setting the newly introduced flags. To benefit from the performance improvement of an enforced filter push-down without suffering unintended semantic changes, a thorough understanding of the flags is required. This is why the impact of these flags is discussed here.

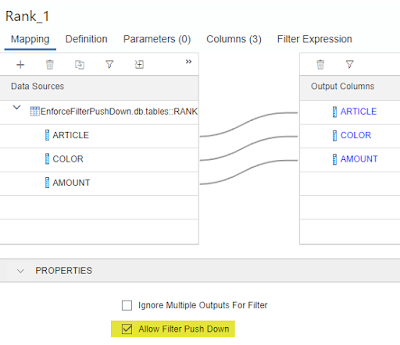

Flag “Allow Filter Push Down” (available in Rank nodes)

If a filter is defined above a Rank node on a column that is not used as a partition criterion in the Rank node, the filter will not be pushed down by default. This can be overridden by setting the flag “Allow Filter Push Down” in the Mapping tab of the Rank node, as shown in the screenshot below.

Flag to enforce the push-down of filters below the Rank node
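To see why the position of the filter matters for a Rank node, consider the following minimal Python sketch. It models a "top 1 per customer by amount" rank and compares filtering after the rank (the default) with filtering before the rank (the enforced push-down). The data, the column names, and the helper functions are hypothetical and are not part of the modeling tools.

```python
from collections import defaultdict

# Hypothetical rows: (customer, product, amount). "customer" is the partition column.
ROWS = [
    ("C1", "A", 100),
    ("C1", "B", 50),
    ("C2", "B", 70),
    ("C2", "A", 30),
]
CUSTOMER, PRODUCT, AMOUNT = 0, 1, 2


def top_n_per_customer(rows, n=1):
    """Keep the n rows with the highest amount for each customer."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[CUSTOMER]].append(row)
    result = []
    for customer in sorted(groups):
        ranked = sorted(groups[customer], key=lambda r: r[AMOUNT], reverse=True)
        result.extend(ranked[:n])
    return result


def filter_rows(rows, column, value):
    """Keep only the rows whose given column equals value."""
    return [row for row in rows if row[column] == value]


# Filter on a non-partition column (product): the position changes the result.
after_rank = filter_rows(top_n_per_customer(ROWS), PRODUCT, "B")
before_rank = top_n_per_customer(filter_rows(ROWS, PRODUCT, "B"))
print(after_rank)   # [('C2', 'B', 70)]
print(before_rank)  # [('C1', 'B', 50), ('C2', 'B', 70)]

# Filter on the partition column (customer): the position does not matter.
part_after = filter_rows(top_n_per_customer(ROWS), CUSTOMER, "C1")
part_before = top_n_per_customer(filter_rows(ROWS, CUSTOMER, "C1"))
print(part_after == part_before)  # True
```

Filtering after the rank answers "which top-ranked rows are product B?", whereas filtering before the rank answers "what is the top-ranked row among the product B rows?". Which of the two is intended decides whether the flag should be set.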

Flag “Ignore Multiple Outputs For Filter”

The “Ignore Multiple Outputs For Filter” flag is used when a node is consumed by more than one node. The workings of the flag will be demonstrated in two examples below. The flag has only local effects and therefore has to be set in the respective node that has more than one consumer. If the flag is set in the “View Properties”, it only becomes effective if the model in which it is set is consumed more than once by another model. In nodes, the flag becomes visible when selecting the Mapping tab of a node without selecting any Data Source in the Mapping dialog (see screenshot below).
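The following Python sketch is a simplified model of why filter placement can matter for a node with two consumers. It assumes that the filter is defined on only one of the two consuming paths; the data, the consumer functions, and the `run` helper are hypothetical and do not reproduce the behavior of the HANA optimizer.

```python
# Hypothetical output of the shared node: (region, amount).
SHARED_ROWS = [("EU", 10), ("US", 20), ("EU", 5)]


def consumer_a(rows):
    """Sum of amounts; the filter region == 'EU' is defined on this path."""
    return sum(amount for region, amount in rows if region == "EU")


def consumer_b(rows):
    """Row count; no filter is defined on this path."""
    return len(rows)


def run(rows, push_filter_into_shared_node):
    """Evaluate both consumers, optionally filtering in the shared node first."""
    if push_filter_into_shared_node:
        # Enforced push-down: the shared node itself only emits 'EU' rows,
        # so every consumer sees the reduced data.
        rows = [row for row in rows if row[0] == "EU"]
    return consumer_a(rows), consumer_b(rows)


default_result = run(SHARED_ROWS, push_filter_into_shared_node=False)
enforced_result = run(SHARED_ROWS, push_filter_into_shared_node=True)
print(default_result)   # (15, 3)
print(enforced_result)  # (15, 2)
```

In this model the filtered path returns the same value in both cases, while the unfiltered path loses a row once the filter takes effect in the shared node.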

These examples demonstrate potential differences in results when the push-down of filters is enforced by flags. In each case, the developer should ensure that early filtering is indeed the intended semantics. Used correctly, these flags help reduce data at early processing stages and thus reduce memory consumption and runtime.
