Build Smart Data Streaming project

The fictional scenario behind my project is the following:

I have several workspace locations (store, factory, library …) across different countries, where I would like to monitor temperature, humidity, air quality and air density.

Among these locations, I want to track only my IT room in Mexico, where 12 employees work with many computers.

To do so, I will combine my WORKSPACE table entries, which contain my location information, with the incoming sensor data, filter for the Mexico location only, and store the output in a dedicated table.
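To make the data flow concrete, here is a minimal sketch of the join-and-filter logic in plain Python. It is only an illustration, not the actual streaming project: the field names (`location_id`, `country`, `temperature`, `humidity`) and the sample rows are assumptions.

```python
# Hypothetical reference data standing in for the WORKSPACE table.
WORKSPACE = {
    "IOTLAB": {"name": "IT Room", "country": "Mexico"},
    "STORE01": {"name": "Store", "country": "France"},
}


def join_with_workspace(event, workspace):
    """Enrich a sensor event with its location details (inner join)."""
    location = workspace.get(event["location_id"])
    if location is None:
        return None  # no matching location: the event is dropped
    return {**event, **location}


def filter_country(events, country):
    """Keep only the joined events for the given country."""
    return [e for e in events if e["country"] == country]


# Hypothetical sensor readings, one per location.
events = [
    {"location_id": "IOTLAB", "temperature": 35, "humidity": 26},
    {"location_id": "STORE01", "temperature": 21, "humidity": 40},
]

joined = [j for j in (join_with_workspace(e, WORKSPACE) for e in events) if j]
mexico_only = filter_country(joined, "Mexico")
print(mexico_only)
```

The join stream corresponds to `joined` (all locations) and the filtered stream to `mexico_only`.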

My project is named “environment_sds”.

Once my project is built, I compile it to check for errors by clicking the compile button.

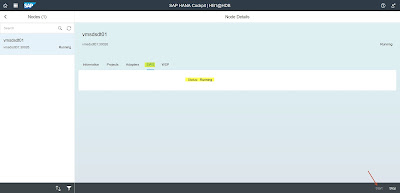

If there are no errors, click “Run Streaming Project” to test it.

Before running the test, let’s take a quick look at the different windows and streams to see what my incoming data will look like. I click on each component to inspect it.

Now I will simulate the incoming data by choosing “Manual Input” and selecting the input stream.

I insert random values for each field declared in my input stream and execute.

Once done, I can see that 1 row has been recorded for my output adapter.

My input stream is filled with the values.

My join stream has the data populated for all locations.

And my filtered stream contains only the Mexico location.

Finally, for the last validation: as mentioned earlier, I have a table named “MEXFL” that stores all the captured data and into which my output adapter pushes the data. I check that my data has been recorded.

Build Streaming Lite project

The Streaming Lite project to build is very simple; it consists of the same input stream as the SDS project plus an output adapter.

Streaming Lite supports only the “Streaming Web Output” adapter; here is the detail of what should be entered for each parameter:

Protocol -> websocket or rest

Destination Cluster Auth. Data -> <user/password>

SWS Server Host -> the SDS host (not the HANA host)

SWS Server Port -> the communication port

Dest. Workspace project -> the workspace where the project is created

Dest. Project name -> name of the target project

Dest. Stream name -> name of the stream input in SDS to fill
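To keep these settings in one place while preparing the project, they can be written down as a small Python structure with a basic sanity check. This is a note-taking sketch only: the class, the attribute names and every example value except the project name are hypothetical, and nothing here is read by Streaming Lite itself.

```python
from dataclasses import dataclass

ALLOWED_PROTOCOLS = {"websocket", "rest"}


@dataclass
class WebOutputSettings:
    protocol: str        # Protocol
    auth_data: str       # Destination Cluster Auth. Data
    sws_host: str        # SWS Server Host (the SDS host)
    sws_port: int        # SWS Server Port
    dest_workspace: str  # Dest. Workspace project
    dest_project: str    # Dest. Project name
    dest_stream: str     # Dest. Stream name

    def problems(self):
        """Return a list of human-readable issues (empty if none)."""
        issues = []
        if self.protocol not in ALLOWED_PROTOCOLS:
            issues.append("protocol must be websocket or rest")
        if not 1 <= self.sws_port <= 65535:
            issues.append("port out of range")
        for name in ("auth_data", "sws_host", "dest_workspace",
                     "dest_project", "dest_stream"):
            if not getattr(self, name):
                issues.append(f"{name} is empty")
        return issues


# Placeholder values for illustration only.
settings = WebOutputSettings(
    protocol="websocket",
    auth_data="<user/password>",
    sws_host="sds-host.example",
    sws_port=9091,
    dest_workspace="default",
    dest_project="environment_sds",
    dest_stream="IOTLAB",
)
print(settings.problems())
```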

Once the Streaming Lite project is done, I compile it to check that there are no errors.

Then I load it onto my Raspberry Pi by transferring the .ccx project file via WinSCP.

Once transferred, I test my SL project and try to send data from my Raspberry Pi. To do so, I invoke the streamingproject command, specifying the .ccx location and the port to use.

Before injecting data from my Raspberry Pi, I need to start the Streaming Web Service (SWS) on the Smart Data Streaming node. To start it, I go to the HANA cockpit and select the manage streaming node option.

Click on SWS and start it.

Back on my Raspberry Pi, I run the following command line to load my test data:

echo "IOTLAB,i,,15:04:54 PM,35,26,VERY GOOD,43" | /sap/install/bin/streamingconvert -d "," -p iotlab:9093/environment_st | /sap/install/bin/streamingupload -p iotlab:9093/environment_st
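The record piped into streamingconvert is a comma-delimited line. As far as I understand the format, the first three fields are the stream name, the operation code (`i` for insert) and an empty flags field, followed by the column values. The sketch below builds such a line in Python; the helper name is hypothetical, and reading the columns as time, temperature, humidity, air quality and air density is an assumption based on the scenario.

```python
def build_record(stream, columns, opcode="i", delimiter=","):
    """Build one delimited record: stream, opcode, empty flags, then columns."""
    values = [str(v) for v in columns]
    for value in values:
        if delimiter in value:
            # This sketch does no escaping, so refuse ambiguous input.
            raise ValueError(f"value contains the delimiter: {value!r}")
    return delimiter.join([stream, opcode, ""] + values)


record = build_record("IOTLAB", ["15:04:54 PM", 35, 26, "VERY GOOD", 43])
print(record)  # IOTLAB,i,,15:04:54 PM,35,26,VERY GOOD,43
```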

We can see that my insert was processed successfully and, from a process standpoint, that my connection was initiated.

Now let’s check on the HANA side whether my MEXFL table has received the information; I can see all the inputs from the insert command.

With the load test successfully done, I will complete the last part of the configuration on my Raspberry Pi, which consists of creating a custom Python script that retrieves the sensor data.

Retrieve sensor data and load them into Streaming Lite project

For my IoT project I use a GrovePI board on top of my Raspberry Pi for the sensors, so no sensor is wired directly to the Raspberry Pi itself.

GrovePI executes Python scripts to read the sensors and retrieve the data, so how do I load that data into Streaming Lite?

Once Streaming Lite is deployed on the Raspberry Pi, it uses XML-RPC internally to communicate, which gives several options:

- Create a custom Java adapter from my HANA studio and load it onto my Raspberry Pi

- Communicate directly with the internal XML-RPC server to load my sensor input

- Create a custom Python script that encapsulates the input data and the execution command

I have decided to use the third option. From a sequence standpoint, the first thing to do is to create a dedicated command at OS level.

In /usr/bin I create a command called “stream” and include the following line:

Once done, I test my command and check the result in HANA.

So my command is working. I will now create a test script to see whether the process also works from Python. I will deliberately hard-code some input data.
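A test script of this kind could look like the sketch below. It is not the original script: it assembles the convert-and-upload pipeline from the earlier test command with a hard-coded record, and it only executes the pipeline when explicitly asked to, so it can be dry-run on a machine without Streaming Lite. The function names and the `run` switch are hypothetical.

```python
import shlex
import subprocess

BIN_DIR = "/sap/install/bin"           # install path used in the test command
TARGET = "iotlab:9093/environment_st"  # host:port/project from the test command


def build_pipeline(record, target=TARGET, bin_dir=BIN_DIR):
    """Return the shell pipeline that converts and uploads one record."""
    return (
        f"echo {shlex.quote(record)}"
        f" | {bin_dir}/streamingconvert -d ',' -p {target}"
        f" | {bin_dir}/streamingupload -p {target}"
    )


def send(record, run=False):
    """Build the pipeline; execute it only when run=True."""
    command = build_pipeline(record)
    if run:
        # check=True only reflects the exit status of the last command
        # in the pipeline (streamingupload).
        subprocess.run(command, shell=True, check=True)
    return command


# Hard-coded test data, printed instead of executed (dry run).
print(send("IOTLAB,i,,15:04:54 PM,35,26,VERY GOOD,43"))
```

Calling `send(record, run=True)` on the Raspberry Pi would execute the pipeline instead of just printing it.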

I execute my script and check the data in HANA again.

It works. After this validation I can now create the real Python script that will be used to capture the sensor input and send it to HANA.

Check live data streamed and received in Hana

Below is my final script, used to ship the sensor data captured from GrovePI into HANA.

In the script above, line 25 (P1) captures the sensor data and ends with a pipe ( | ) followed by P2 on line 26, which converts and uploads the data to my Smart Data Streaming server.

To control the cadence of data flowing to HANA, I have decided to read the captured data only every 10 seconds (line 28, time.sleep(10)).

Finally, to compare the data read by the script with what arrives in HANA, I have added a print on line 27, so I can see in real time whether the data matches.
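The read, print, send, sleep structure described here can be summarised in a generic sketch. It is not the original script: the sensor reader and the sender are passed in as functions so the loop can be tried without a GrovePI or a Streaming Lite installation, and all names are hypothetical.

```python
import time


def stream_loop(read_sensor, send, interval=10, iterations=None,
                sleep=time.sleep):
    """Read a record, print it, send it, then wait `interval` seconds.

    Runs forever when `iterations` is None; otherwise stops after that
    many records. Returns the number of records sent.
    """
    sent = 0
    while iterations is None or sent < iterations:
        record = read_sensor()
        print(record)    # lets me compare with what arrives in HANA
        send(record)
        sent += 1
        sleep(interval)  # controls the cadence of the data flow
    return sent


# Dry run with a fake sensor and a list standing in for the upload step.
outbox = []
count = stream_loop(
    read_sensor=lambda: "IOTLAB,i,,15:04:54 PM,35,26,VERY GOOD,43",
    send=outbox.append,
    interval=0,
    iterations=2,
)
print(count, len(outbox))
```

On the Raspberry Pi, `read_sensor` would wrap the GrovePI calls and `send` would run the convert-and-upload pipeline, with the default interval of 10 seconds.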

Let’s run the script now and compare.

As we can see, the data is read and stored properly in my HANA table “MEXFL”, so I can move on to the Dynamic Tiering part.

Store the incoming data in Dynamic Tiering

Earlier in my documentation I installed and configured Dynamic Tiering; its purpose is to avoid keeping a huge amount of data loaded in memory.

To store my MEXFL table in DT, I simply right-click on it and mark it as “extended”.

Note: I let my sensors run for a while in order to capture enough data before moving my table to extended storage.

Moving a table to extended storage is a very simple operation; however, you need to take into consideration factors such as the volume of data to move and the disk write performance.
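As a rough aid for the volume question, the number of rows produced by one sensor feed can be estimated from the sending interval. The sketch below does the arithmetic for a 10-second cadence; the 100 bytes per row is a placeholder assumption, not a measured value for MEXFL.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def rows_per_day(interval_seconds):
    """Rows written per day when one row is sent every interval."""
    return SECONDS_PER_DAY // interval_seconds


def estimated_bytes(days, interval_seconds, bytes_per_row):
    """Rough raw data volume, ignoring compression and overhead."""
    return rows_per_day(interval_seconds) * days * bytes_per_row


print(rows_per_day(10))              # 8640 rows per day
print(estimated_bytes(30, 10, 100))  # 25920000 bytes over 30 days
```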

For my lab I’m using 10k RPM SAS disks and I’m also controlling the frequency at which data is sent (every 10 seconds). For a real-life scenario, consider reading the following document for guidance and best-practice recommendations:

I am done with the DT part now, so I will move on to the next part of my documentation: the creation of my custom application.

Build a custom application for my project

To expose and visualize my data I will create a basic XS application. SAP HANA XS can be used to expose data from tables, views and modeling views to the UI layer.

There are two major ways to expose the data to clients, OData and XSJS; in my case I will use the OData option.

I will briefly explain my steps but won’t go deep, since this is not the main goal of this documentation. Here are the steps:

I start by building the logic (calculation views); for my setup I have created one calc view per application, which will be used by my xsodata services.

Once my calc views are all set, I create my xsapp and 3 folders:

“apps” – where I store my xswidget files, which contain my dynamic applications

“catalog” – where I store my xsappsite, which contains the catalog for the applications

“odata” – where I store the xsodata service used by each individual dynamic application
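Once an xsodata service is active, a client can query it over HTTP with the standard OData query options such as $top, $filter and $format. The sketch below only builds such a URL and sends nothing; the host, port, package path, service file, entity set and column names are all hypothetical placeholders.

```python
from urllib.parse import quote, urlencode


def odata_url(base, service_path, entity_set, top=None, filter_expr=None):
    """Build an OData query URL that asks for JSON output."""
    params = {"$format": "json"}
    if top is not None:
        params["$top"] = str(top)
    if filter_expr:
        params["$filter"] = filter_expr
    # Keep "$" readable and encode spaces as %20 rather than "+".
    query = urlencode(params, safe="$", quote_via=quote)
    return f"{base}/{service_path}/{entity_set}?{query}"


url = odata_url(
    "http://hana.example:8000",      # placeholder host and port
    "iot/odata/environment.xsodata",  # placeholder package and service file
    "Readings",                       # placeholder entity set
    top=10,
    filter_expr="TEMPERATURE gt 30",
)
print(url)
```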

Once my build is completed, this is what my application looks like:

My setup is completed for this part; in my next blog I will explain how to configure HSR for this particular setup with Dynamic Tiering and Smart Data Streaming.
